Microsoft Azure uses Roles to define who can access what: Role-Based Access Control (RBAC).

You may be familiar with some of the more common ones, such as:

Behind the scenes, each role is a separate grouping of permissions that determines what level of access someone or something has in Azure; these permissions are usually in the form of:

Each role can be assigned to a specific Resource, Subscription, Management Group or Resource Group through an 'Assignment' (you assign a role if you give someone Contributor rights to a Resource Group, for example).

These permissions can be manipulated and custom roles created.

Why would you use custom roles, you ask? As usual, it depends!

Custom Roles can give people or objects JUST the right amount of permissions to do what they need to do, nothing more and nothing less. For example, maybe you are onboarding a support partner: if they will only be supporting your Logic Apps, WebApps and Backups, you may not want them to be able to log support cases for your Azure resources. Instead of attempting to mash several roles together that may give more or fewer rights than you need, you can create a custom role that specifically gives them what they need, and then increase or decrease the permissions as needed. However, if a built-in role already exists for what you want, there is no need to reinvent the wheel, so use it!

I will run through a few things to help you understand and build your own Custom Roles, primarily using PowerShell.

Install the Azure PowerShell Modules

As a pre-requisite for the following, you need to install the Azure (Az) PowerShell Module. You can skip this section if you already have the PowerShell modules installed.
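If you are not sure whether the module is already there, a quick check like the one below can tell you. This is only a sketch: it assumes that finding Az.Accounts (the part of Az that provides Connect-AzAccount) is a good enough sign that the Az module is installed.

```powershell
# Look for Az.Accounts in the module paths on this machine
$azAccounts = Get-Module -ListAvailable -Name Az.Accounts

if ($azAccounts) {
    # Show the newest installed version
    $azAccounts | Sort-Object Version -Descending | Select-Object -Property Name, Version -First 1
}
else {
    Write-Output 'Az.Accounts was not found; follow the installation steps in this section.'
}
```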

- Open Windows PowerShell

- Type in:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

Install-Module -Name Az -Scope CurrentUser -Repository PSGallery -Force

- If you have issues installing the Azure PowerShell module, see the Microsoft documentation directly: Install the Azure Az PowerShell module.

Once you have the Azure PowerShell module installed, you can connect to your Azure subscription using the little snippet below:

#Prompts for Azure credentials

Connect-AzAccount

#Opens a window allowing you to select which Azure Subscription to connect to

Get-AzSubscription | Out-GridView -Title 'Select Azure Subscription' -OutputMode Single | Set-AzContext

Export Built-in Azure Roles

One of the best ways to learn about how an Azure Role is put together is to look at the currently existing roles.

The following PowerShell command will list all current Azure roles:

Get-AzRoleDefinition

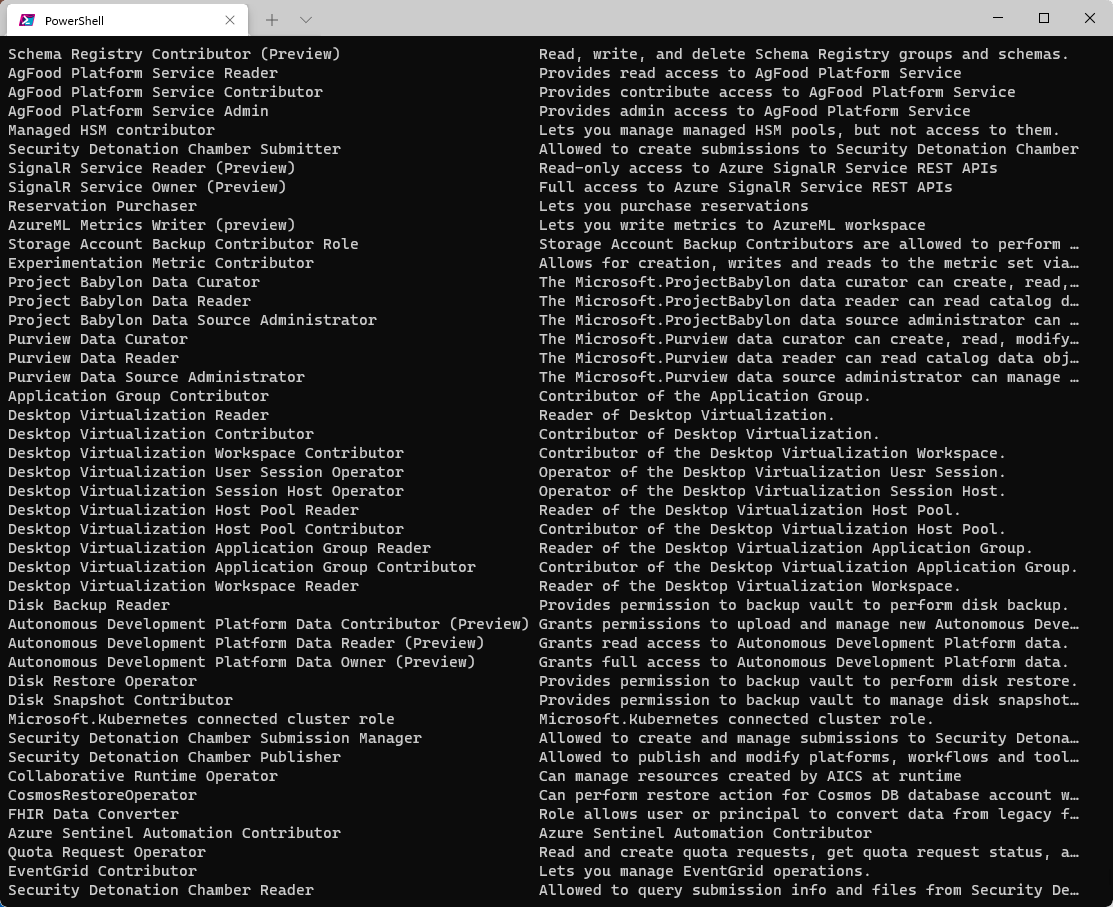

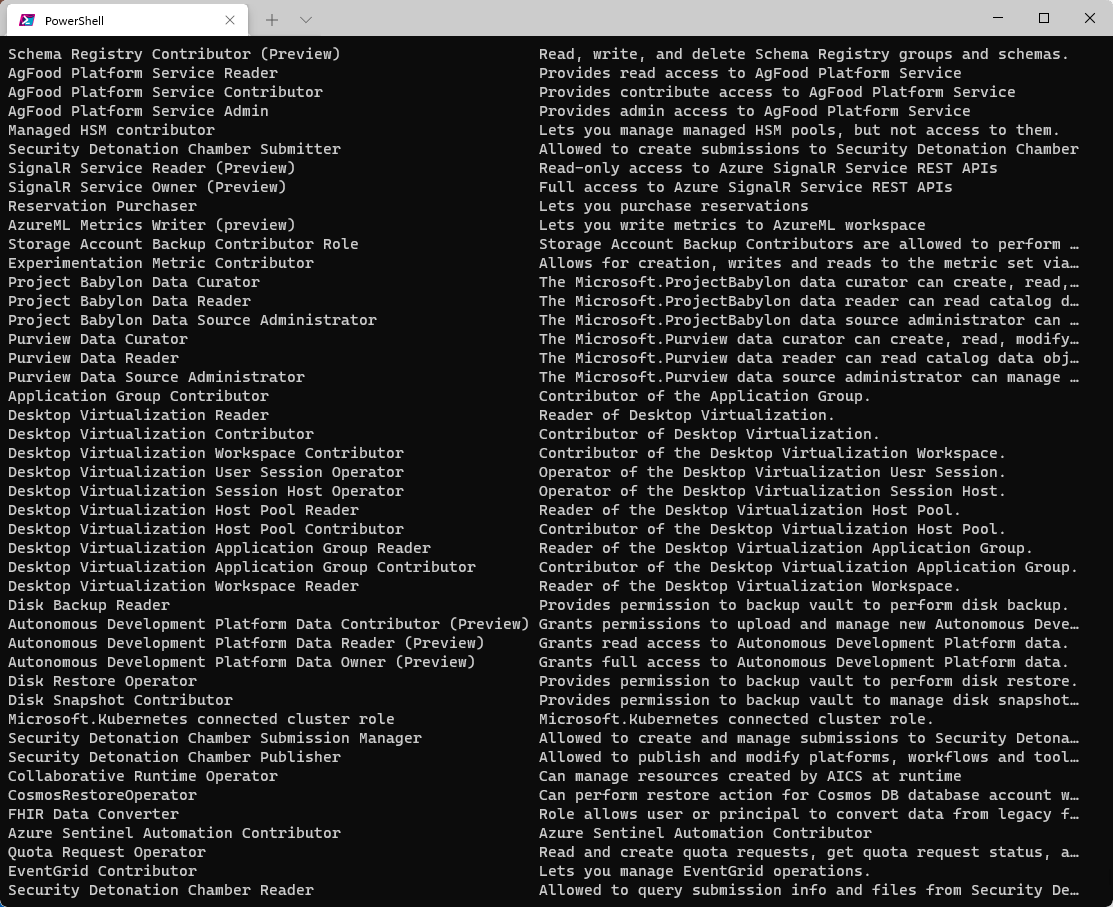

For a more human-readable view that lists the Built-in Azure roles and their descriptions, you can filter it by:

Get-AzRoleDefinition | Select-Object Name, Description

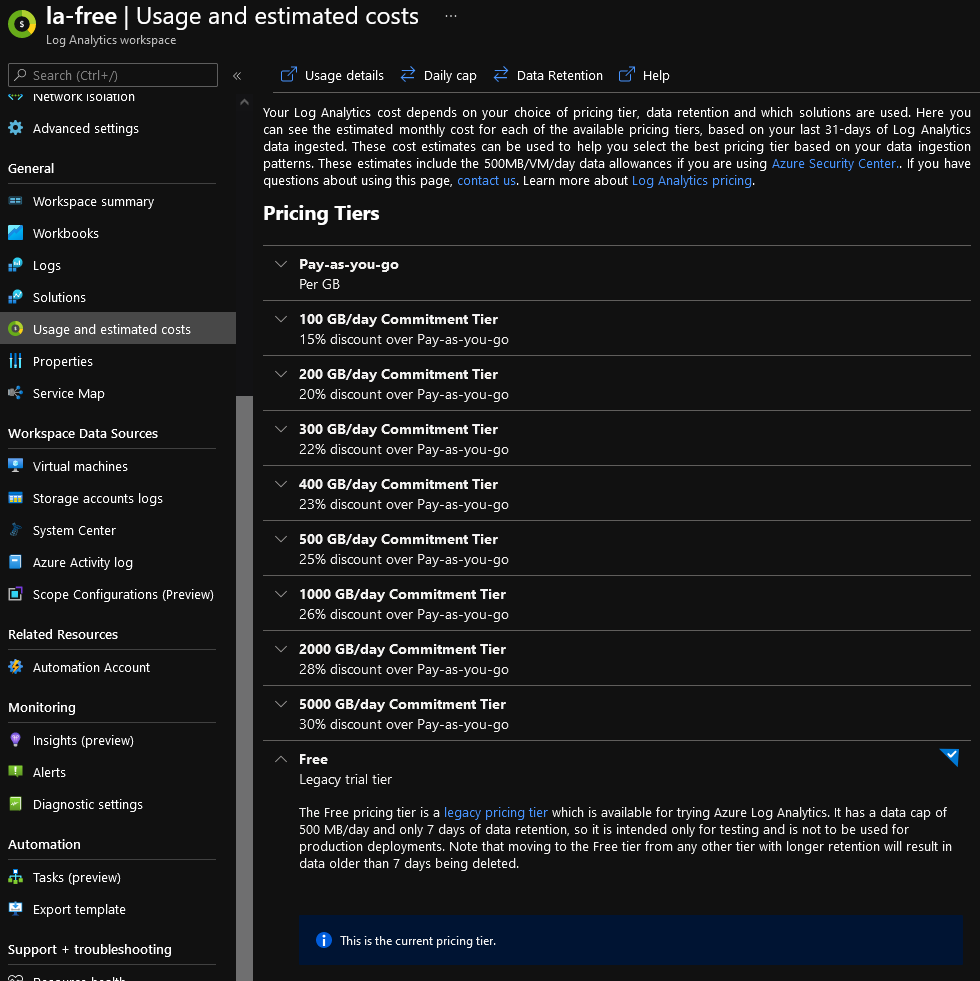

As you can see in the screenshot below, there are many different roles, from EventGrid Contributor to AgFood Platform Service and more! At the time of this article, there were 276 built-in roles.

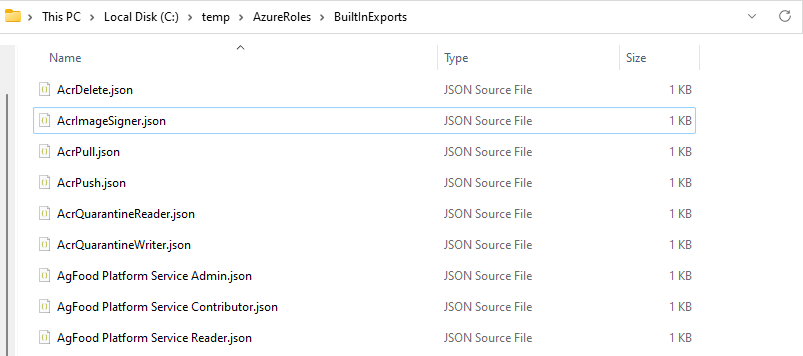

Now that we have pulled a list of the existing roles, we will export them as JSON files to take a proper look at them.

The PowerShell script below will create a few folders on your computer as a base to work from (feel free to change the folders to suit your folder structure or access rights).

- c:\Temp

- c:\Temp\AzureRoles

- c:\Temp\AzureRoles\BuiltInExports

- c:\Temp\AzureRoles\CustomRoles

Once the folders have been created, it will get the Azure Role definitions and export them as JSON into the BuiltInExports folder to be reviewed.

#Create the working folders (New-Item also creates any missing parent folders)

New-Item -ItemType Directory -Path c:\Temp\AzureRoles\BuiltInExports -Force

New-Item -ItemType Directory -Path c:\Temp\AzureRoles\CustomRoles -Force

#Get every role definition once, then write each one to its own JSON file

$roles = Get-AzRoleDefinition

Foreach ($role in $roles)

{

$name = $role.Name

#The path is quoted because role names contain spaces

$role | ConvertTo-Json | Out-File "c:\Temp\AzureRoles\BuiltInExports\$name.json"

}
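Role names become file names in the loop above, so a name containing a character that is not allowed in a file name would make that export fail. The helper below is a minimal sketch of one way to guard against it. ConvertTo-SafeFileName is a hypothetical name of my own, not an Az cmdlet, and whether any built-in role actually needs it is an assumption rather than something checked here.

```powershell
function ConvertTo-SafeFileName {
    param([Parameter(Mandatory)][string] $Name)

    # Characters the current operating system does not allow in file names
    $invalid = [System.IO.Path]::GetInvalidFileNameChars()

    # Swap each invalid character for an underscore and keep everything else
    $safe = foreach ($char in $Name.ToCharArray()) {
        if ($invalid -contains $char) { '_' } else { $char }
    }

    return (-join $safe)
}

# Hypothetical role name containing a slash
ConvertTo-SafeFileName -Name 'Example/Role Name'
```

Inside the export loop, you could then set $name with ConvertTo-SafeFileName -Name $role.Name before building the file path.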

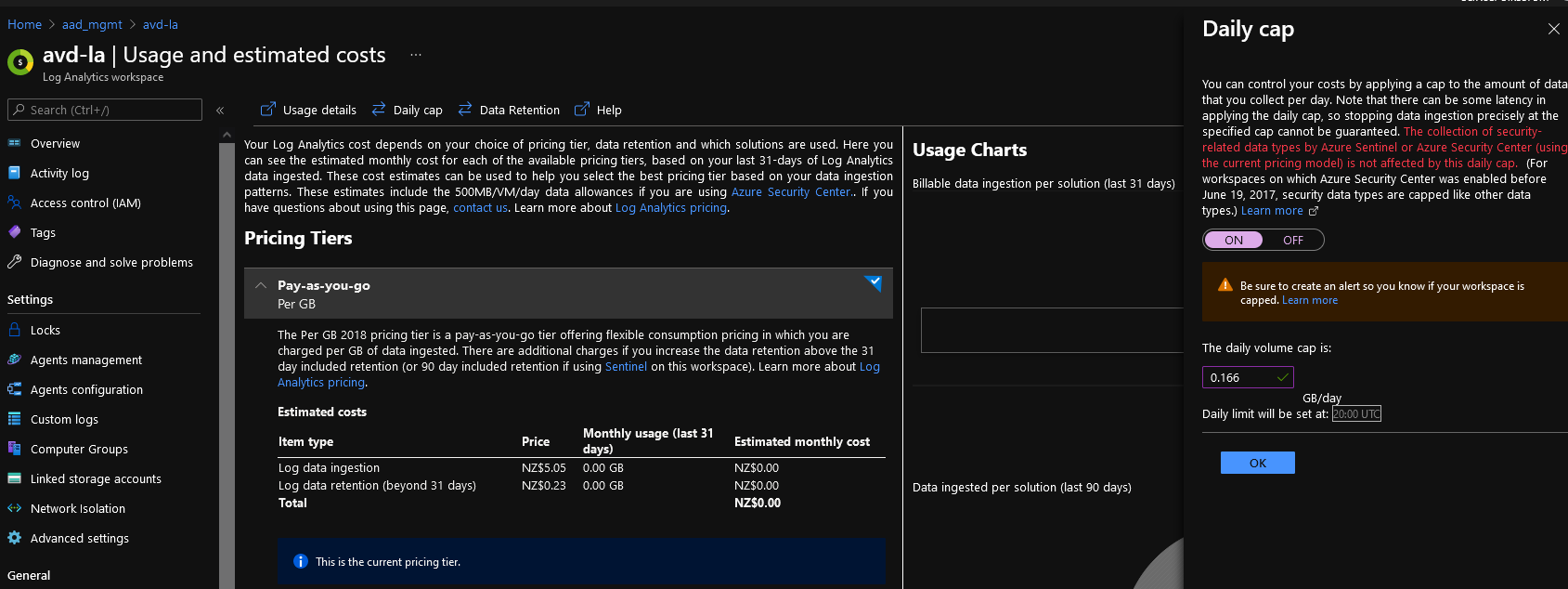

Once completed, you should now see the JSON files below:

Although you can use Notepad, I recommend using Visual Studio Code to read these files. This is because Visual Studio Code will help with the syntax as well.

Review Built-in Azure Roles

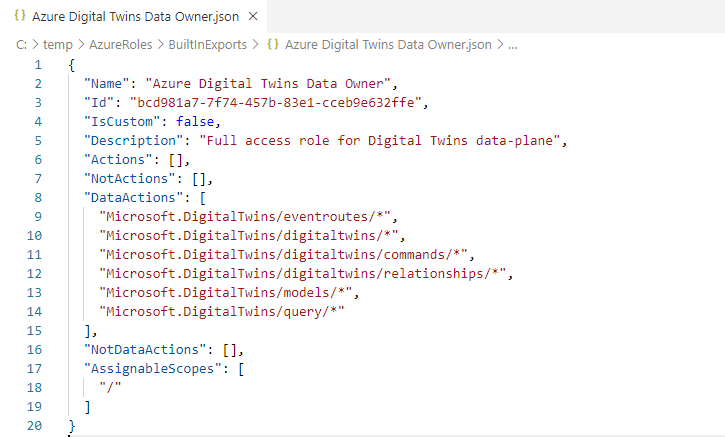

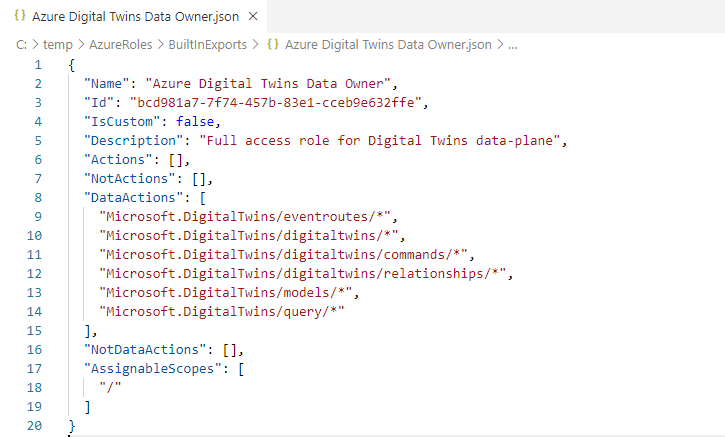

Open one of the roles. I will open the Azure Digital Twins Data Owner role; however, it doesn't matter which one you pick.

You should see the following fields:

- Name

- Id

- IsCustom

- Description

- Actions

- NotActions

- DataActions

- NotDataActions

- AssignableScopes

These fields make up your Role.

The Name field is pretty self-explanatory - this is the name of the Azure Role and what you see in the Azure Portal, under Access control (IAM).

The same is true for the Description field.

These are essential fields as they should tell the users what resource or resources the role is for and what type of access is granted.

The IsCustom field is used to determine if the Azure Role is a custom-made role or not; any user-created role will be set to True, while any built-in role will be False.
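As a small sketch of how IsCustom can be used in practice, the commands below list only the custom roles. They assume you are already connected with Connect-AzAccount, and they will return nothing if no custom roles exist yet.

```powershell
# List only the custom roles visible from the current context
Get-AzRoleDefinition -Custom | Select-Object Name, IsCustom, Description

# The same result, filtering on the IsCustom property instead
Get-AzRoleDefinition | Where-Object { $_.IsCustom } | Select-Object Name, IsCustom, Description
```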

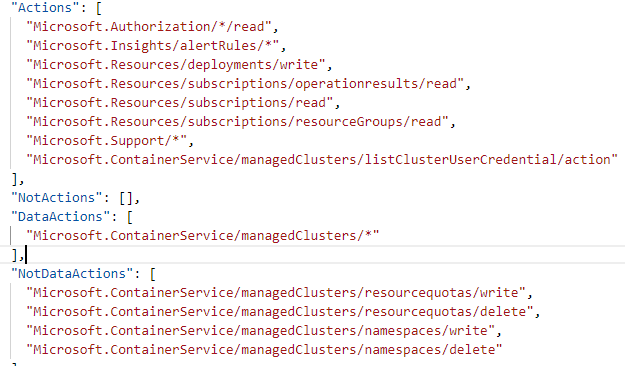

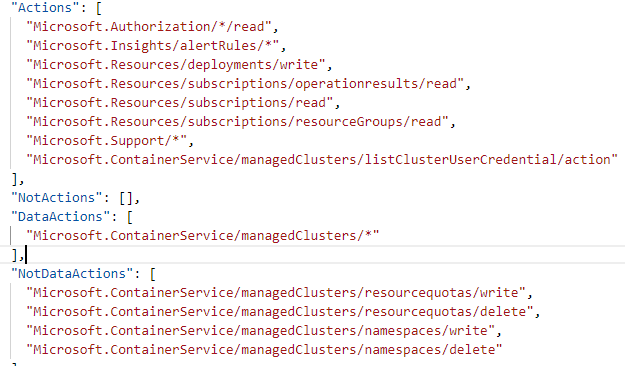

The Actions field is used to determine what management operations can be performed. The Azure Digital Twins role doesn't have any (as it is mainly Data Action based), so let's look at another role, the Azure Kubernetes Service RBAC Admin role:

- "Microsoft.Authorization/*/read",

- "Microsoft.Insights/alertRules/*",

- "Microsoft.Resources/deployments/write",

You can see that it has the rights to read authorization settings (such as roles and role assignments), manage (create, update and delete) alert rules, and create or update deployments.

The NotActions field is used to exclude anything from the allowed Actions.

The DataActions field allows you to determine what data operations can be performed. Usually, these are sub-resource tasks: management or higher-level operations go in the Actions field, while more specific operations on the data inside a resource go in the DataActions field.

The NotDataActions field is used to exclude anything from the allowed actions in the DataActions.
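To make the relationship between Actions and NotActions concrete, here is a rough sketch of the matching logic for a single role: an operation is allowed when it matches at least one Actions pattern and no NotActions pattern. Test-RoleAllows is a hypothetical helper written for illustration, not an Az cmdlet, and it only approximates what Azure does. In particular, NotActions is a subtraction within one role rather than a deny, so another assigned role can still grant the same operation.

```powershell
function Test-RoleAllows {
    param(
        [Parameter(Mandatory)][string] $Operation,
        [string[]] $Actions = @(),
        [string[]] $NotActions = @()
    )

    # -like treats * as a wildcard and ignores case
    $granted  = @($Actions    | Where-Object { $Operation -like $_ }).Count -gt 0
    $excluded = @($NotActions | Where-Object { $Operation -like $_ }).Count -gt 0

    return ($granted -and -not $excluded)
}

# Hypothetical role fragment: everything on virtual machines except delete
$actions    = @('Microsoft.Compute/virtualMachines/*')
$notActions = @('Microsoft.Compute/virtualMachines/delete')

Test-RoleAllows -Operation 'Microsoft.Compute/virtualMachines/start/action' -Actions $actions -NotActions $notActions   # True
Test-RoleAllows -Operation 'Microsoft.Compute/virtualMachines/delete' -Actions $actions -NotActions $notActions         # False
Test-RoleAllows -Operation 'Microsoft.Storage/storageAccounts/read' -Actions $actions -NotActions $notActions           # False
```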

To help get a feel of the differences with the Actions, here is a list of Actions and DataActions for the Azure Kubernetes Service RBAC Admin role:

And finally, the AssignableScopes field is used to specify where the role will be available for assignment, whether that is at a subscription, resource group or management group level. You will notice that most if not all built-in Azure Roles have an assignable scope of "/", which means they can be assigned everywhere (Subscriptions, Resource Groups, Management Groups etc.).
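For custom roles you list specific scopes instead; as far as I am aware, the root scope "/" is reserved for built-in roles. The sketch below shows what the three common scope strings look like. The subscription ID, resource group name and management group ID are placeholders, not real resources.

```powershell
# Placeholder values, for illustration only
$subscriptionId    = '00000000-0000-0000-0000-000000000000'
$resourceGroupName = 'example-rg'
$managementGroupId = 'example-mg'

$scopes = @(
    "/subscriptions/$subscriptionId"                                       # whole subscription
    "/subscriptions/$subscriptionId/resourceGroups/$resourceGroupName"     # one resource group
    "/providers/Microsoft.Management/managementGroups/$managementGroupId"  # a management group
)

$scopes
```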

Review Azure Provider Namespaces

You may have noticed that each Action has a provider. In the example of a Virtual Machine, the provider is Microsoft.Compute.

To get a list of all current Providers, run the following command:

Get-AzProviderOperation | Select-Object ProviderNamespace -Unique

At the time of writing, there are 198 current Providers! So that's 198 providers, or overall buckets of resources, that permissions can be granted over.

We can drill into a provider a bit further to check out current Operations:

Get-AzProviderOperation -Name Microsoft.Compute/*

This displays a list of all operations within the Microsoft.Compute namespace, covering resource types such as (but definitely not limited to):

- Virtual machines

- Virtual Machine Scale Sets

- Locations

- Disks

- Cloud Services

If we wanted to drill into the Virtual Machines operations a bit more, we could filter it like:

Get-AzProviderOperation -Name Microsoft.Compute/virtualMachines/*

Here we can finally see the available actions. For example, the following Action will allow you to read the VM sizes available to a Virtual Machine:

- Operation: Microsoft.Compute/virtualMachines/vmSizes/read

- OperationName: Lists Available Virtual Machine Sizes

- ProviderNamespace: Microsoft Compute

- ResourceName: Virtual Machine Size

- Description: Lists available sizes the virtual machine can be updated to

- IsDataAction: False
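The IsDataAction property is what tells you whether an operation belongs under Actions or DataActions in a role. As a sketch, the commands below split the operations of one provider on that property. Microsoft.Storage is just my choice of example provider, and the commands assume you are connected with Connect-AzAccount.

```powershell
# Get every operation under the Microsoft.Storage provider
$operations = Get-AzProviderOperation 'Microsoft.Storage/*'

# Operations that would go in the DataActions field of a role
$operations | Where-Object { $_.IsDataAction } | Select-Object Operation, Description

# Operations that would go in the Actions field of a role
$operations | Where-Object { -not $_.IsDataAction } | Select-Object Operation, Description
```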

You can use the PowerShell script below to export all the Providers and their Operations to a CSV for review:

#Get every operation from every resource provider

$operations = Get-AzProviderOperation

#Build one row per operation; collecting the loop output avoids rebuilding an array with +=

$results = ForEach ($operation in $operations) {

[pscustomobject]@{

'Provider NameSpace' = $operation.ProviderNamespace

Description = $operation.Description

'Operation Name' = $operation.OperationName

Operation = $operation.Operation

ResourceName = $operation.ResourceName

}

}

$results | Export-Csv c:\temp\AzureRBACPermissions.csv -NoTypeInformation

Using the namespace, providers and actions, you should now be able to see the power behind Role-based access control and how granular you can get.

Add a Custom Role using PowerShell

Now that we understand how to navigate the Namespaces and Built-In Roles available in Microsoft Azure using PowerShell, we will create a custom role.

I have created a base template to help you start.

This base template has the following fields that most custom roles will use:

- Name

- IsCustom

- Description

- Actions

- AssignableScopes (make sure you put in the ID of the Azure subscription you are assigning the role to)

Edit these fields (apart from IsCustom, which you should leave as True) as you need.

CustomRoleTemplate.json

{

"properties": {

"roleName": "Custom Role - Template",

"IsCustom": true,

"description": "This is a Template for creating Custom Roles.",

"assignableScopes": [

"/subscriptions/<SubscriptionID>"

],

"permissions": [

{

"actions": [

"Microsoft.Support/register/action",

"Microsoft.Support/checkNameAvailability/action",

"Microsoft.Support/operationresults/read",

"Microsoft.Support/operationsstatus/read",

"Microsoft.Support/operations/read",

"Microsoft.Support/services/read",

"Microsoft.Support/services/problemClassifications/read",

"Microsoft.Support/supportTickets/read",

"Microsoft.Support/supportTickets/write",

"Microsoft.Resources/subscriptions/resourceGroups/read",

"Microsoft.Resources/subscriptions/resourcegroups/resources/read"

],

"notActions": [],

"dataActions": [],

"notDataActions": []

}

]

}

}

This Custom Role - Template allows you to read the name of all Resource Groups in a subscription and open a Microsoft Support case.
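Before uploading a role file, it can be worth running a quick sanity check over it. The function below is a minimal sketch of that idea for the "properties" layout used in the template; Test-CustomRoleJson is a hypothetical helper of my own, and the checks it makes are my assumptions about what is worth catching, not an official validation.

```powershell
function Test-CustomRoleJson {
    param([Parameter(Mandatory)][string] $Json)

    # ConvertFrom-Json raises an error if the text is not valid JSON
    $role = $Json | ConvertFrom-Json

    if (-not $role.properties.roleName) {
        'roleName is missing or empty'
    }

    foreach ($scope in $role.properties.assignableScopes) {
        # Angle brackets suggest a placeholder such as <SubscriptionID> was left in
        if ($scope -like '*<*>*') { "Placeholder left in scope: $scope" }
    }

    if (-not $role.properties.permissions.actions) {
        'No actions are listed'
    }
}

# A cut-down copy of the template, used here only as sample input
$sample = @'
{
  "properties": {
    "roleName": "Custom Role - Template",
    "assignableScopes": [ "/subscriptions/<SubscriptionID>" ],
    "permissions": [ { "actions": [ "Microsoft.Support/supportTickets/read" ] } ]
  }
}
'@

Test-CustomRoleJson -Json $sample
```

To check a file on disk instead, you could pass it in with Test-CustomRoleJson -Json (Get-Content -Path 'path to your file' -Raw). No output means none of these checks found a problem.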

In my example, I am going to add a new role called:

- LukeGeek-WebApp Deployment-RW

This role will allow users to Deploy and modify Azure WebApps, among other things!

LukeGeek-WebApp Deployment-RW.json

{

"properties": {

"roleName": "LukeGeek-WebApp Deployment-RW",

"description": "This is a Template for creating Custom Roles.",

"IsCustom": true,

"assignableScopes": [

"/subscriptions/<SubscriptionID>"

],

"permissions": [

{

"actions": [

"Microsoft.Support/register/action",

"Microsoft.Support/checkNameAvailability/action",

"Microsoft.Support/operationresults/read",

"Microsoft.Support/operationsstatus/read",

"Microsoft.Support/operations/read",

"Microsoft.Support/services/read",

"Microsoft.Support/services/problemClassifications/read",

"Microsoft.Support/supportTickets/read",

"Microsoft.Support/supportTickets/write",

"Microsoft.Resources/subscriptions/resourceGroups/read",

"Microsoft.Resources/subscriptions/resourcegroups/resources/read"

],

"notActions": [],

"dataActions": [],

"notDataActions": []

}

]

}

}

To add the Custom Role to Azure, I will run the following PowerShell command:

New-AzRoleDefinition -InputFile "C:\temp\AzureRoles\CustomRoles\LukeGeek-WebApp Deployment-RW.json" -Verbose

Your new Custom Role has now been uploaded to Azure and can be selected for an assignment.
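If you would rather not maintain a JSON file, another route is to start from an existing role object, change it in memory and pass it to New-AzRoleDefinition with -Role. The sketch below follows that pattern. The role name, description and single action are examples I picked for illustration, and it assumes you are connected and have permission to create role definitions in the current subscription.

```powershell
# Start from a built-in role to get a correctly shaped object
$role = Get-AzRoleDefinition -Name 'Reader'

# Clear the Id so Azure treats this as a new definition
$role.Id = $null
$role.IsCustom = $true
$role.Name = 'Example - Resource Group Reader'              # hypothetical role name
$role.Description = 'Can read the list of Resource Groups.'  # hypothetical description

# Replace the inherited permissions with just the ones you want
$role.Actions.Clear()
$role.Actions.Add('Microsoft.Resources/subscriptions/resourceGroups/read')

# Limit where the role can be assigned to the current subscription
$subscriptionId = (Get-AzContext).Subscription.Id
$role.AssignableScopes.Clear()
$role.AssignableScopes.Add("/subscriptions/$subscriptionId")

New-AzRoleDefinition -Role $role
```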

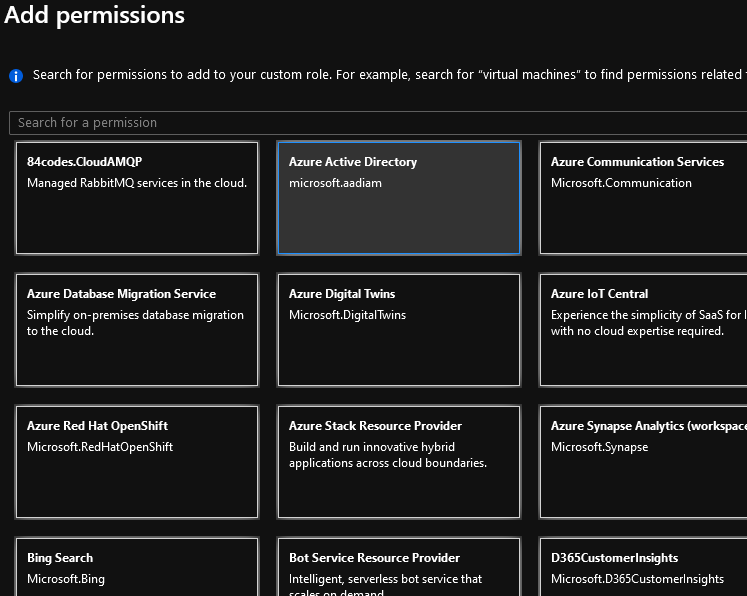

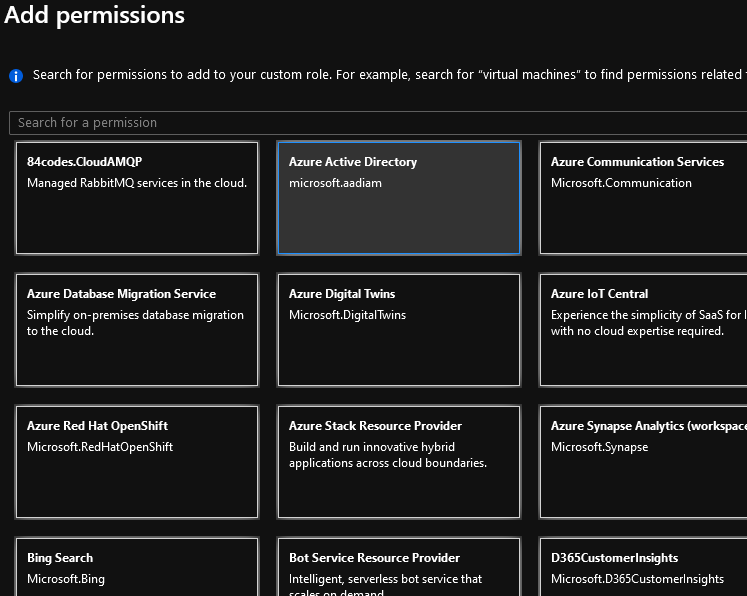

Add a Custom Role using the Azure Portal

Now that we have investigated the Azure roles and their providers and actions, there is an alternative to looking through and creating them manually with PowerShell: you can use the Azure Portal!

Gasp! Why didn't you tell me earlier about this, Luke?

Well, fellow Azure administrator, I found it easier to look at PowerShell and JSON to explain how the Custom Roles were made, vs staring at the Azure Portal, and, to be honest, really just because! Like most things in IT, there are multiple ways something can be done!

- Log in to the Azure Portal

- Navigate to your Subscription

- Click on Access Control (IAM) on the left-hand side blade

- Click on Add

- Click on Add Custom Role

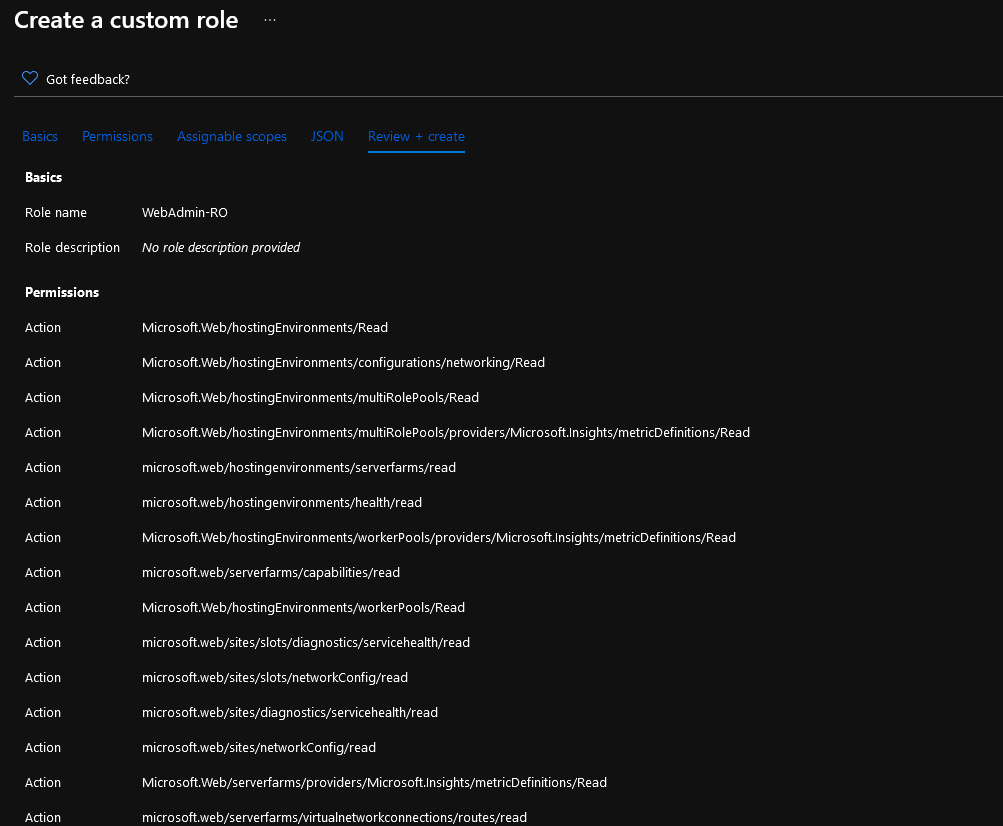

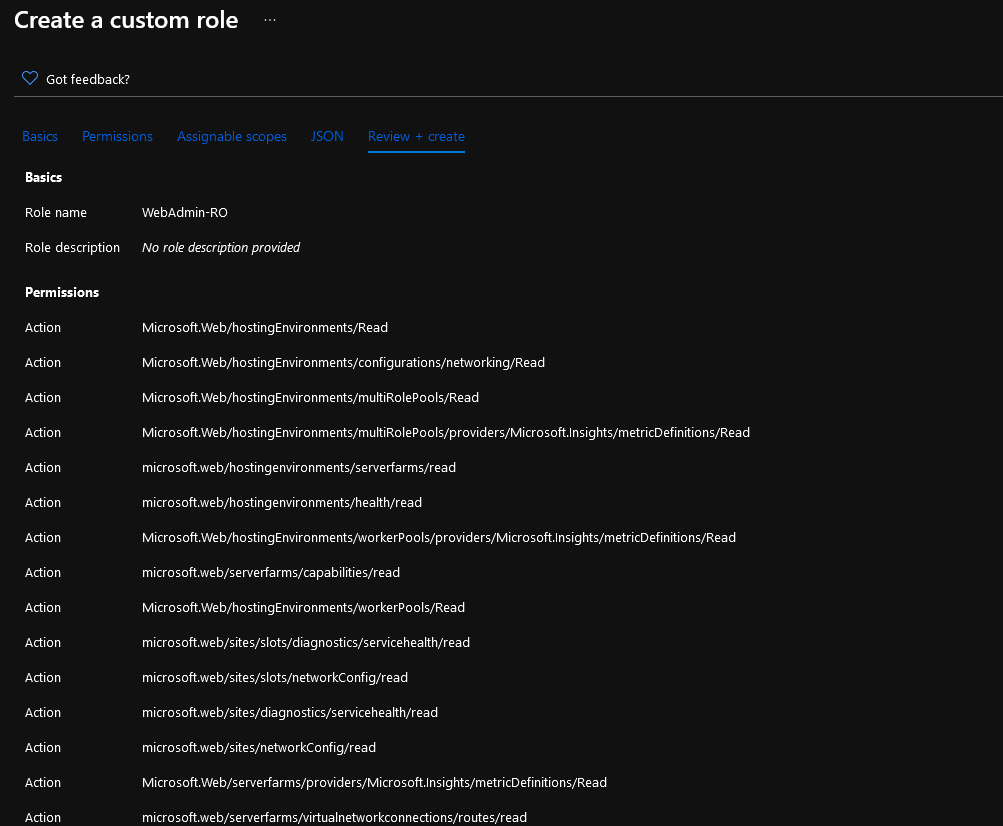

- Type in the Role Name, for example, WebAdmin-RO

- Type in a clear description so that you can remember what this role is used for in a year!

- For Baseline permissions, select: Start from Scratch

- Click Next

- Click Add Permissions

- If you want, you can select: Download all permissions to review the providers and actions (very similar to the Get-AzProviderOperation PowerShell command).

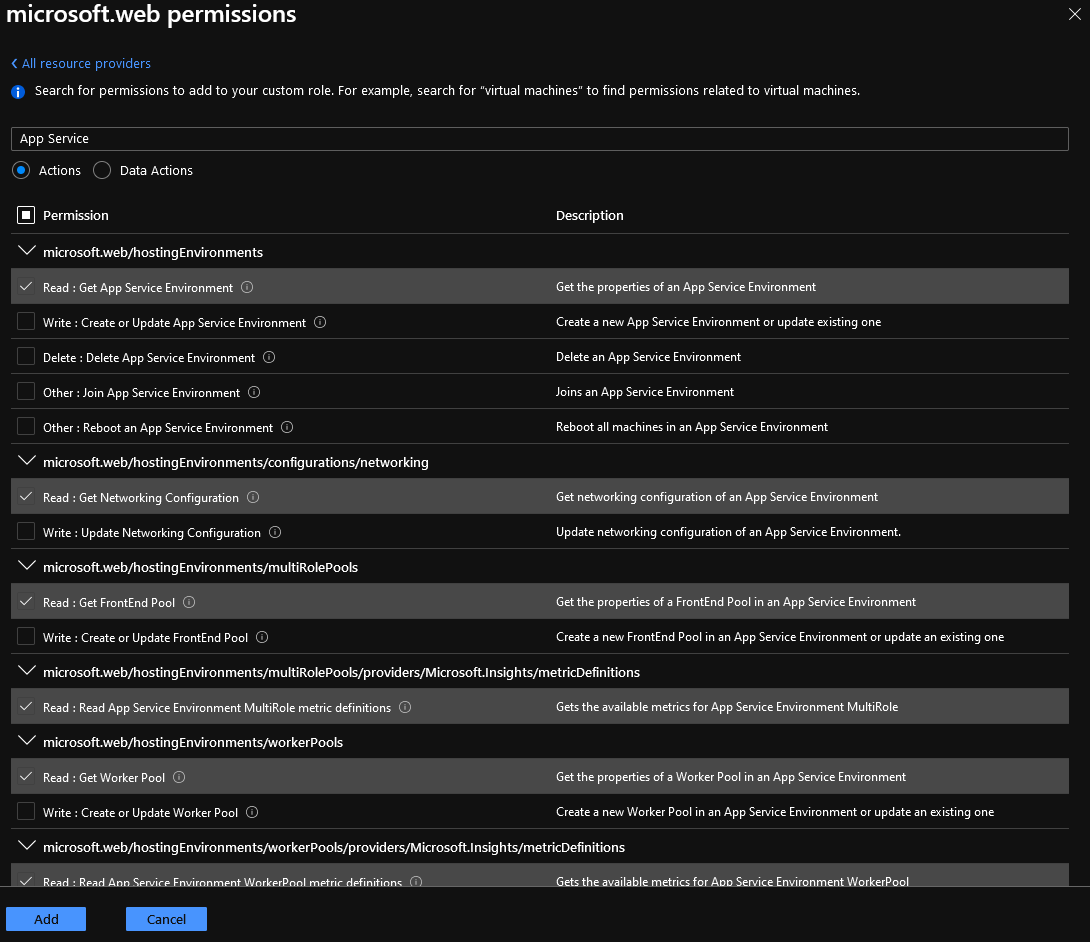

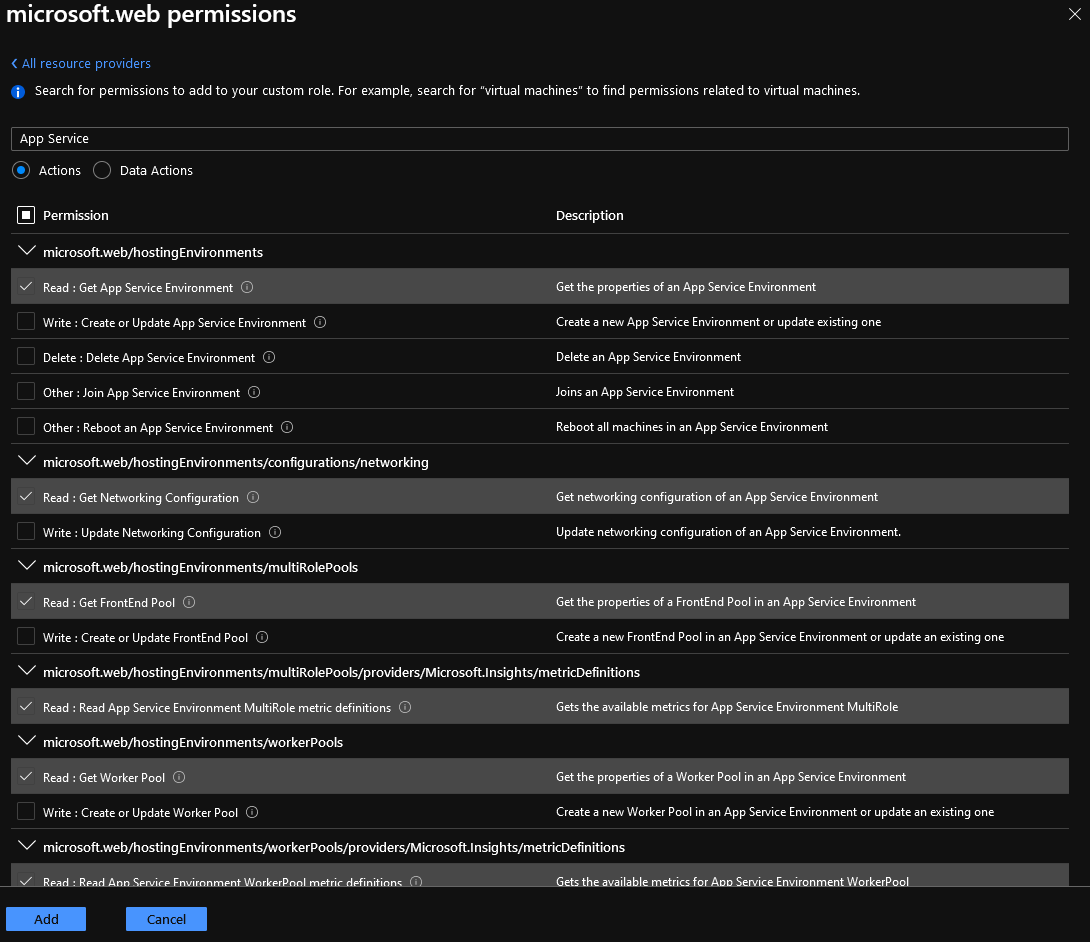

- As you should see, all the Namespace providers are listed with the Actions/Permissions that you can do.

- In my example, I am going to search for Microsoft Web Apps

- Select all 'Read' operations (remember to look at Data Actions as well, there may be resource level actions you might want to allow or exclude)

- Click Add

- Review the permissions and click Next

- Select your assignable scope (where the Role will be allowed so that you can assign it)

- Click Next

- You can review and download the JSON for backup later (this is handy if you are going to Automate the creation of roles in the future and want a base to start from)

- Click Next

- Click Create to create your Custom Role!

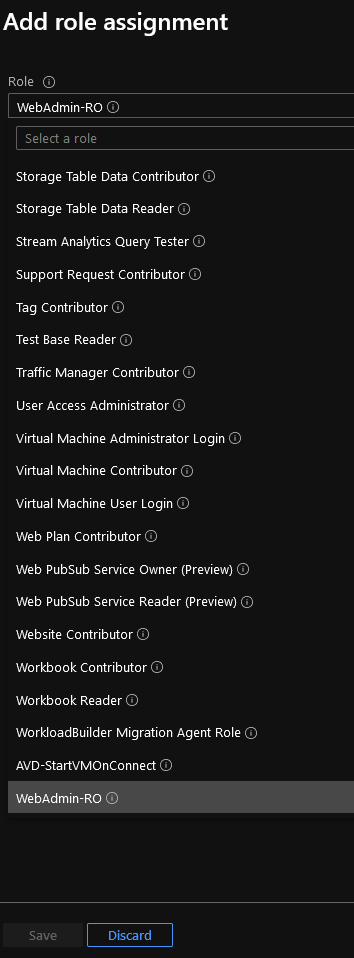

Assign a Custom Role using the Azure Portal

Now that you have created your Custom Role, it is time to assign it, so it is actually in use.

- Log in to the Azure Portal

- Navigate to your Subscription or Resource Group you want to delegate this role to

- Click on Access Control (IAM)

- Click Add

- Click on Role Assignment

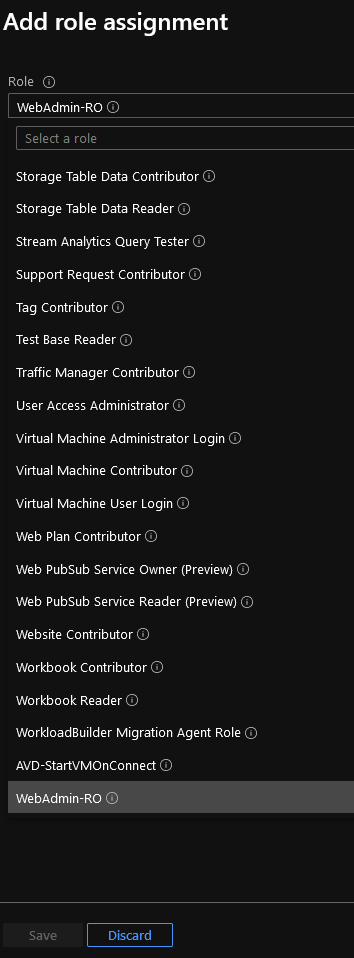

- Under the 'Role' dropdown, select your Custom Role.

- Now you can select the Azure AD Group/User or Service Principal you want to assign the role to and click Save.

- Congratulations, you have now assigned your Custom role!

Assign a Custom Role using PowerShell

You can assign Custom Roles using PowerShell. To do this, you need a few things, such as the Object ID and the Assignable Scope IDs. Instead of rehashing it, this Microsoft article does an excellent job of running through the process.
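For a rough idea of what that looks like, here is a minimal sketch of assigning a custom role to an Azure AD group at Resource Group scope. The group name and resource group name are placeholders I made up, and the sketch assumes the group's object ID is exposed as the Id property, which can differ between Az module versions.

```powershell
# Placeholder names, replace with your own
$groupName         = 'Example-WebApp-Support'
$resourceGroupName = 'example-rg'
$roleName          = 'LukeGeek-WebApp Deployment-RW'

# Look up the object ID of the group that should receive the role
$group = Get-AzADGroup -DisplayName $groupName

# Build the scope for a single Resource Group in the current subscription
$subscriptionId = (Get-AzContext).Subscription.Id
$scope = "/subscriptions/$subscriptionId/resourceGroups/$resourceGroupName"

New-AzRoleAssignment -ObjectId $group.Id -RoleDefinitionName $roleName -Scope $scope
```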

2. Press 'Y' to accept PSGallery as a trusted repository. Just a note: you can prevent the confirmation prompt when installing modules from the PSGallery by classifying it as a 'Trusted Repository' by running the following. Just be wary that you won't get rechallenged:
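The command itself is missing from the note above. A likely candidate, offered as an assumption rather than the exact snippet originally intended, is Set-PSRepository from the PowerShellGet module:

```powershell
# Mark the PowerShell Gallery as a trusted repository so Install-Module stops prompting
Set-PSRepository -Name 'PSGallery' -InstallationPolicy Trusted

# Confirm the change
Get-PSRepository -Name 'PSGallery' | Select-Object Name, InstallationPolicy
```

Running the same command with -InstallationPolicy Untrusted puts the prompt back.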