Common Challenges and Solutions for Azure OpenAI Adoption

When considering Azure OpenAI adoption, there are some common challenges that you might face. These include:

- Protecting confidential information

- End-to-end observability

- Disabling inferencing via Azure AI Studio

- Protecting against OWASP's Top 10 threats

So, let's take a look at each of these challenges and how you can overcome them.

📄 Overview

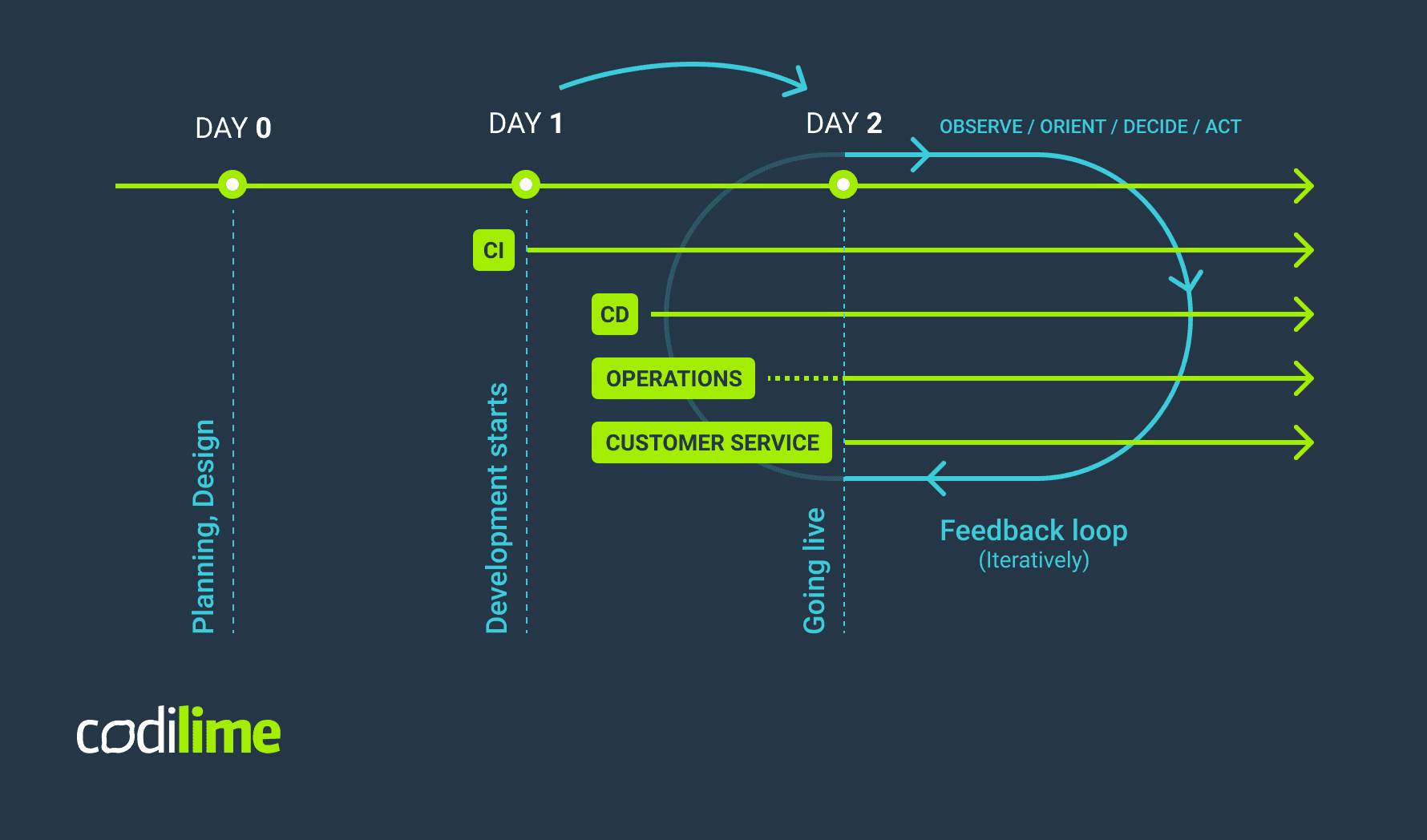

When considering Azure OpenAI adoption, there are some common challenges or considerations you might face, especially when looking at your solution's Day 1 and Day 2 lifecycles.

The software life cycle is typically broken down into the following stages:

- Day 0 - Design and build stage

- Day 1 - Infrastructure and code deployment stage

- Day 2 - Runtime

Today, we will look at common challenges and how we can overcome, work around, or mitigate them.

🛬 Landing Zone

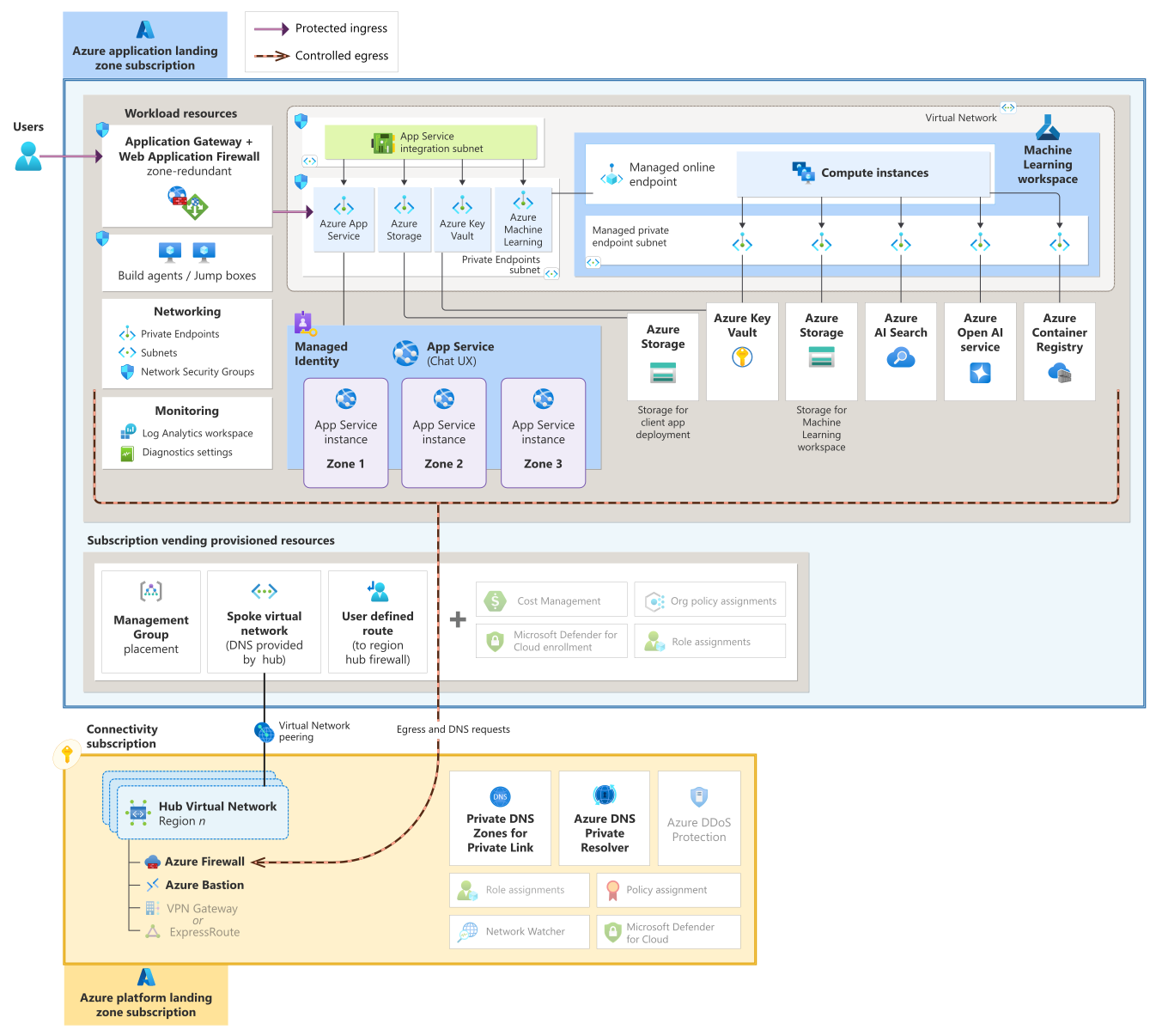

When considering Azure OpenAI adoption, having a well-defined landing zone for your platform architecture and application is important.

This is where you will deploy your AI solution.

An Azure landing zone is an environment that follows key design principles across eight design areas. These design principles accommodate all application portfolios and enable application migration, modernization, and innovation at scale. An Azure landing zone uses subscriptions to isolate and scale application resources and platform resources. Subscriptions for application resources are called application landing zones, and subscriptions for platform resources are called platform landing zones.

Ask:

Establish an Azure Landing Zone to place the Azure OpenAI solution into.

Challenge:

- Ensuring a well-architected foundation

- Need for compliance and security guardrails

| Why | Risk / Impact | Solution |
|---|---|---|
| Solid foundation for cloud adoption | Risk – High; Impact – High | - Implement Azure Landing Zone - Include Identity, Security, and Network configurations - Address compliance and governance from the start - Provides a scalable and secure environment |

Rationale:

Implementing an Azure Landing Zone ensures a secure and compliant foundation for deploying Azure OpenAI solutions. Providing necessary guardrails and governance helps mitigate the high risks and impacts of cloud adoption.

Action:

Implement an Azure Landing Zone for your Azure OpenAI solution. Include Identity, Security, and Network configurations. Address compliance and governance from the start. For Platform Landing Zone recommendations, refer to the Cloud Adoption Framework - Ready.

However, for more specific Azure OpenAI application landing zone considerations, we can view the chat baseline architecture at the Azure Architecture Center for reference.

Azure Landing Zones consist of more than just a place to store your resources; they should also include considerations for people/processes and products to run your applications at scale. I highly recommend going through the Azure Landing Zone Review to ensure you have a solid foundation for your Azure OpenAI solution, and Azure workloads in general.

🔒 Protect confidential information

When considering Azure OpenAI adoption, confidential information, including data, code, and other sensitive information, must be protected.

It is important to ensure that your data is protected, especially when you use the RAG (Retrieval Augmented Generation) pattern, which allows you to use your own data.

Ask:

Establish an Azure Landing Zone to place Azure OpenAI solution into

Challenge:

- Ensuring a well-architected foundation

- Need for compliance and security guardrails

| Why | Risk / Impact | Solution |
|---|---|---|
| - Solid foundation for cloud adoption - Critical for enterprise-grade workloads - Ensures compliance and governance | Risk – High; Impact – High | - Implement Azure Landing Zone - Include Identity, Security, and Network configurations - Address compliance and governance from the start - Provides a scalable and secure environment |

Rationale:

Starting with public and internal data reduces the impact from high to low. With lower overall risk, an organization can adopt faster initially while it puts additional guardrails for governance and compliance (audit, monitoring, and enterprise architecture) in place.

Action:

Use platform and resource capabilities to lock down access to confidential information. For example, use Azure Key Vault to store and manage secrets, and use Azure Policy to enforce compliance.

- Deploy your Azure OpenAI solution with Infrastructure as Code (IaC), or deploy the services manually, so that you control exactly what is created and can keep it secure and compliant. Guided or automated setup experiences may create the resources you need (and sometimes more than you need), such as Azure AI Studio with public endpoints.

- Make use of Role-based access control (RBAC) to ensure that only authorized users have access to your Azure OpenAI solution.

- Disable access keys for Storage accounts, and make use of Managed Identities for inter-Azure resource permissions.

- Determine if the risk is high enough to manage the keys yourself using Customer Managed Keys for your Storage account.

- Make use of Private Endpoints and disable access to the public internet where possible, and monitor private endpoint (East/West) traffic, using a Network Virtual Appliance, such as Azure Firewall.

- Make use of built-in Azure policies to enforce compliance, such as Storage account public access should be disallowed.

- Make sure your data lifecycle is managed correctly: do not store data longer than you need to, and do not store data that you do not need. Lifecycle management policies can assist with the technical aspects of this, and the Cloud Adoption Framework has guidance for data governance.

🔍 End-to-end observability

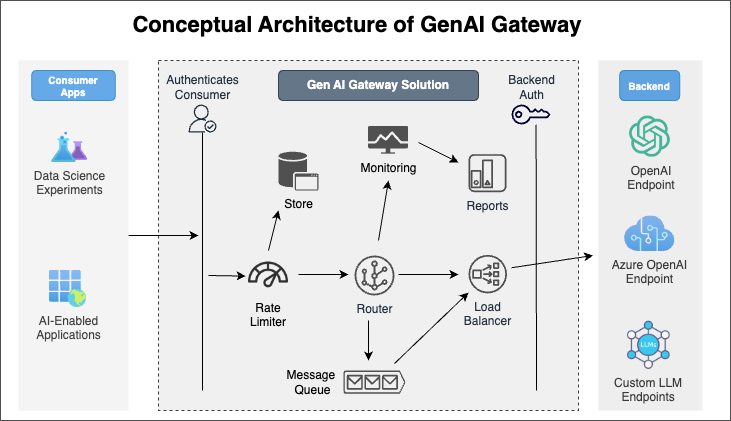

When considering Azure OpenAI adoption, it is important to have end-to-end observability. This includes monitoring, logging, and tracing.

Observability for Azure OpenAI has come a long way, with greater capabilities being integrated into products such as Azure API Management, whose features for this are commonly referred to as an AI Gateway.

I wrote a blog article a few months ago on implementing and testing some of the AI Gateway capabilities: Implementing AI Gateway capabilities in API Management. Check it out for a bit more depth. Also take a look at implementing a correlation ID for API Management requests, which helps track client transactions with Azure Application Insights.

Ask:

All interactions by data scientists, including prompts & responses, must be logged.

Challenge:

Azure OpenAI only logs the consumer's user ID but does not log prompts sent or responses received in the Azure Diagnostics table (or anywhere else).

| Why | Risk / Impact | Solution |
|---|---|---|
| Lines of business that own the data need to ensure there was no misuse of confidential data | Risk – High; Impact – High | - A complete audit trail must be established to create a fully governed environment - Custom applications that call AOAI should log prompts and responses from their side - Use Azure API Management as a middle layer between published services and consuming applications and audiences |

Rationale:

Azure OpenAI has minimal logging capabilities of its own and relies on other products to fully capture prompts and inferences for audit and governance purposes.

Action:

- Use Azure API Management to capture all interactions between your Azure OpenAI solution and your consumers.

- Use Azure Application Insights to capture all interactions between your Azure OpenAI solution and your consumers. Application Insights works well with API Management.

- Make use of reference architectures, such as Implement logging and monitoring for Azure OpenAI models, for guidance on how to implement this. They also show which metrics and logs Azure OpenAI provides by default, and how to add the API Management resource into the solution.

- Make use of the AI-Gateway labs to help add additional capabilities to your Azure API Management instance, tailored for Azure OpenAI endpoints.

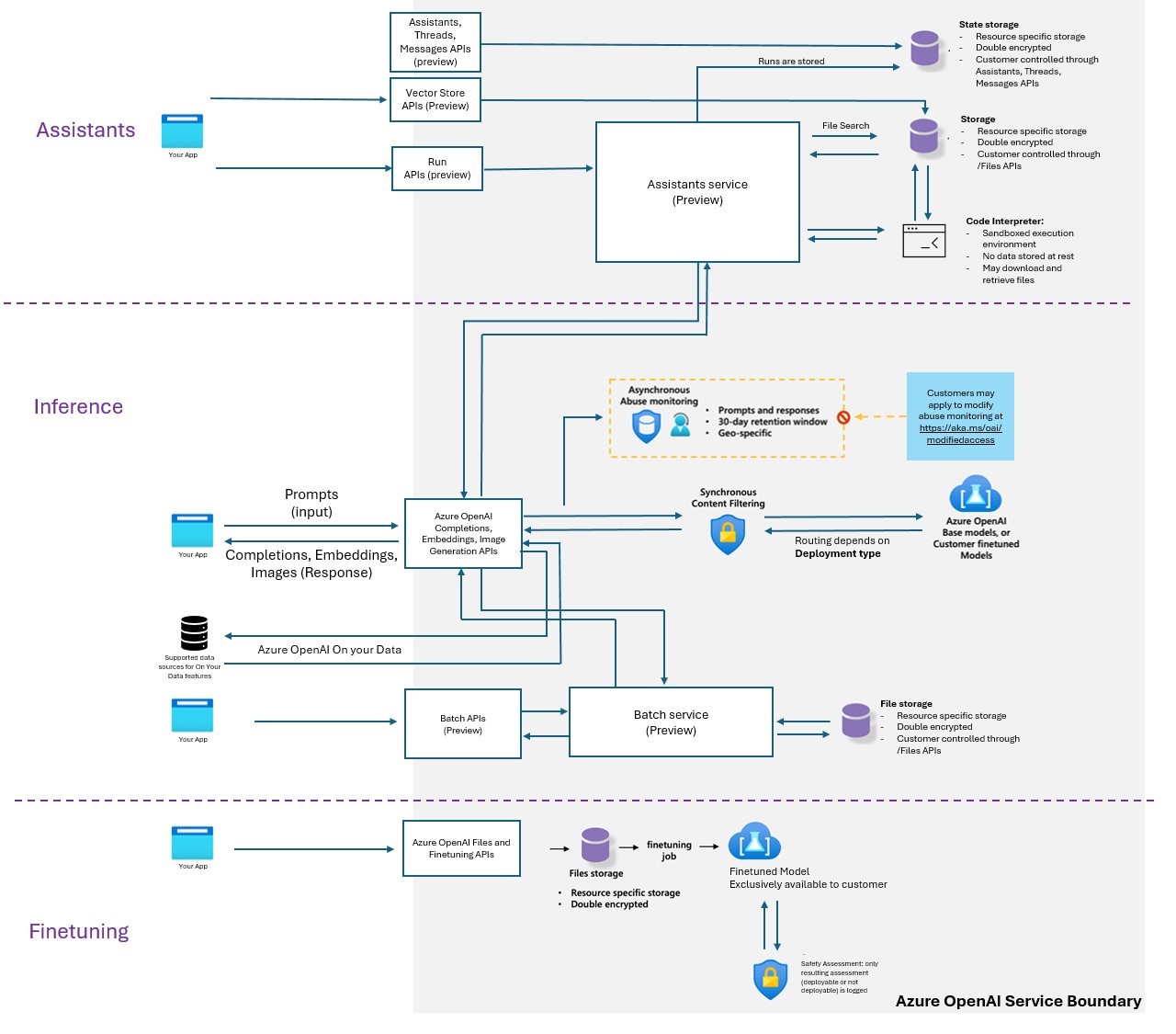
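The idea that custom applications should log prompts and responses from their side can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: `call_with_audit`, the `aoai.audit` logger name, and the record fields are hypothetical names chosen for this example, and `send` stands in for whatever function your application uses to call Azure OpenAI (directly or through API Management). It assumes the response follows the chat completions shape with `choices` and `usage`.

```python
import json
import logging
import time
import uuid
from typing import Callable

# Hypothetical logger name; route it to Application Insights or another sink as needed.
audit_logger = logging.getLogger("aoai.audit")


def call_with_audit(send: Callable[[dict], dict], payload: dict, user_id: str) -> dict:
    """Call Azure OpenAI through `send` and write one audit record per request."""
    correlation_id = str(uuid.uuid4())  # lets you join this record with gateway logs
    started = time.monotonic()
    response = send(payload)
    record = {
        "correlation_id": correlation_id,
        "user_id": user_id,
        "duration_ms": round((time.monotonic() - started) * 1000),
        "prompt": payload.get("messages", []),
        "response": [c.get("message", {}) for c in response.get("choices", [])],
        "usage": response.get("usage", {}),
    }
    # One JSON document per line keeps the trail easy to query later.
    audit_logger.info(json.dumps(record))
    return response
```

Because the audit record contains the full prompt and response, the log destination needs the same protection as the data itself.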

🚫 Microsoft to not monitor customer data

Prompts and responses are stored by Microsoft by default for abuse monitoring (not accessible to customers). You may not want Microsoft to have access to this data, including Microsoft support.

Your prompts (inputs) and completions (outputs), your embeddings, and your training data:

- Are NOT available to other customers.

- Are NOT available to OpenAI.

- Are NOT used to improve OpenAI models.

- Are NOT used to train, retrain, or improve Azure OpenAI Service foundation models.

- Are NOT used to improve any Microsoft or 3rd party products or services without your permission or instruction.

Your fine-tuned Azure OpenAI models are available exclusively for your use.

Microsoft operates the Azure OpenAI Service as an Azure service. Microsoft hosts the OpenAI models in its Azure environment, and the Service does NOT interact with any services operated by OpenAI (e.g., ChatGPT or the OpenAI API).

Reference: Data, privacy, and security for Azure OpenAI Service

Ask:

Confidential data should never be stored anywhere by Microsoft or viewed by any Microsoft support or product team member.

Challenge:

Microsoft logs all prompts for internal abuse monitoring for 30 days and then deletes them.

| Why | Risk / Impact | Solution |
|---|---|---|
| Some clients do not want any confidential data in prompts to be viewed by Microsoft support team | Risk / Impact: High | Managed customers can apply for modified access, which stops all prompt logging |

Rationale:

Microsoft’s managed customers are typically large organizations that trust Microsoft and are deemed responsible for their actions.

Action:

- Managed customers may apply for modified access, which stops all prompt logging.

- Make use of Security filters in Azure AI Search, for limiting the data that is returned to the user.

Managed partners and customers are customers whose subscriptions are managed by the partner network or customers who are part of the Microsoft Enterprise Agreement program. Managed customers can apply for modified access, which stops all prompt logging. This is a contractual agreement between Microsoft and the customer, and the customer must apply for this access.

Also, make sure you talk to your account team and understand the implications of this, as it may affect the way you have architected the solution, the safeguards you may have to add, and your expectations with Microsoft support regarding any data that they may have.

"Disabling content filtering could fail to block content that violates the Microsoft Generative AI Services Code of Conduct. My organization will implement systems and measures to ensure that the organization’s use of Azure OpenAI complies with the Microsoft Generative AI Services Code of Conduct".

Make sure you take a look at the Use Risks & Safety monitoring in Azure OpenAI Studio functionality, to review any results of the filtering activity. You can use that information to further adjust your filter configuration to serve your specific business needs and Responsible AI principles.
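The security filters in Azure AI Search mentioned in the actions above work by attaching an OData filter to each query so that only documents tagged with one of the caller's groups are returned. A minimal sketch of building such a filter is shown here. It assumes your index has a filterable collection field holding group identifiers; the field name `group_ids` and the helper `build_group_filter` are hypothetical, and the strict ID validation is a choice made for this example.

```python
import re

# Only allow simple identifiers (e.g. GUIDs) so values cannot break out of the filter string.
_SAFE_ID = re.compile(r"^[A-Za-z0-9_-]+$")


def build_group_filter(group_ids: list[str], field: str = "group_ids") -> str:
    """Build an OData filter matching documents tagged with any of the caller's groups."""
    if not group_ids:
        # Fail closed: a caller without groups must not run an unfiltered search.
        raise ValueError("caller has no groups; refusing to build an open filter")
    for group_id in group_ids:
        if not _SAFE_ID.match(group_id):
            raise ValueError(f"unexpected characters in group id: {group_id!r}")
    joined = ",".join(group_ids)
    return f"{field}/any(g:search.in(g, '{joined}'))"
```

The resulting string would be passed as the filter of the search request, so the trimming happens in the search service rather than in the application after the results come back.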

⚙️ Disable inferencing via Azure AI Studio

When considering Azure OpenAI adoption, it is important to consider whether you want users to be able to run inference using Azure AI Studio. This can be a security risk, as it allows users to run inference directly from the Azure Portal, bypassing some traceability mechanisms (such as Azure API Management) that you may have in place.

Ask:

Azure OpenAI Studio should not be available to make any inferencing calls and must be disabled.

Challenge: There is no permission or RBAC role that prevents the use of AI Studio.

| Why | Risk / Impact | Solution |
|---|---|---|
| Azure OpenAI Studio does not log prompts and responses in the diagnostic logs | Risk – High; Impact – High | - Implement custom logging at the client side to capture relevant details. - Use alternative logging mechanisms to track prompts and responses securely. |
| Azure OpenAI Studio will bypass APIM | Risk – High; Impact – High | - Create an intermediary hop between the client and AOAI service and allow AOAI access only via Private Endpoint (e.g., AppGW/APIM). - Enable end user only via Service Principals (no portal access). - DNS block oai.azure.com. |

Rationale:

No other out-of-the-box method exists to disable Azure OpenAI Studio.

Action:

- Control access to individual Azure OpenAI instances through restricting what Virtual Networks can access the OpenAI instance (Service Tags need to be allowed through a Network Security Group), so make sure you make use of Network Security Groups where possible to only tunnel your approved traffic (i.e., AppGw or APIM only).

- Make use of Role-based access control for Azure OpenAI Service to control who can see what.

- Make use of Azure Policy built-in policy definitions for Azure AI services to control network access to your Azure OpenAI instances for existing or new deployments.

- Deploy a Custom policy to prevent the creation of Azure OpenAI Studio. Refer to my blog post: Azure Policy - Deny the creation of Azure OpenAI Studio.

🚷 Restrict models to only certain users

When considering Azure OpenAI adoption, it is essential to restrict models (or deployments) to only certain users.

Ask:

The model should be accessible to only the required audience and no one else.

Challenge:

Azure OpenAI does not have the ability to grant permissions by models.

| Why | Risk / Impact | Solution |
|---|---|---|
| Model and data access should follow the principle of least privilege | Risk – High; Impact – High | - Each use case should have its own Azure OpenAI instance. - Deploy approved models for use cases only. - Segregate duties of model deployment and model consumption via a custom RBAC role with the least privilege. |

Rationale:

Having segregated instances for each use case eliminates the risk of model misuse and data exposure. New models and their inferencing capabilities need to be explicitly approved per use case before they can be leveraged.

Action:

- Create and deploy an Azure OpenAI Service resource for each use case, and deploy only approved models for that use case.

- Make use of Role-based access control for Azure OpenAI Service to control who can see what, and separate the users deploying the models from the users consuming them. For example, assign Cognitive Services OpenAI User to a Managed Identity or user that consumes the deployments.

- Make use of Azure Policy built-in policy definitions for Azure AI services, to control network access to your Azure OpenAI instances for existing or new deployments, and also restrict the creation of new 'unapproved' Azure OpenAI resources from being created.
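Segregating model deployment from model consumption with a custom RBAC role could look something like the sketch below, which builds a role definition in the JSON shape accepted by Azure role definition tooling. Treat it as an assumption-laden starting point: the role name, the helper `consumer_role`, and the exact list of data actions are illustrative, so verify the action strings against the current Microsoft.CognitiveServices provider operations before using them.

```python
import json


def consumer_role(subscription_id: str, resource_group: str) -> dict:
    """Return a custom role that can call deployed models but cannot deploy or list keys."""
    scope = f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
    return {
        "Name": "AOAI Inference Only (example)",  # hypothetical role name
        "IsCustom": True,
        "Description": "Consume approved Azure OpenAI deployments; no deployment or key access.",
        # Control plane: read-only, so consumers can see the resource and its deployments.
        "Actions": [
            "Microsoft.CognitiveServices/accounts/read",
            "Microsoft.CognitiveServices/accounts/deployments/read",
        ],
        "NotActions": [],
        # Data plane: inference calls only (assumed action names, verify before use).
        "DataActions": [
            "Microsoft.CognitiveServices/accounts/OpenAI/deployments/chat/completions/action",
            "Microsoft.CognitiveServices/accounts/OpenAI/deployments/embeddings/action",
        ],
        "NotDataActions": [],
        "AssignableScopes": [scope],
    }


if __name__ == "__main__":
    # Placeholder identifiers; write the output to a file for your deployment tooling.
    print(json.dumps(consumer_role("<subscription-id>", "<resource-group>"), indent=2))
```

The users or pipelines that deploy models would get a separate role that includes the deployment write permission, keeping the two duties apart.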

💰 FinOps - view total opex of OpenAI

Consumption per use case could vary by an order of magnitude. You may want to have visibility of costs at a service or deployment level, especially for use cases where the Azure OpenAI resource is shared but deployments are spread between projects.

Ask:

Use cases must be able to view their total spend for the Azure OpenAI service

Challenge:

- Cost metrics are available at the service level and select deployments, but not all

- Any sharing of Azure OpenAI service makes cost determination not possible

- No ability to stop usage beyond spending budget

| Why | Risk / Impact | Solution |
|---|---|---|
| - Use case owners need to monitor consumption as they are responsible for cost - Azure OpenAI service can create significant costs depending on usage | Risk – Medium; Impact – High | - Provision each use case in a dedicated instance and a dedicated subscription & resource group; this also ensures full AOAI capacity (Tokens per min) is available to the use case |

Rationale:

- Segregated instances for each use case provide an accurate cost for each use case.

Sharing an instance of Azure OpenAI among multiple tenants can also lead to a Noisy Neighbor problem. It can cause higher latency for some tenants. You also need to make your application code multitenancy-aware. For example, if you want to charge your customers for the consumption cost of a shared Azure OpenAI instance, implement the logic to keep track of the total number of tokens for each tenant in your application.
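If you do share an instance, the per-tenant token tracking described above might be sketched like this. It assumes each response carries a `usage` object with `prompt_tokens` and `completion_tokens`, as chat completion responses do. The `TokenLedger` class and the per-1,000-token prices are hypothetical placeholders, not real rates.

```python
from collections import defaultdict


class TokenLedger:
    """Accumulates token usage per tenant for a shared Azure OpenAI instance."""

    def __init__(self, prompt_price_per_1k: float, completion_price_per_1k: float):
        # Prices are placeholders; look up the actual rates for your model and region.
        self.prompt_price_per_1k = prompt_price_per_1k
        self.completion_price_per_1k = completion_price_per_1k
        self._totals = defaultdict(lambda: {"prompt_tokens": 0, "completion_tokens": 0})

    def record(self, tenant_id: str, usage: dict) -> None:
        """Add the `usage` block of one response to the tenant's running totals."""
        totals = self._totals[tenant_id]
        totals["prompt_tokens"] += usage.get("prompt_tokens", 0)
        totals["completion_tokens"] += usage.get("completion_tokens", 0)

    def totals(self, tenant_id: str) -> dict:
        return dict(self._totals[tenant_id])

    def estimated_cost(self, tenant_id: str) -> float:
        """Estimate spend from the recorded tokens and the configured prices."""
        totals = self._totals[tenant_id]
        return (
            totals["prompt_tokens"] / 1000 * self.prompt_price_per_1k
            + totals["completion_tokens"] / 1000 * self.completion_price_per_1k
        )
```

In practice the totals would be persisted rather than held in memory, and reconciled against billing data, which is part of the extra complexity a shared instance brings.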

Action:

- Provision dedicated instances for each use case and rely on distinct Resource Groups and Subscriptions to separate costs.

- Review Cost Management and scope the cost per Model tokens.

- Make use of API Management, and Token Usage and merge that with your billing data.

- If the Azure OpenAI instance is part of a wider solution, make sure you tag it with the cm-resource-parent tag to allow full visibility of the workload's cost.

- Understand your throughput requirements and investigate PTU (provisioned throughput units). You can leverage Azure AI Studio to calculate PTUs.

- Review Multitenancy and Azure OpenAI Service architecture considerations.

You don't pay per Azure OpenAI resource; you pay for the tokens consumed, so make sure you understand the pricing model. Why overcomplicate your solution with a shared resource when you can have a dedicated resource for each use case and have a clear understanding of the costs (and security) associated with that use case?

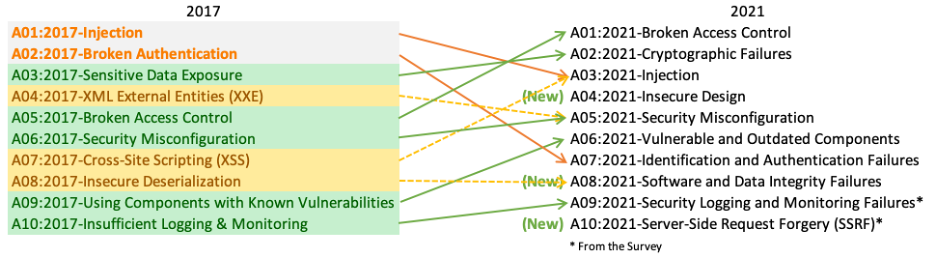

🛡️ Protect from OWASP's Top 10 threats

When considering Azure OpenAI adoption, it is important to protect against OWASP Top 10 threats.

Ask:

Service should be protected from OWASP-10 threats.

Challenge:

Azure OpenAI Service is an API-based service vulnerable to OWASP-10 threats. Web Application Firewalls, which are typically used for this, can block significant legitimate AOAI inferencing calls.

| Why | Risk / Impact | Solution |
|---|---|---|
| Per the Microsoft shared responsibility model, protection from OWASP-10 threats is customer responsibility | Risk – High; Impact – High | - Disable public access to service - Limit exposure to internal traffic only - Disable file uploads to service via RBAC - Add AppGW with WAF v2 in front of AOAI (Not recommended) |

Rationale:

- Limiting access to internal traffic only limits the exposure

- Even if an attack is successful from the internal network, there is no data stored in the service to be compromised

Action:

- Control access to individual Azure OpenAI instances through restricting what Virtual Networks can access the OpenAI instance (Service Tags need to be allowed through a Network Security Group), so make sure you make use of Network Security Groups where possible to only tunnel your approved traffic (i.e., AppGw or APIM only).

- Make use of Role-based access control for Azure OpenAI Service to control who can see what.

- Make use of Azure Policy built-in policy definitions for Azure AI services to control network access to your Azure OpenAI instances for existing or new deployments, and disable public endpoint access.

- Make use of Azure API Management and Azure Application Gateway to block, prevent, and log attempted connections to the solution.

🔑 Must use Customer Managed Keys (CMK)

All encryption must use Customer Managed Keys. I have not personally seen this requirement yet, but it is valid, especially for highly sensitive data and industry requirements (such as Finance).

Ask:

- Encryption in Azure OpenAI must be configured to use Customer Managed Keys (CMK).

Challenge:

- CMK needs to be stored in a KeyVault (in the same region).

- AOAI service connection to KeyVault can be over 'Trusted Microsoft Connection' only, and not via KeyVault's private endpoint.

| Why | Risk / Impact | Solution |
|---|---|---|
| - Per policy, clients typically want to use CMK as opposed to MMK (Microsoft Managed Keys) - CMK configuration will be required to stay compliant with Azure policy | Risk – High; Impact – High | - Avoid need for uploading files to Azure OpenAI studio and fine-tuning - Disable public access of KeyVault |

Rationale:

Trusted Microsoft connections use the Microsoft backbone network and no public network for communication.

Action:

- Azure AI Services support encryption of data at rest by either enabling customer-managed keys (CMK) or by enabling customers to bring their own storage (BYOS). By default, Azure AI services use Microsoft-managed keys to encrypt the data. With customer-managed keys, customers can choose to encrypt the data at rest with an encryption key that they manage in Azure Key Vault. There is an additional cost: you are charged for the Key Vault and additional dependent services, because using Customer Managed Keys brings resources (usually managed by Microsoft in a separate siloed subscription) into your own subscription, in a separate managed Resource Group.

When considering customer-managed keys, consider People, Processes, and Products. You should have processes in place for the key lifecycles (rotation, revocation, etc). Also, consider the implications of the additional costs associated with this and the additional management overhead. Be pragmatic about using Customer-Managed Keys and understand the implications of using them.

❌ Must not use shareable access controls

Shareable access controls are considered less secure.

Ask:

- No use of passwords or access keys to access the Azure OpenAI service.

Challenge:

- The use of keys is typically less secure and unacceptable in the long term, although it typically increases the pace of initial adoption. This makes the use of Service Principals also less secure.

| Why | Risk / Impact | Solution |
|---|---|---|
| - Shareable access details can be transferred to unapproved consumers, losing audit trail - Bearer token of authenticated user can be potentially misused | Risk – High; Impact – High | - Use RBAC for access control to service for end users as well as applications (consumer and middleware) - Avoid using the RBAC role with ListKey permissions or use a custom RBAC role |

Rationale:

RBAC (Role-based Access Control) use increases the security score.

Action:

- Make use of Role-based access control for Azure OpenAI Service to control who can see what, and make sure you are not assigning roles that include the ListKeys permission (Microsoft.CognitiveServices/accounts/listKeys/action). Where a built-in role includes it, use a custom RBAC role that excludes access to the keys.
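One way to check whether a role would let its holders read the access keys is to test its actions against the key-listing operation of the Cognitive Services resource provider (`Microsoft.CognitiveServices/accounts/listKeys/action`), which is the provider behind Azure OpenAI resources. The sketch below is a simplified local check, assuming role definitions in the usual `Actions` / `NotActions` shape. The helper names are hypothetical, and real RBAC evaluation also involves scopes and deny assignments that are not modelled here.

```python
from fnmatch import fnmatchcase

LIST_KEYS = "Microsoft.CognitiveServices/accounts/listKeys/action"


def _matches(patterns: list[str], action: str) -> bool:
    # Role actions may use '*' wildcards; compare case-insensitively.
    return any(fnmatchcase(action.lower(), pattern.lower()) for pattern in patterns)


def grants_list_keys(role: dict) -> bool:
    """Return True if the role's Actions allow listing keys and NotActions do not remove it."""
    allowed = _matches(role.get("Actions", []), LIST_KEYS)
    excluded = _matches(role.get("NotActions", []), LIST_KEYS)
    return allowed and not excluded
```

A check like this could run over the roles you intend to assign, flagging any that would reintroduce shareable keys.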

🌍 Resource must be in a specific region only

Azure OpenAI resources must be in a specific region only. This is a common requirement for data sovereignty and compliance reasons.

Ask:

Provision the Azure OpenAI service in the same region where ExpressRoute terminates.

Challenge:

Azure OpenAI and model capacity are highly variable, and may not be available in the same region as the customer's ExpressRoute region.

| Why | Risk / Impact | Solution |
|---|---|---|
| - Network traffic is monitored for all ingress & egress in a predetermined region - At times there are regulatory compliance requirements to be in specific geographies | Risk – High; Impact – High | - Enable all regions in policy within a geography acceptable to the client - Provision Azure OpenAI service where capacity is available in allowed regions - Create private endpoint in ExpressRoute region |

Rationale:

Azure OpenAI Private Endpoints can be created across regions and no VNet is required for operation.

Action:

- Azure OpenAI in any region can have a Private Endpoint connected to a VNET (Virtual Network) in a different region.

- Make use of Azure Policy, such as the Restrict resource regions policy, to enforce the region of the Azure OpenAI resource.

- Consider model deployment types (i.e., Global vs Regional). With a Global deployment, your model inference may run in a different region from your actual Azure OpenAI resource, so this needs to be considered from a latency and workload variance perspective. However, the models are read-only, so data resiliency should not be a factor, as you can still store your own data in the region of your choice.
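Enforcing regions with Azure Policy can also be done with a custom rule scoped to Azure OpenAI resources only. The sketch below builds such a rule as a Python dictionary in the Azure Policy `if` / `then` shape. It is an illustration rather than the built-in policy: the helper name `openai_region_policy` and the example regions are assumptions, and the rule only governs where the resource itself is created, not where a Global deployment runs inference.

```python
import json


def openai_region_policy(allowed_locations: list[str]) -> dict:
    """Build a policy rule that denies Azure OpenAI resources outside the allowed regions."""
    if not allowed_locations:
        raise ValueError("at least one allowed location is required")
    return {
        "if": {
            "allOf": [
                # Azure OpenAI resources are Cognitive Services accounts of kind 'OpenAI'.
                {"field": "type", "equals": "Microsoft.CognitiveServices/accounts"},
                {"field": "kind", "equals": "OpenAI"},
                {"field": "location", "notIn": list(allowed_locations)},
            ]
        },
        "then": {"effect": "deny"},
    }


if __name__ == "__main__":
    # Example regions only; use the geography acceptable to your organisation.
    print(json.dumps(openai_region_policy(["australiaeast", "australiasoutheast"]), indent=2))
```

Listing every acceptable region within the geography keeps the option open to provision where capacity is available, while the private endpoint can still sit in the ExpressRoute region.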