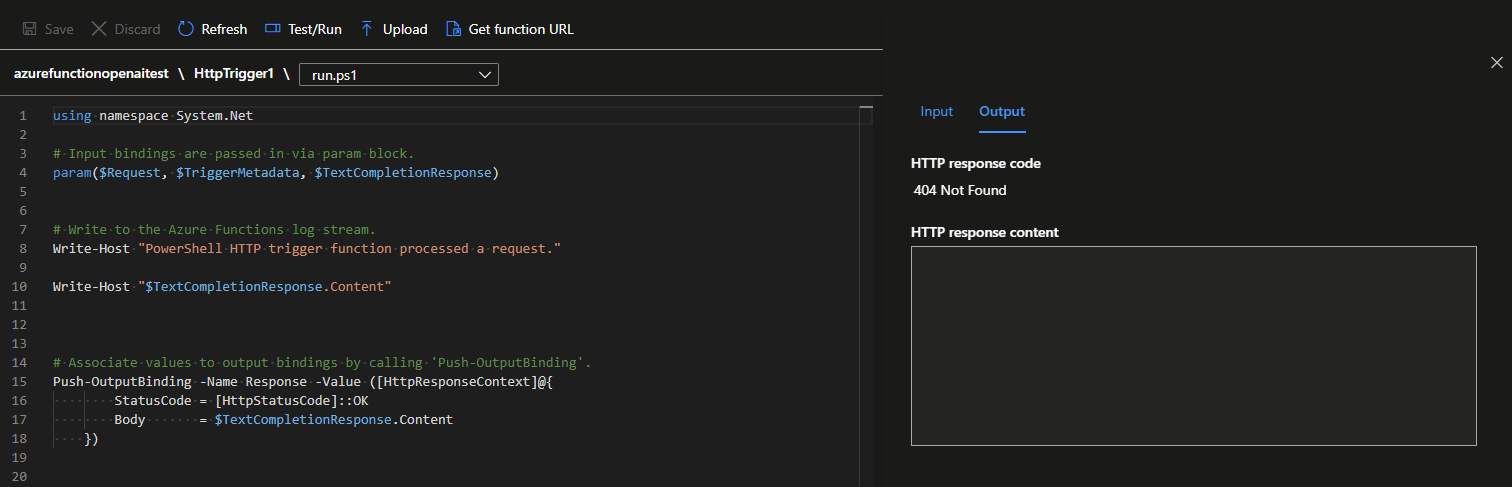

Resolving 404 Error with OpenAI Azure Function Binding

When working with Azure OpenAI, you may want to use Azure Functions to adjust or massage the model's response. To do so, you can use the Azure Functions bindings for OpenAI to query the service. However, you may get a 404 Not Found error when you query OpenAI through the Azure Function binding.