This article is part of the Azure Back to School 2023 event! Make sure to check out the fantastic content created by the community!

Along with the basics of Azure Backup, particularly for Virtual Machines running on Microsoft Azure, this article covers the many changes from the last year, including immutable vaults, enhanced policies, intelligent tiering, and cross-region restore.

Introduction

Let us start with the basics: a user story describing what we need to achieve.

"As a Cloud Infrastructure Administrator at Contoso, I want to implement an automated backup solution for virtual machines (Windows and Linux) hosted in Microsoft Azure,

So that I can ensure data reliability, disaster recovery, and compliance with minimal manual intervention."

With some assumptions around further requirements, we can jump into solutions using native Microsoft Azure services to fulfil the Cloud Administrator's need.

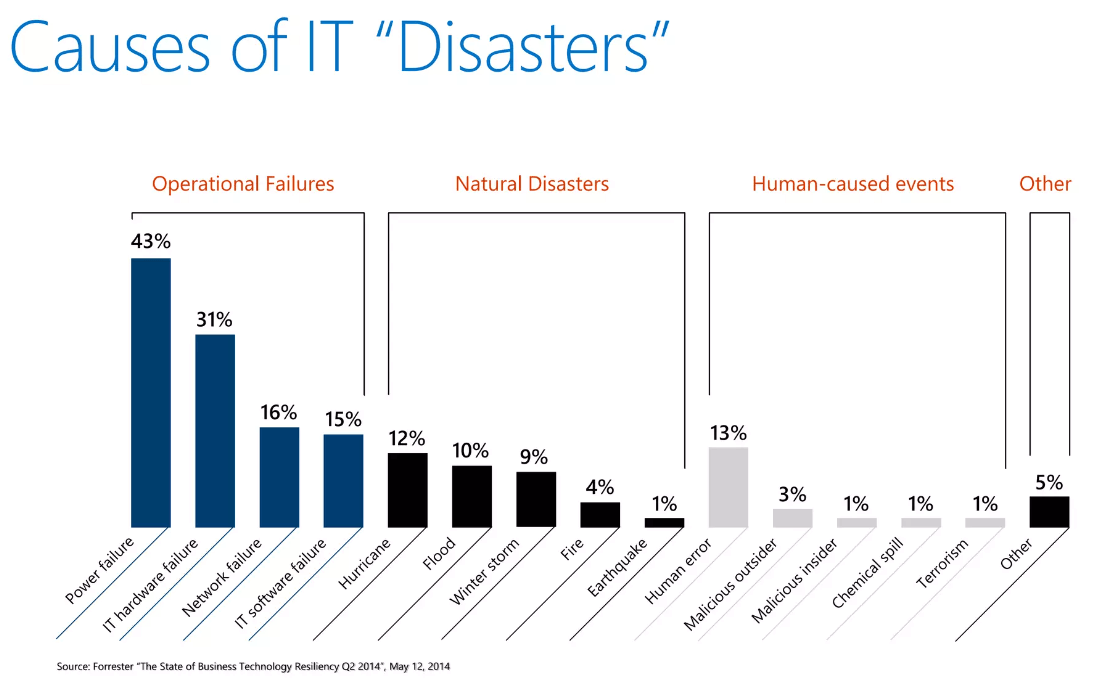

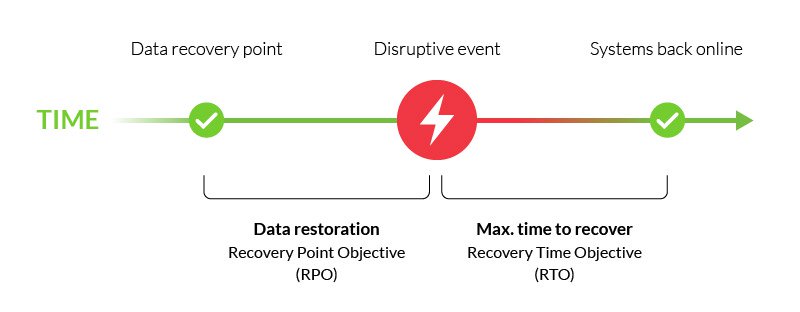

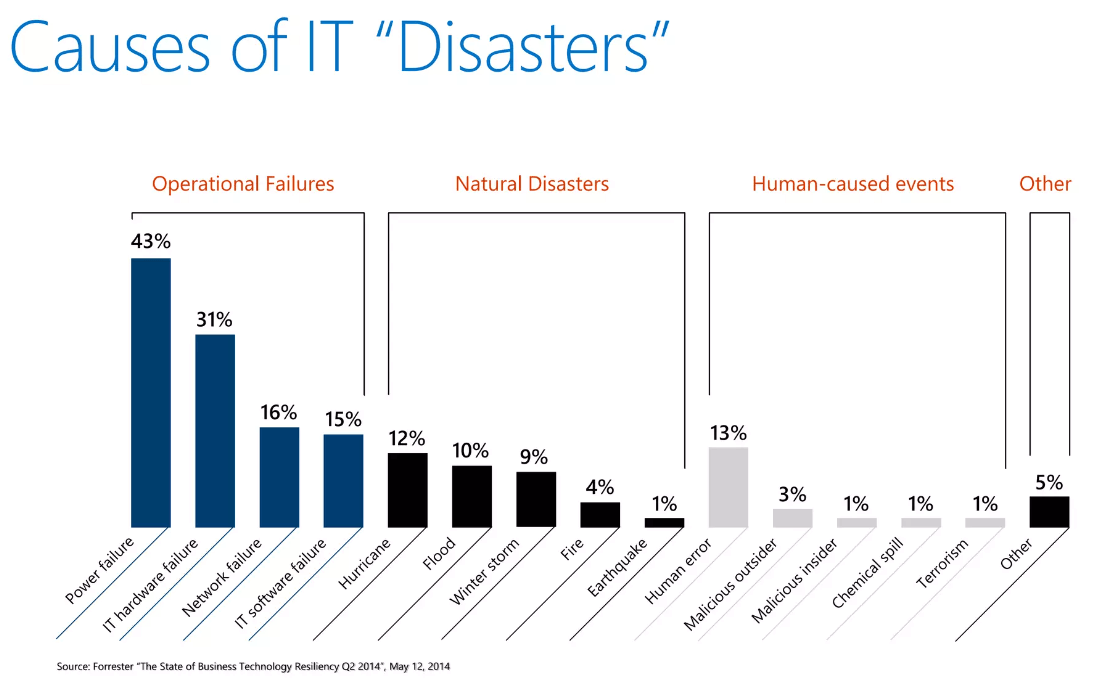

It is worth mentioning, especially around disaster recovery, that there is much more you can (and should) do around mission-critical Azure architecture. This article will focus primarily on the data loss portion of disaster recovery with Azure Backup services.

Note: Azure DevOps Boards is outside the scope of this article; the main point here is to make sure that your decisions and designs are documented in line with business requirements.

There are also some further assumptions we will make, particularly around security and RTO requirements for the organisation of Contoso.

We know that, to fulfil the requirements, we need to implement the following:

So, let us take our notebooks and look at the Backup sections.

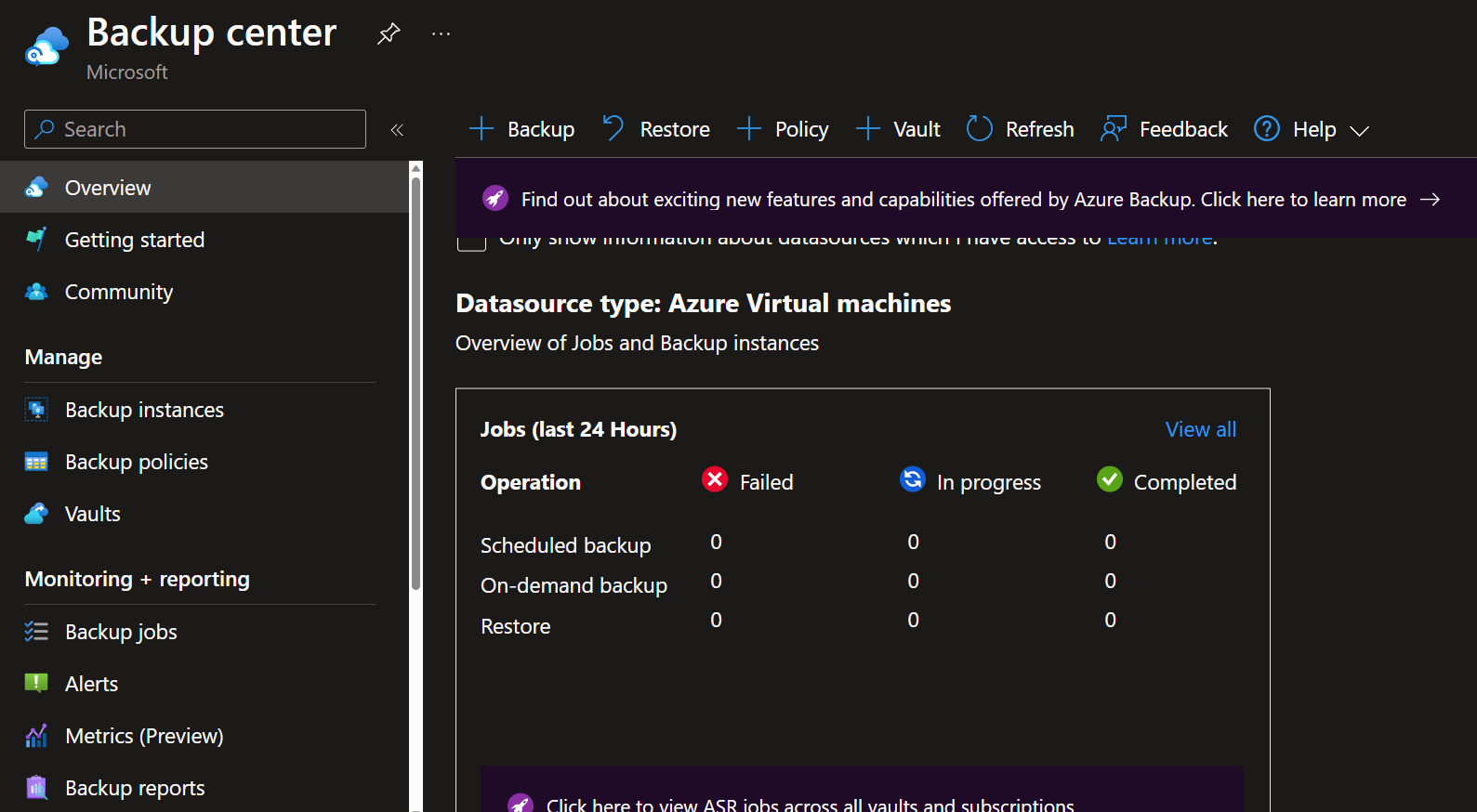

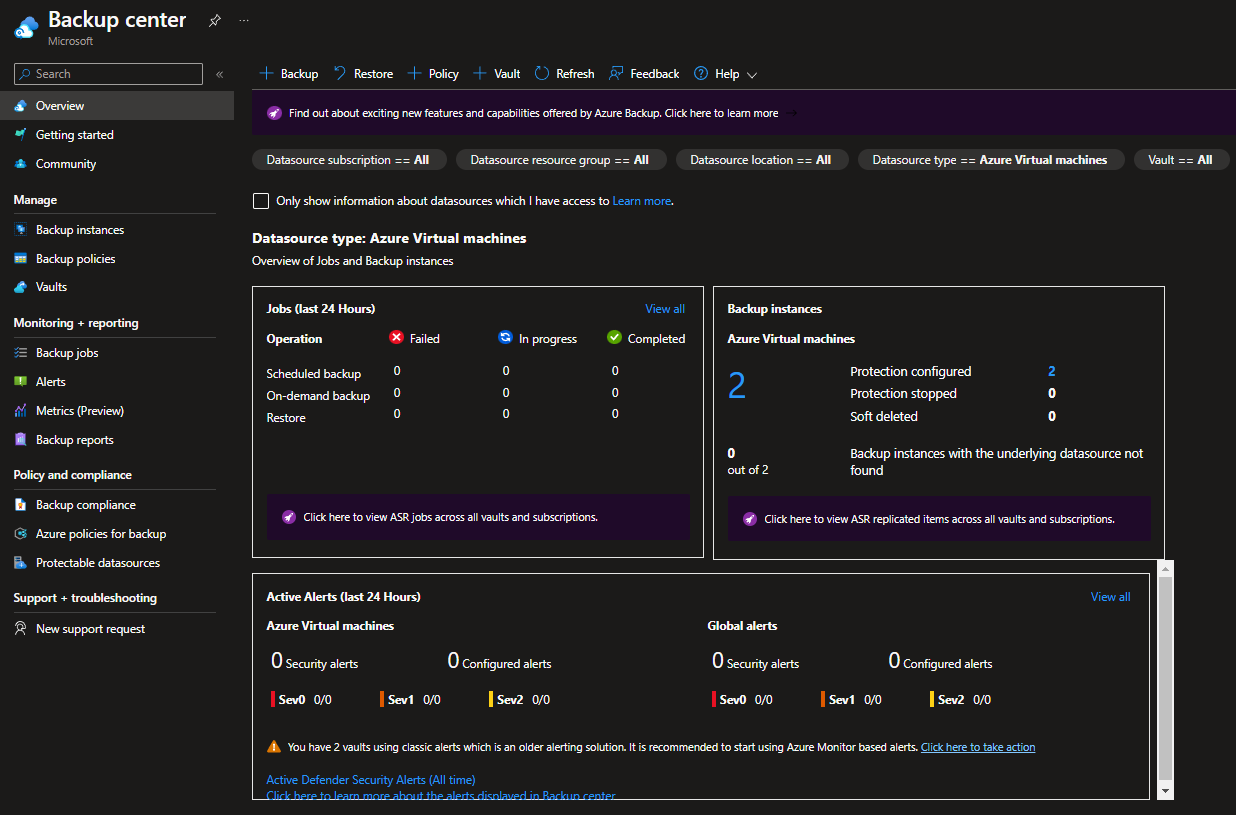

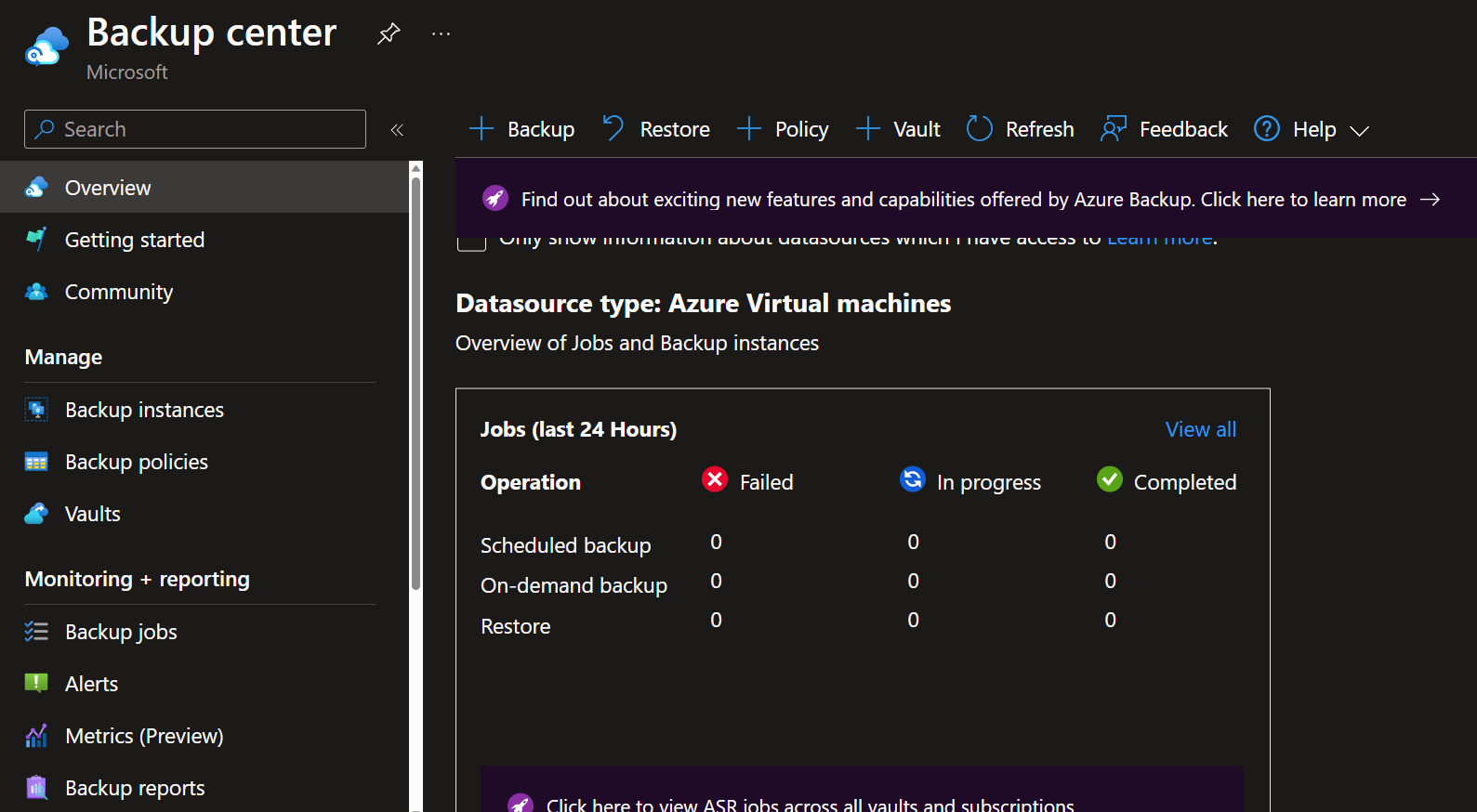

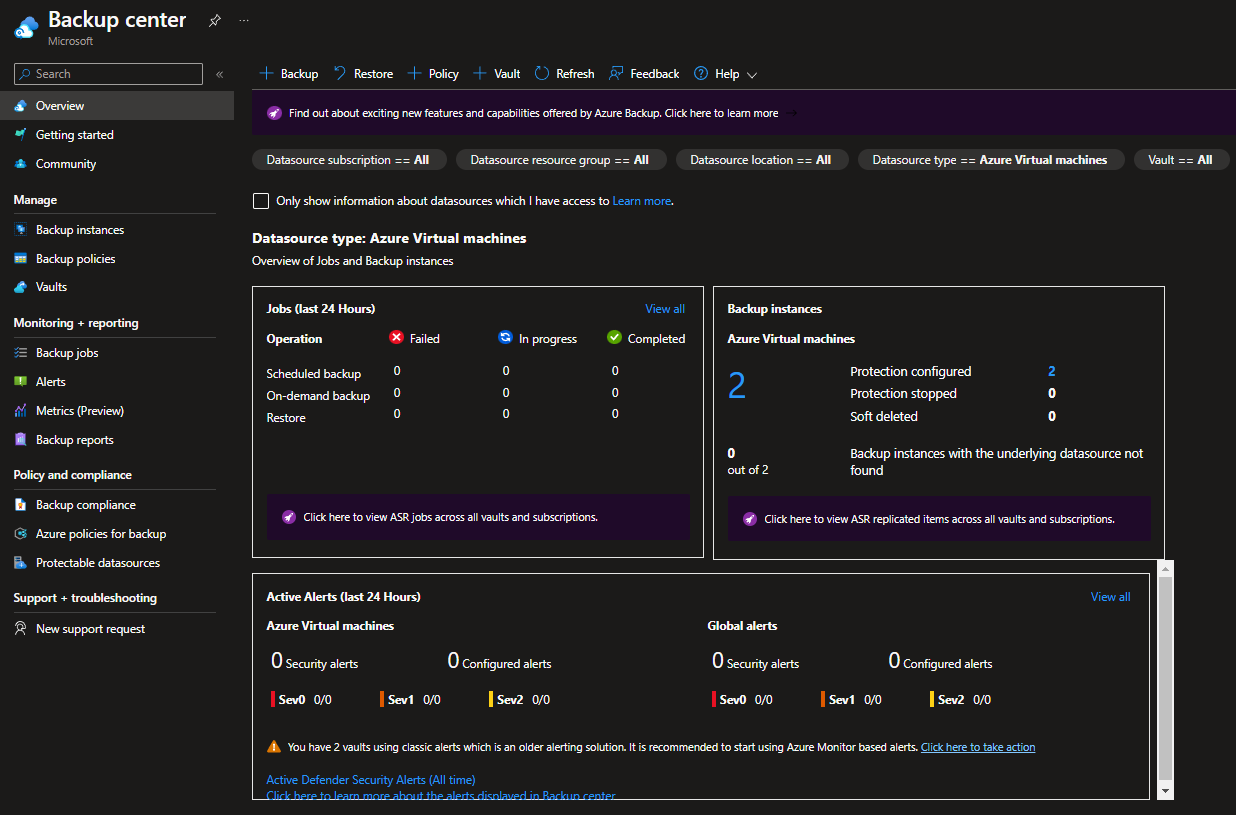

Backup Center

When you need a single control plane for your backups across multiple tenants (using Azure Lighthouse), subscriptions, and regions, Backup Center is the place to start.

"Backup center provides a single unified management experience in Azure for enterprises to govern, monitor, operate, and analyze backups at scale. It also provides at-scale monitoring and management capabilities for Azure Site Recovery. So, it's consistent with Azure's native management experiences. Backup center is designed to function well across a large and distributed Azure environment. You can use Backup center to efficiently manage backups spanning multiple workload types, vaults, subscriptions, regions, and Azure Lighthouse tenants."

As you can see, Backup Center can be used to view and manage:

- Backup instances

- Backup policies

- Vaults

- Monitor and report on backup jobs

- Compliance (i.e. Azure Virtual Machines that are not configured for backup)

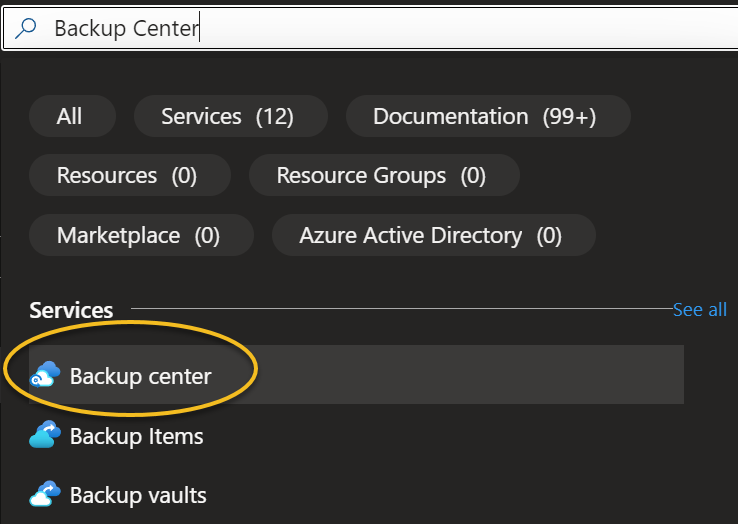

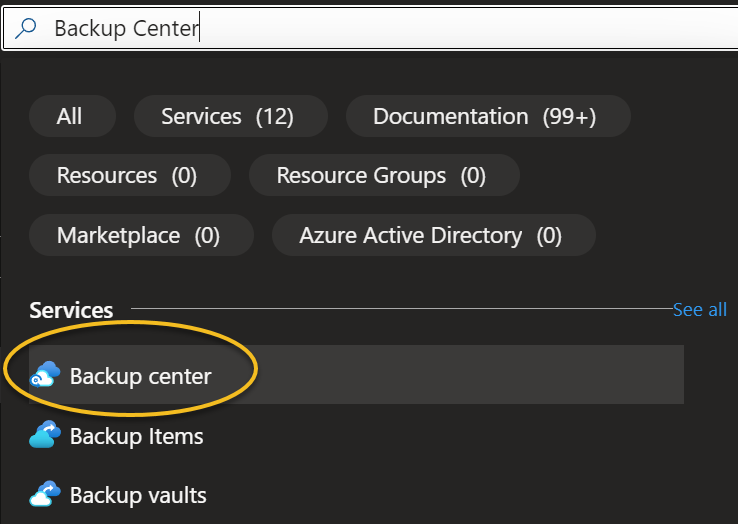

You can find the Backup Center directly in the Azure Portal.

We can create and manage these resources individually, but throughout this article we will refer back to Backup Center to take advantage of its single pane of glass and keep these resources integrated.

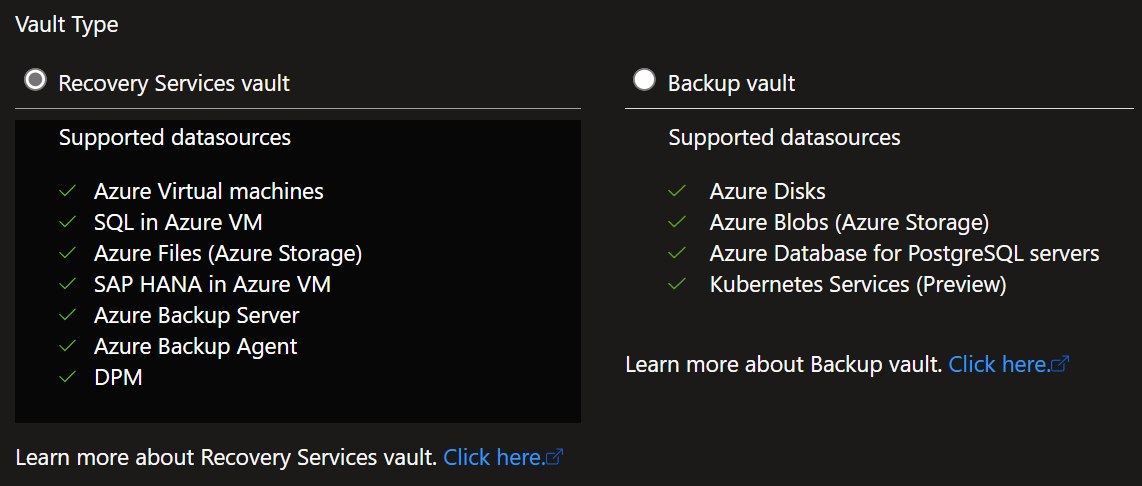

Create Vault

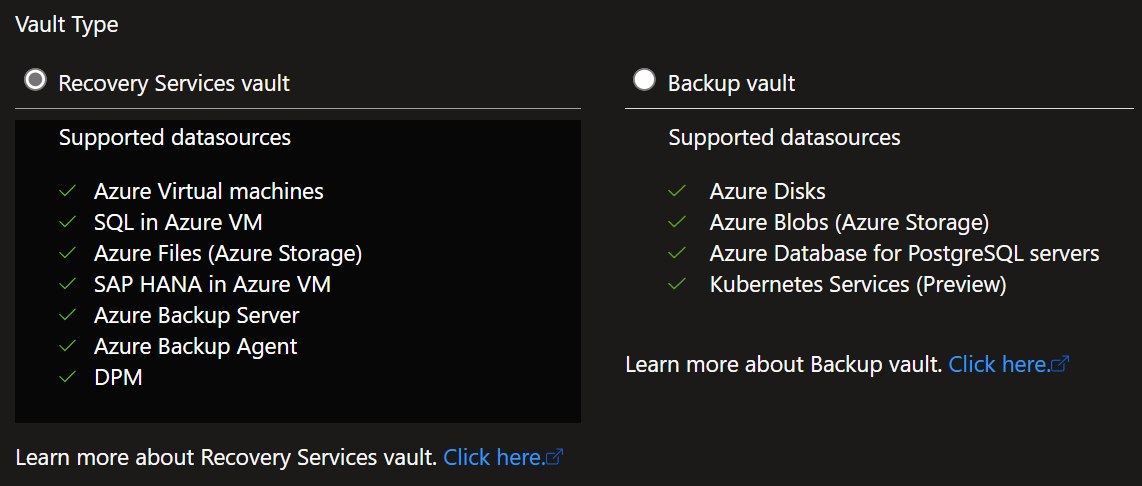

In Microsoft Azure, there are two types of vaults that Backup Center works with. These vaults are:

- Recovery Services vault

- Backup vault

Which vault you create depends on your requirements (for our purposes, we need a Recovery Services vault); Backup Center makes it remarkably easy to create a new vault and select the right vault type using the wizard.

Please refer to the Support matrix for Azure Backup for further information.

- Navigate to Backup Center

- Click on Vaults

- Click + Vault

- Select Recovery Services vault

- Select Continue

- Specify a location and Resource Group to house your Recovery Services vault

- Specify your vault name (see the abbreviation examples for Azure resources)

- Click Next: Vault properties

Immutability:

I talked a bit about immutability in another blog article: You Can't Touch This: How to Make Your Azure Backup Immutable and Secure. Essentially, an immutable vault prevents unauthorised changes and restore point deletions. For this article, we will enable it to prevent unintended or malicious data loss (keep in mind that with immutable vaults, reducing the retention of recovery points is not allowed).

- Check Enable immutability, and click Next: Networking.

- We can join our Recovery Services vault to our private network using private endpoints, forcing Azure Backup and Site Recovery traffic to traverse a private network. For the purposes of this article, we will skip it. Click Next: Tags

- Enter tags (useful tags for a Recovery Services vault could be: Application, Support Team, Environment, Cost Center, Criticality)

- Click Review + Create

If we navigate back to the Backup Center and then Vaults (under Manage), we will be able to see the newly created vault.

We now have our backup solution provisioned for the Cloud Administrator to use, but next we need to define the backup policies.

Create Backup Policies

Now that we have our Recovery Services vault, we need to create backup policies. These backup policies define the frequency of backups, the retention (daily, weekly, monthly, yearly) and vault tiering, which enables the Recovery Services vault to move recovery points to an archive tier (slower to restore, but cheaper overall for long retention policies).

Backup policies are very organisation-specific and can depend heavily on operational and industry requirements; some industries have a legal obligation to store their backups for a certain number of years. The Azure compliance center documentation may help with security and data requirements, but make sure your backup policies are understood by the business you are working with.

For Contoso, we have the following requirements:

| Resource | Daily | Weekly | Monthly | Yearly | Snapshot Retention (Hot) |
|---|---|---|---|---|---|
| Critical Application DB - Prod | 7 days - Every 4 Hours | 4 weeks | 6 months | 7 years | 5 days |
| File Server - Prod | 7 days - Every 4 Hours | 6 weeks | 6 months | 7 years | 5 days |
| Web Application VM - Dev | 20 days | 8 weeks | 12 months | 2 years | 2 days |
| Database Server - Dev | 30 days | 8 weeks | 12 months | 2 years | 2 days |

There are a few things to call out here:

- We can see that for Development, items need to be retained for 2 years

- For Production, it's 7 years

- Snapshots need to be retained for 5 days (Production) and 2 days (Development) to allow fast restores

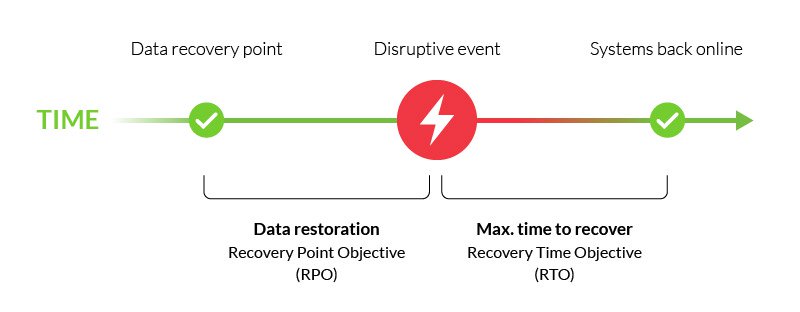

- Production requires a backup to be taken every 4 hours to reduce the RPO (recovery point objective)
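To make the RPO implications of the table concrete, here is a minimal Python sketch that models Contoso's requirements as data and derives backups per day and the worst-case RPO. The `RetentionRequirement` class and its field names are hypothetical (not an Azure Backup API), and it assumes the Development rows, which list no frequency, take one backup per day.

```python
from dataclasses import dataclass


# Hypothetical in-memory model of the Contoso requirements table; the field
# names are illustrative only and are not part of any Azure Backup API.
@dataclass(frozen=True)
class RetentionRequirement:
    resource: str
    backup_interval_hours: int  # 4 = "Every 4 Hours"; 24 = assumed once-daily backup
    daily_days: int
    weekly_weeks: int
    monthly_months: int
    yearly_years: int
    snapshot_days: int

    def backups_per_day(self) -> int:
        return 24 // self.backup_interval_hours

    def worst_case_rpo_hours(self) -> int:
        # A failure just before the next scheduled backup loses up to one
        # full interval of changes.
        return self.backup_interval_hours


CONTOSO = [
    RetentionRequirement("Critical Application DB - Prod", 4, 7, 4, 6, 7, 5),
    RetentionRequirement("File Server - Prod", 4, 7, 6, 6, 7, 5),
    RetentionRequirement("Web Application VM - Dev", 24, 20, 8, 12, 2, 2),
    RetentionRequirement("Database Server - Dev", 24, 30, 8, 12, 2, 2),
]

for req in CONTOSO:
    print(f"{req.resource}: {req.backups_per_day()} backup(s)/day, "
          f"worst-case RPO {req.worst_case_rpo_hours()}h, "
          f"kept {req.yearly_years} years, snapshots {req.snapshot_days} days")
```

Moving from a daily to a 4-hourly schedule shrinks the worst-case data loss from 24 hours to 4 hours, at the cost of six backups per day.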

If we take a look at the snapshot retention, we can leverage instant restore snapshots to restore workloads quickly from the previous 5 days. Because the snapshots are stored locally (as close as possible to the original disk), we don't have to wait for the data to be restored from the vault (slower storage), which reduces our RTO (recovery time objective) and the overall impact of an outage or restore. This will incur more cost, but dramatically reduces restore time. I recommend always keeping a few instant restore snapshots available for all production systems.
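Whether a restore can use a fast local snapshot or must come from the slower vault comes down to the age of the recovery point. Here is a simplified, hypothetical Python sketch of that rule, assuming the 5-day production snapshot retention above; Azure Backup makes this choice for you, and `restore_source` only illustrates the idea.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def restore_source(recovery_point_time: datetime,
                   snapshot_retention_days: int,
                   now: Optional[datetime] = None) -> str:
    """Hypothetical model of where a restore would most likely come from.

    Points younger than the instant restore snapshot retention can be restored
    from the local snapshot (fast); older points come from the vault (slower).
    """
    now = now or datetime.now(timezone.utc)
    age = now - recovery_point_time
    if age <= timedelta(days=snapshot_retention_days):
        return "snapshot"
    return "vault"


now = datetime(2023, 9, 15, 12, 0, tzinfo=timezone.utc)
print(restore_source(now - timedelta(days=2), 5, now))  # snapshot
print(restore_source(now - timedelta(days=6), 5, now))  # vault
```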

Let us create the policies (we will only create one policy, but the same process can be used to create the others).

- Navigate to Backup Center

- Click on Backup policies

- Click + Add

- Select Azure Virtual Machines

- Select the Vault created earlier

- Click Continue

- As this will be the policy for the Critical Application DB, we will select Enhanced (to support multiple backups per day and zone-redundant storage (ZRS) snapshots)

- Specify a Policy name, e.g. Tier-1-Prod-AppDB

- Set Frequency to Hourly, Schedule to Every 4 Hours, and Duration to 24 Hours

- Set Retain instant recovery snapshots for to '5' days

- Set the daily backup point retention to 7 days

- Configure the Weekly backup point to occur every Sunday and retain for 4 weeks

- Configure the Monthly backup point to occur on the first Sunday of the month and retain for 6 months

- Configure the yearly backup point to occur on the first Sunday of the year and retain for 7 years

- Select Enable tiering, and choose Recommended recovery points

- You can also update the Resource Group name used to store the Snapshots.

- Click Create
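The schedule configured above can be sanity-checked with a small Python sketch that lists the backup start times for an every-4-hours window and finds the first Sunday of a month for the weekly, monthly and yearly points. The helper names and the 08:00 start time are hypothetical; the portal computes the real schedule.

```python
from datetime import date, time, timedelta


def scheduled_backup_times(start_hour: int, every_hours: int, duration_hours: int) -> list:
    """Backup start times for an hourly schedule (e.g. every 4 hours for 24 hours)."""
    return [time(hour=(start_hour + offset) % 24)
            for offset in range(0, duration_hours, every_hours)]


def first_sunday(year: int, month: int) -> date:
    """First Sunday of a month, as used by the monthly and yearly points."""
    first = date(year, month, 1)
    # date.weekday(): Monday == 0 ... Sunday == 6
    return first + timedelta(days=(6 - first.weekday()) % 7)


# Hypothetical 08:00 start time; choose your own in the policy.
print([t.strftime("%H:%M") for t in scheduled_backup_times(8, 4, 24)])
# ['08:00', '12:00', '16:00', '20:00', '00:00', '04:00']
print(first_sunday(2023, 9))  # 2023-09-03
```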

Note: You can repeat the same process to create any other policies you need. Remember, with immutable vaults you cannot reduce retention (but you can increase it), so if you are starting out, keep retention low until you have a clear direction of what is required. Multiple workloads can use the same policy, and a Standard (not Enhanced) policy may be all you need for Development workloads.
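Because an immutable vault rejects retention reductions, it can help to check a proposed policy change before you apply it. Below is a minimal Python sketch under that assumption; the setting keys (`daily_days`, `yearly_years`, etc.) and the function are hypothetical and are not an Azure API.

```python
def retention_violations(current: dict, proposed: dict, immutable: bool) -> list:
    """List retention settings that a proposed policy change would reduce.

    Assumes: with an immutable vault, any reduction is rejected while increases
    are allowed. Keys are hypothetical names such as "daily_days".
    """
    if not immutable:
        return []
    violations = []
    for setting, old_value in current.items():
        new_value = proposed.get(setting, 0)  # a removed setting counts as 0
        if new_value < old_value:
            violations.append(f"{setting}: {old_value} -> {new_value}")
    return violations


current = {"daily_days": 7, "weekly_weeks": 4, "yearly_years": 7}
print(retention_violations(current, {**current, "yearly_years": 10}, True))  # []
print(retention_violations(current, {**current, "weekly_weeks": 2}, True))
# ['weekly_weeks: 4 -> 2']
```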

Add Virtual Machines

Now that we have our Recovery Services Vault and custom backup policies, it's time to add our Virtual Machines to the backup! To do this, we can use the Backup center to view Virtual Machines that are not getting backed up, and then configure the backup.

- Navigate to Backup Center

- Click on Protectable data sources

- Click on the ellipsis of a Virtual Machine you want to back up

- Click on Backup

- Select the appropriate Recovery Services vault and backup policy

- Click Enable backup

Although cross-region restore is now supported on a Recovery Services vault, the secondary region is read-only (RA-GRS), so make sure you have a Recovery Services vault created in the same region (and subscription) as the virtual machines you are trying to protect. Backup Center can see all Recovery Services vaults across the regions and subscriptions that you have access to.
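The same-region, same-subscription rule can be expressed as a simple lookup. This is a hypothetical Python sketch with made-up vault names and subscription IDs, useful only for reasoning about which vault a VM should be protected by.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Vault:
    name: str
    subscription_id: str
    region: str


@dataclass(frozen=True)
class VirtualMachine:
    name: str
    subscription_id: str
    region: str


def find_vault_for_vm(vm: VirtualMachine, vaults: Iterable[Vault]) -> Optional[Vault]:
    """Return a vault in the same region and subscription as the VM, if any."""
    for vault in vaults:
        if vault.region == vm.region and vault.subscription_id == vm.subscription_id:
            return vault
    return None


# Hypothetical names and subscription IDs for illustration only.
vaults = [Vault("rsv-prod-ae", "sub-prod", "australiaeast"),
          Vault("rsv-dr-ci", "sub-prod", "centralindia")]
vm = VirtualMachine("VM1", "sub-prod", "australiaeast")
print(find_vault_for_vm(vm, vaults).name)  # rsv-prod-ae
```

A `None` result means the VM has no eligible vault yet, so one needs creating in that region and subscription first.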

Once added, the Virtual Machine will now get backed up according to the specified policy.

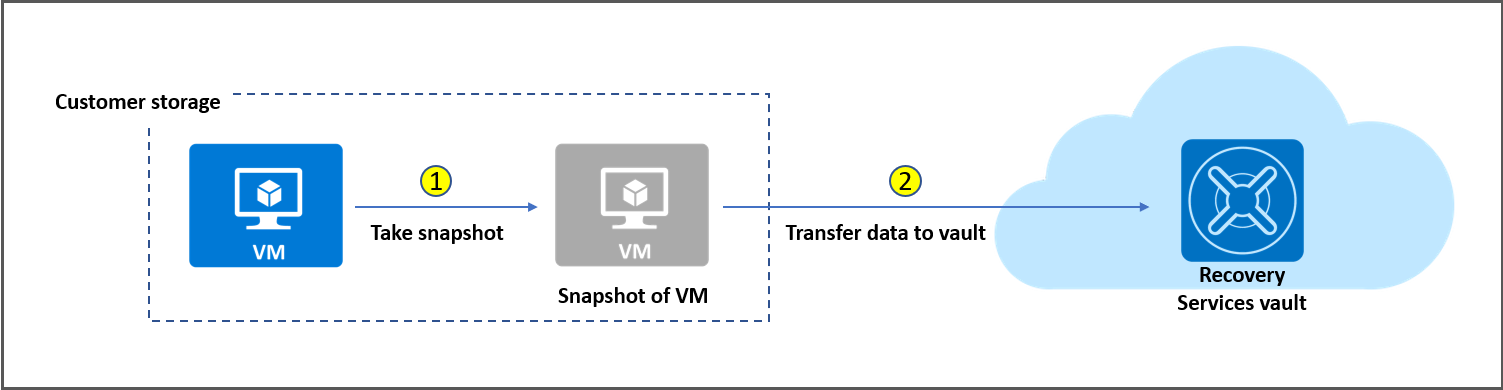

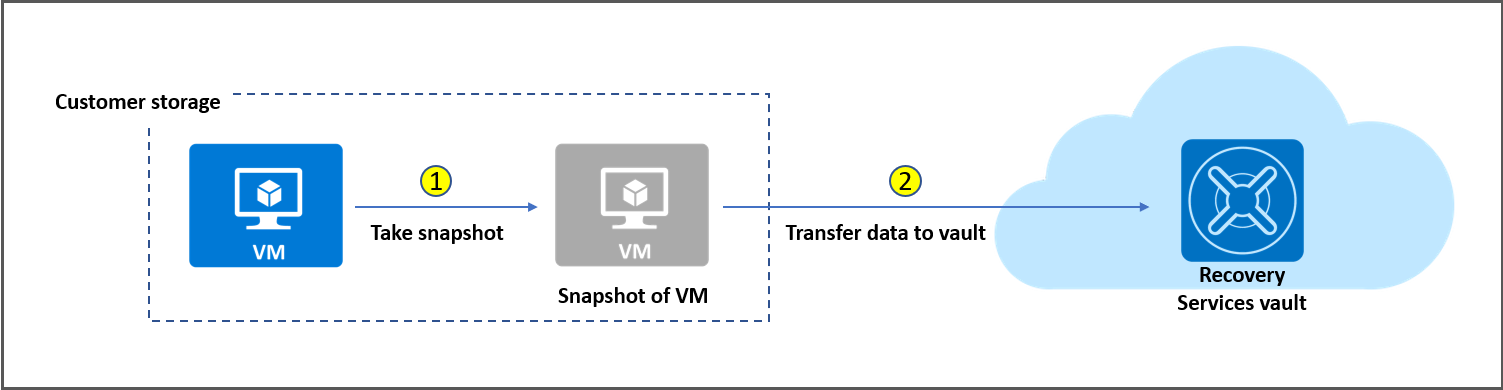

It's worth noting that you can back up a Virtual Machine while it is deallocated, but the backup will be crash-consistent (only the data that already exists on the disk at the time of backup is captured, and a disk check is triggered during recovery) rather than application-consistent. Application-consistent backups are OS- and application-aware, so they can prepare the OS and applications to make sure everything is written to disk ahead of the backup. You can read more about Snapshot consistency.

Monitor Backups

Now that we have our Recovery Services Vault, policies and protected items (backed up Virtual Machines), we need to monitor to make sure that the backups are working. Backup center gives us a complete view of Failed, In Progress, and Completed jobs in the overview pane, which is excellent for a quick view of the status across subscriptions and regions.

But you may want something a bit more detailed; let us look into some of the options for monitoring your backups.

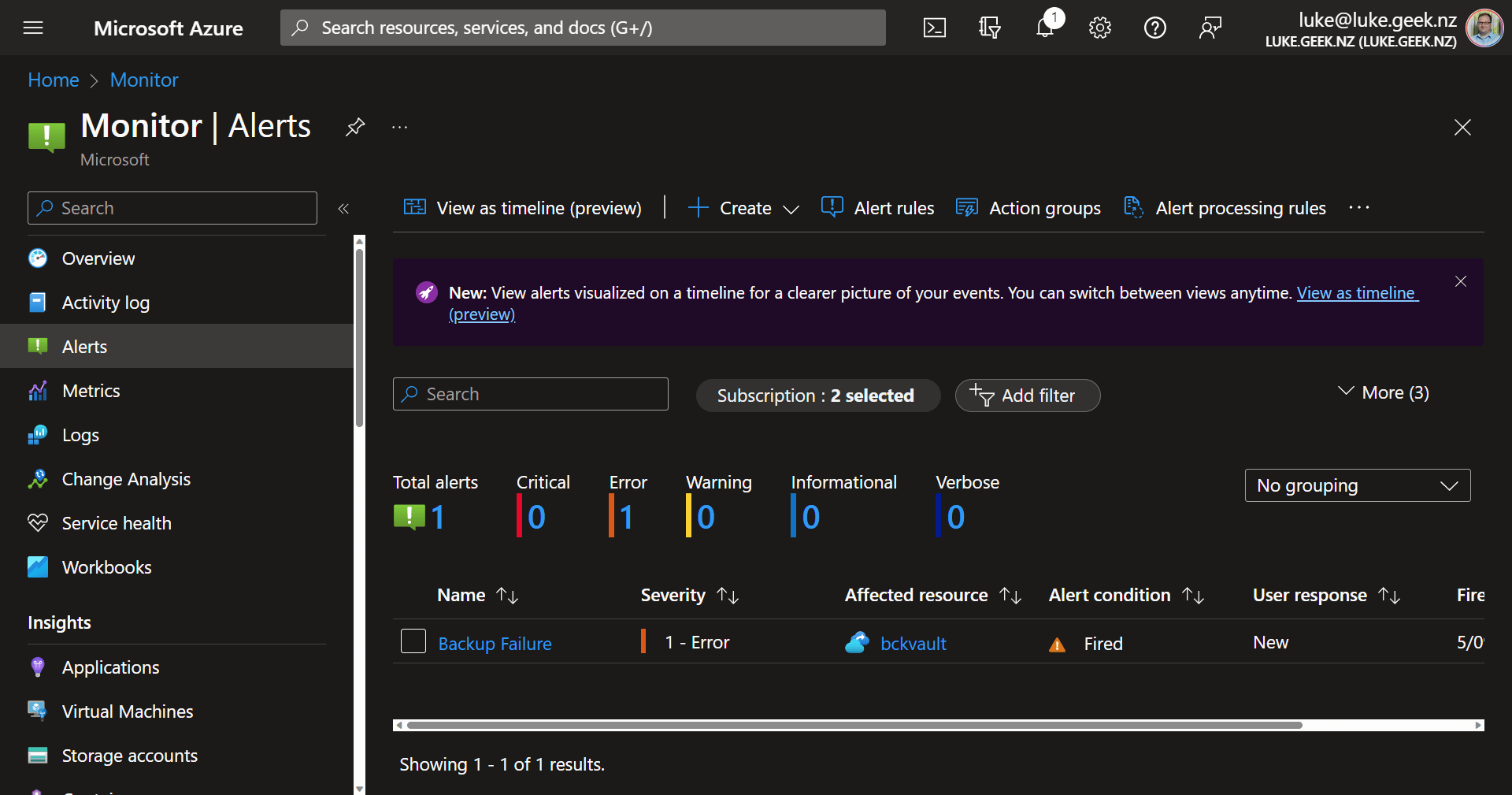

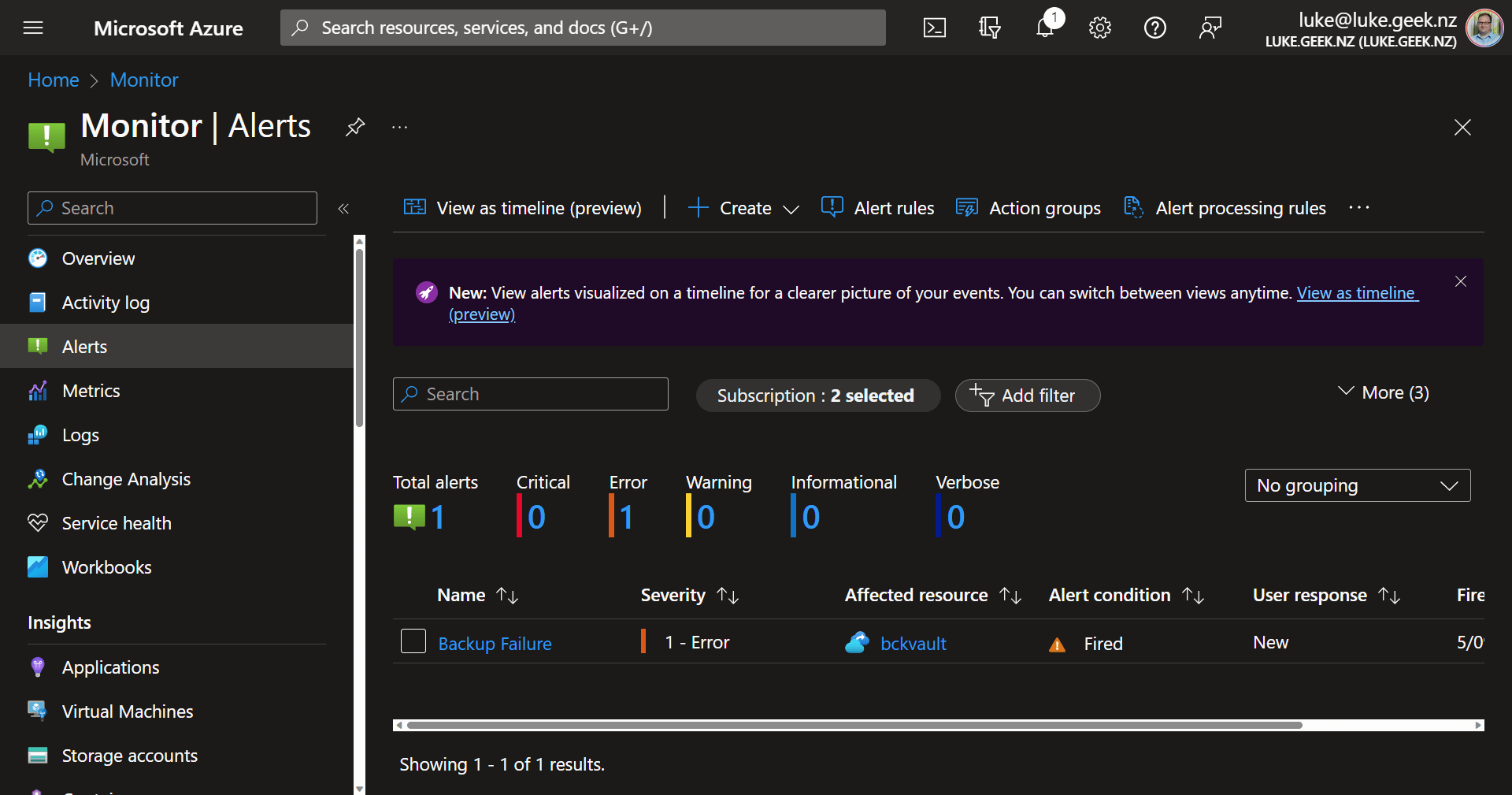

Alerts

As part of operational checks, you may want assurance or a ticket raised if there's an issue with a backup; one of the ways to achieve this is to set up an email alert that will send an email if a backup fails.

By default, these types of alerts are enabled out-of-the-box on a Recovery Services vault (examples can be found in Azure Monitor alerts for Azure Backup), and they are displayed immediately in the Recovery Services vault or Backup Center blade.

If a destructive operation, such as stop protection with delete data, is performed, an alert is raised, and an email is sent to subscription owners, admins, and co-admins even if notifications aren't configured for the Recovery Services vault.

| Type | Description | Example alert scenarios | Benefits |
|---|---|---|---|
| Built-in Azure Monitor alerts (AMP alerts) | These are alerts that will be available out-of-the-box for customers without needing additional configuration by the customer. | Security scenarios like deleting backup data, soft-delete disabled, vault deleted, etc. | Useful for critical scenarios where the customer needs to receive alerts without the possibility of alerts being subverted by a malicious admin. Alerts for destructive operations fall under this category |
| Metric alerts | Here, Azure Backup will surface backup health-related metrics for customers' Recovery Services vaults and Backup vaults. Customers can write alert rules on these metrics. | Backup health-related scenarios such as backup success alerts, restore success, schedule missed, RPO missed, etc. | Useful for scenarios where customers would like some control over the creation of alert rules but without the overhead of setting up LA or any other custom data store. |
| Custom Log Alerts | Customers configure their vaults to send data to the Log Analytics workspace and write alert rules on logs. | 'N' consecutive failed backup jobs, Spike in storage consumed, etc. | Useful for scenarios where there is a relatively complex, customer-specific logic needed to generate an alert. |
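The "'N' consecutive failed backup jobs" scenario is the kind of customer-specific logic a custom log alert would implement against Log Analytics data (in practice, as a log query). The following Python sketch only illustrates that logic, assuming the job history is available as chronological `(item, status)` pairs; the function name is hypothetical, and this is not how Azure Monitor evaluates the rule.

```python
from collections import defaultdict


def items_failing_consecutively(jobs, n):
    """Return backup items whose most recent n (or more) jobs all failed.

    jobs: iterable of (backup_item, status) tuples in chronological order,
    e.g. exported job history. Any non-"Failed" status resets the streak.
    """
    streak = defaultdict(int)
    for item, status in jobs:
        streak[item] = streak[item] + 1 if status == "Failed" else 0
    return {item for item, count in streak.items() if count >= n}


history = [("VM1", "Failed"), ("VM2", "Failed"), ("VM1", "Failed"),
           ("VM2", "Completed"), ("VM1", "Failed")]
print(items_failing_consecutively(history, 3))  # {'VM1'}
```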

Backup alerts are integrated with Azure Monitor, so in the Azure Monitor Alerts pane you can see all your other alerts, including Azure Backup alerts, in a single pane.

If you want to be notified by email about other types of alerts, such as backup failures, we can use Azure Monitor action groups and alert processing rules to let us know without having to log in to the Azure Portal directly. Let us create an email alert.

To do this, we will create an Action Group and Alert Processing rule.

| Component | Description |
|---|---|
| Action Group | An Action Group is a collection of actions or tasks that are executed automatically when an alert that matches specific criteria is triggered. Actions can include sending notifications, running scripts, triggering automation, or escalating the alert. Action Groups help streamline incident response and automate actions based on the nature and severity of an alert. |
| Alert Processing Rule | An Alert Processing Rule is a set of conditions and criteria used to filter, categorize, or route incoming alerts within a monitoring or alerting system. These rules enable organizations to define how alerts are processed, prioritize them, and determine the appropriate actions to take when specific conditions are met. Alert Processing Rules are crucial for managing and efficiently responding to alerts. |

- Navigate to Backup Center

- Click on Alerts

- Click on Alert Processing rule

- Click + Select Scope

- Click All Resource Types, and Filter by: Recovery Services Vault

- Select the Recovery Services vault you would like to alert on

- Click Apply

- Click on Filter, and change: Alert condition = Fired.

- Click Next: Rule Settings

- Click Apply action group

- Click + Create action group

- Select the Subscription and Resource Group to store your action group (e.g. a monitor resource group)

- Give the Action Group a name, and give it a Display name

- Specify the notification type (e.g. Email/SMS message/Push/Voice)

- For this article, we will add an Email (but you can have it ring a number, push a notification to the Azure Mobile App)

- Enter your details, then click Next: Actions

- The Actions pane is where you can trigger automation, such as Azure Logic Apps, Runbooks, ITSM connections, Webhooks etc., to help self-remediate issues or provide richer notifications, such as a Logic App that posts in a Teams channel when an alert is fired, or a webhook that triggers a webpage to update. In this example, we will leave it empty, rely on email notifications, and click Next: Tags

- Enter any Tags and click Review + create

- Make note of Suppress Notifications; this could be handy during scheduled maintenance windows where backups may fail due to approved work.

- Once the Action Group has been created, click Next: Scheduling

- Select Always

- Click Next: Details

- Select a Resource Group in which to place the alert processing rule

- Enter a rule name and description, then click Review + Create
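The effect of the alert processing rule we just created, together with an optional Suppress Notifications rule for a maintenance window, can be modelled roughly as below. This is a simplified, hypothetical Python sketch (resource and action group names are made up), and it assumes a matching suppression rule takes precedence over an action group rule.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Alert:
    resource_id: str
    condition: str  # "Fired" or "Resolved"


@dataclass(frozen=True)
class ProcessingRule:
    kind: str                      # hypothetical: "apply_action_group" or "suppress"
    scope: frozenset               # resource IDs the rule applies to
    alert_condition: str = "Fired"
    action_group: Optional[str] = None
    window: Optional[Tuple[datetime, datetime]] = None  # None means "Always"

    def matches(self, alert: Alert, at: datetime) -> bool:
        in_window = self.window is None or self.window[0] <= at < self.window[1]
        return (alert.resource_id in self.scope
                and alert.condition == self.alert_condition
                and in_window)


def route(alert: Alert, rules: List[ProcessingRule], at: datetime) -> List[str]:
    """Return the action groups to notify; a matching suppression rule wins."""
    matching = [r for r in rules if r.matches(alert, at)]
    if any(r.kind == "suppress" for r in matching):
        return []
    return [r.action_group for r in matching if r.kind == "apply_action_group"]


vault = "rsv-prod-ae"  # hypothetical vault resource ID
rules = [
    ProcessingRule("apply_action_group", frozenset({vault}), action_group="ag-backup-email"),
    ProcessingRule("suppress", frozenset({vault}),
                   window=(datetime(2023, 9, 16, 22), datetime(2023, 9, 17, 2))),
]
print(route(Alert(vault, "Fired"), rules, datetime(2023, 9, 15, 9)))   # ['ag-backup-email']
print(route(Alert(vault, "Fired"), rules, datetime(2023, 9, 16, 23)))  # []
```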

As you can see, Azure Monitor's integration with Azure Backup gives you some great options to keep on top of your backups and integrate with other systems, like your IT Service Management toolsets.

Azure Site Recovery

Azure Site Recovery (ASR) can be used to migrate workloads across Availability Zones and regions by replicating the disks of a Virtual Machine to another region (GRS) or zone (ZRS); in fact, Azure Resource Mover uses Azure Site Recovery when moving virtual machines between regions. Azure Site Recovery can also replicate workloads from outside of Azure into Azure for disaster recovery.

When migrating workloads to Azure from a VMware stack, consider using the Azure Site Recovery Deployment Planner for VMware to Azure.

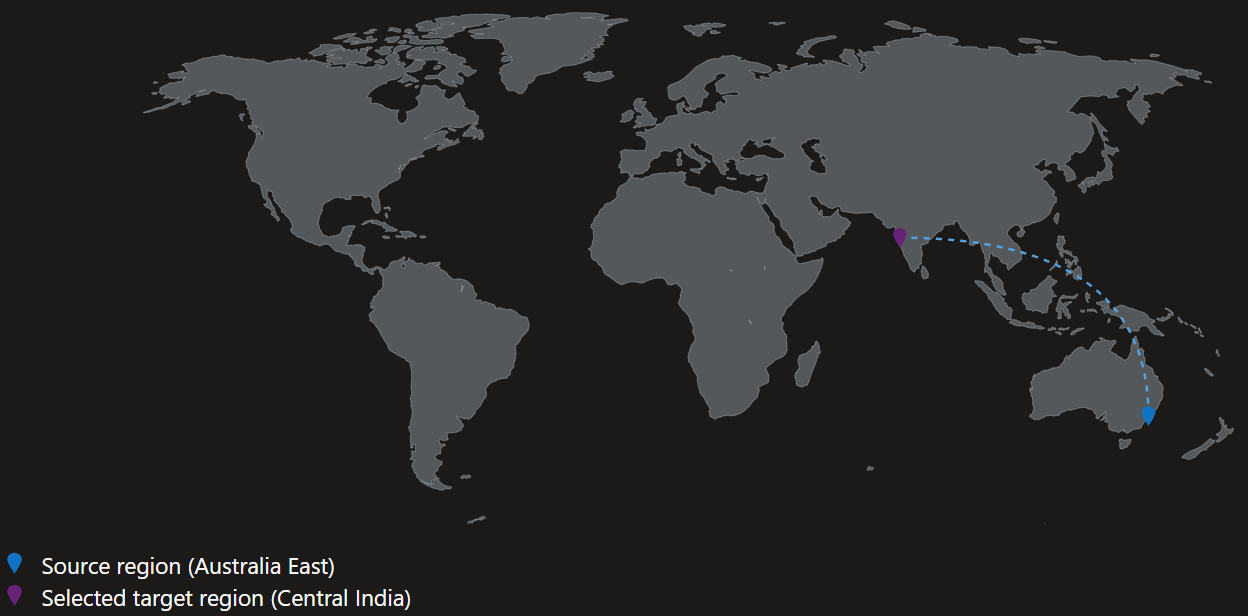

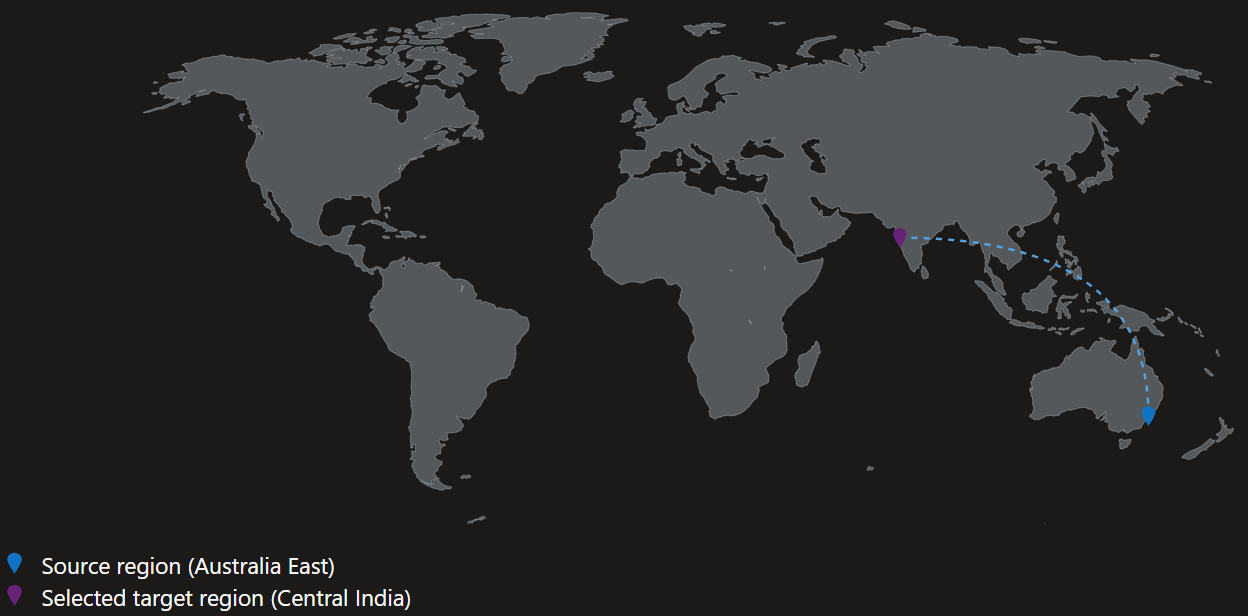

For the purposes of this guide, we will achieve disaster recovery of our virtual machine by replicating it to another region (e.g. from Australia East to Central India).

Azure Recovery Services contributes to your BCDR strategy:

Site Recovery service: Site Recovery helps ensure business continuity by keeping business apps and workloads running during outages. Site Recovery replicates workloads running on physical and virtual machines (VMs) from a primary site to a secondary location. When an outage occurs at your primary site, you fail over to a secondary location, and access apps from there. After the primary location is running again, you can fail back to it.

Backup service: The Azure Backup service keeps your data safe and recoverable.

Just as important as the technology (if not more so), clear business requirements and preparation are paramount for a successful disaster recovery solution. I highly recommend the Azure Business Continuity Guide. Supplied by the Microsoft FastTrack team, this guide includes resources to prepare a thorough disaster recovery plan.

The key to successful disaster recovery is not only the workloads themselves but also the supporting services, such as DNS, firewall rules, and connectivity, that need to be considered. These are out of the scope of this article, but the following Microsoft Azure architecture references are worth a read:

For Azure Site Recovery to work, it relies on the Mobility service running within the Virtual Machine to replicate changes, so the source virtual machine needs to be running for changes to replicate.

When you enable replication for a VM to set up disaster recovery, the Site Recovery Mobility service extension installs on the VM and registers it with Azure Site Recovery. During replication, VM disk writes are sent to a cache storage account in the source region. Data is sent from there to the target region, and recovery points are generated from the data.

Azure Site Recovery does not currently support virtual machines protected with Trusted Launch.

Enable Azure Site Recovery

For this example, we have 'VM1', an Ubuntu workload running in Australia East with a public IP, which we will fail over to Central India. The source Virtual Machine can be backed up normally by a vault in the source region and replicated to another vault in the destination region.

Azure Site Recovery has specific operating system and Linux kernel support requirements, so make sure you confirm that your workloads are supported.

- Navigate to Backup Center

- Click on Vaults

- Create a new Recovery Services vault in your DR (disaster recovery) region, e.g. Central India

- Click on Site Recovery

- Under Azure Virtual Machines, click on: Enable replication

- Specify the source location, subscription and resource group of the Virtual Machine you wish to replicate

- Click Next

- Select your source Virtual Machine

- Click Next

- Select the target location (i.e. Central India)

- Select the target Resource Group

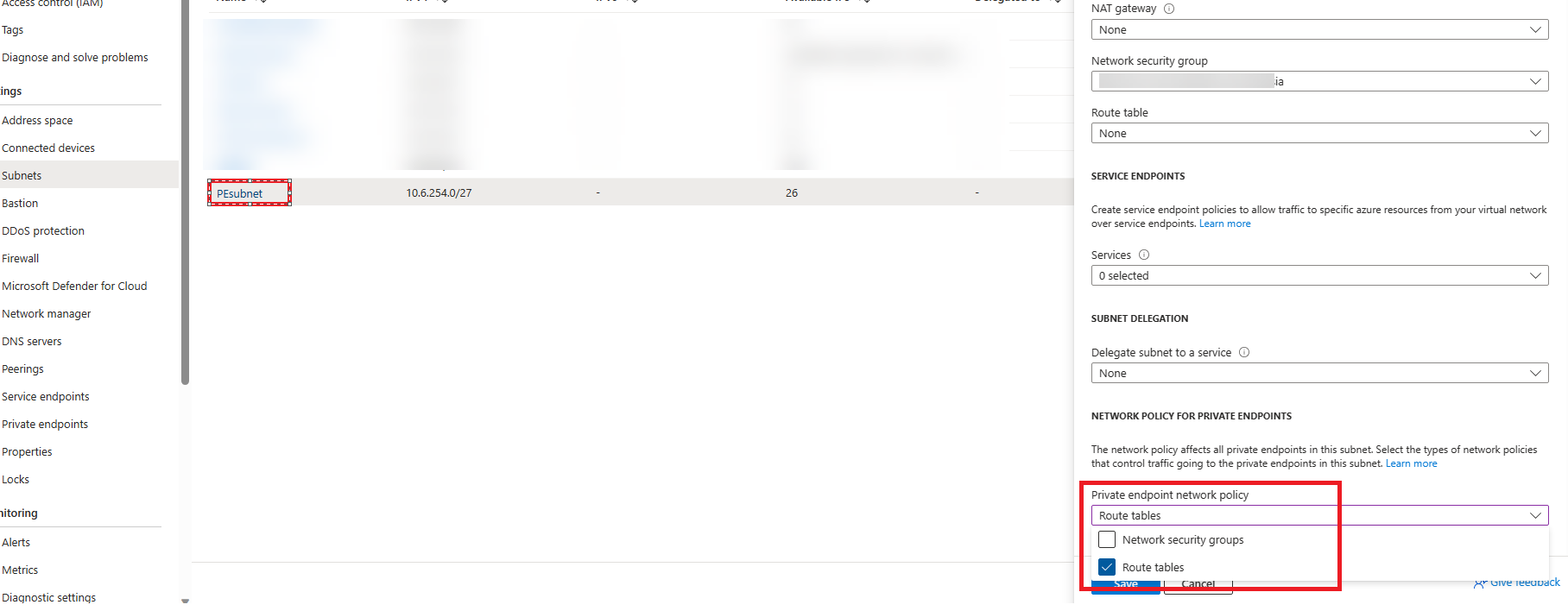

- Select the Target Virtual Network (create one if it doesn't exist)

- Select the target subnet

- Under Storage, you can consider changing the replica disk type to Standard to reduce cost (this can be changed later).

- Select a cache storage account (the cache storage account is used to hold the replication data before it's written to the destination disk)

- You can then adjust the availability zone of the destination virtual machine

- Click Next

- Here we can define a replication policy (a set of rules and configurations in Azure Site Recovery that determines how data is replicated from the source environment to the target environment in case of a disaster or planned failover, including retention, i.e. you can restore to any point within the retention period). We will leave the default 24-hour retention policy.

- We can specify a replication group: a set of virtual machines that are replicated together so that they share consistent recovery points. An example of a replication group is application servers whose data needs to be consistent with each other.

- Specify an Automation account to manage the Mobility service, and leave the extension update setting as ASR (Azure Site Recovery) managed.

- Click Next

- Click Enable replication

- At the Recovery Services vault, under Site Recovery jobs, you can monitor the registration. Registration and initial replication can take 30-60 minutes while the agent is installed and replication starts.

Failover to the secondary region using Azure Site Recovery

Once your virtual machine has been replicated to the secondary region, you can perform a Failover or a Test failover. A Test failover is recommended for DR testing and application testing.

| Aspect | Failover | Test Failover |
|---|---|---|
| Purpose | To switch to a secondary site during a disaster or planned maintenance event. | To validate your disaster recovery plan without impacting production. |
| Impact on Production | Disrupts production services as the primary site becomes unavailable during the failover process. | No impact on production services; the primary site remains operational. |
| Data Replication | Replicates data from primary to secondary site, making it the active site during the failover. | Uses the same replicated data but doesn't make the secondary site the active site; it's for testing purposes only. |
| Recovery Time | Longer recovery time, as it involves setting up and activating the secondary site. | Faster recovery time, as it doesn't require making the secondary site the active site. |
| Data Consistency | Ensures data consistency and integrity during the failover process. | Ensures data consistency for testing but doesn't make the secondary site the primary site. |
| Cost | May incur additional costs due to the resources activated at the secondary site. | Typically incurs minimal additional costs as it's for testing purposes. |
| Use Cases | Actual disaster recovery scenarios or planned maintenance events. | Testing and validating disaster recovery procedures, training, and compliance. |
| Post-Operation | The secondary site becomes the new primary site until failback is initiated. | No change to the primary site; the secondary site remains inactive. |
| Rollback Option | Failback operation is required to return to the primary site once it's available. | No need for a rollback; the primary site remains unaffected. |

- Navigate to your destination Recovery Services Vault

- Click on Replicated items

- Select the Virtual Machine you wish to recover in your second region

- Select Test Failover (or Failover, depending on your requirements)

- Select your Recovery point and destination Virtual network

- Select Failover

- If it is a test failover, select Cleanup test failover (which deletes the virtual machines created during the test) once you have finished testing
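When selecting a recovery point, only points inside the replication policy's retention window (24 hours by default, as configured earlier) are available. The Python sketch below models picking the latest point, optionally restricted to app-consistent points. `RecoveryPoint` and `latest_recovery_point` are hypothetical names; ASR presents these choices for you in the portal.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class RecoveryPoint:
    created: datetime
    app_consistent: bool


def latest_recovery_point(points: List[RecoveryPoint], now: datetime,
                          retention_hours: int = 24,
                          app_consistent_only: bool = False) -> Optional[RecoveryPoint]:
    """Pick the newest point still inside the replication policy retention window."""
    cutoff = now - timedelta(hours=retention_hours)
    candidates = [p for p in points
                  if p.created >= cutoff and (p.app_consistent or not app_consistent_only)]
    return max(candidates, key=lambda p: p.created, default=None)


now = datetime(2023, 9, 15, 12, 0)
points = [RecoveryPoint(now - timedelta(hours=30), True),   # outside 24h retention
          RecoveryPoint(now - timedelta(hours=4), True),
          RecoveryPoint(now - timedelta(minutes=5), False)]
print(latest_recovery_point(points, now).created)                            # 2023-09-15 11:55:00
print(latest_recovery_point(points, now, app_consistent_only=True).created)  # 2023-09-15 08:00:00
```

The trade-off mirrors the portal: the newest crash-consistent point minimises data loss, while an app-consistent point is older but application-aware.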

Azure Policies

Mapping Virtual Machines to backup policies can be automated using Azure Policy.

Azure policies such as:

- Azure Backup should be enabled for Virtual Machines

- Configure backup on virtual machines without a given tag to an existing recovery services vault in the same location

- Disable Cross Subscription Restore for Backup Vaults

- Soft delete should be enabled for Backup Vaults

These, and more, are built into the Azure Policy engine, can be found in Backup Center, and can easily be assigned across subscriptions and management groups.

- Navigate to Backup Center

- Click on Azure policies for backup

- Click on a policy and click Assign
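To reason about what the "Configure backup on virtual machines without a given tag to an existing recovery services vault in the same location" policy would remediate, here is a simplified Python sketch. The VM dictionaries, the `NoBackup` tag, and the function name are hypothetical; the real evaluation is done by Azure Policy.

```python
def vms_to_remediate(vms, vault_location, exclusion_tag_name, exclusion_tag_values):
    """Simplified view of which VMs the policy would configure for backup.

    A VM qualifies if it is in the vault's location, does not carry the
    exclusion tag with one of the excluded values, and is not already protected.
    """
    return [
        vm["name"] for vm in vms
        if vm["location"] == vault_location
        and vm["tags"].get(exclusion_tag_name) not in exclusion_tag_values
        and not vm["backup_enabled"]
    ]


# Hypothetical inventory for illustration only.
vms = [
    {"name": "VM1", "location": "australiaeast", "tags": {}, "backup_enabled": False},
    {"name": "VM2", "location": "australiaeast", "tags": {"NoBackup": "true"}, "backup_enabled": False},
    {"name": "VM3", "location": "centralindia", "tags": {}, "backup_enabled": False},
    {"name": "VM4", "location": "australiaeast", "tags": {}, "backup_enabled": True},
]
print(vms_to_remediate(vms, "australiaeast", "NoBackup", ["true"]))  # ['VM1']
```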

You can find a list of custom and built-in policies at the AzPolicyAdvertizerPro website.

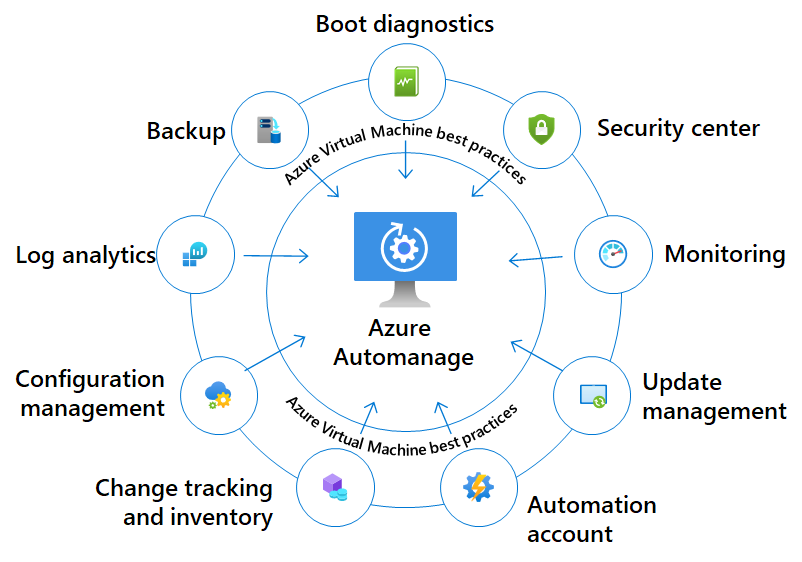

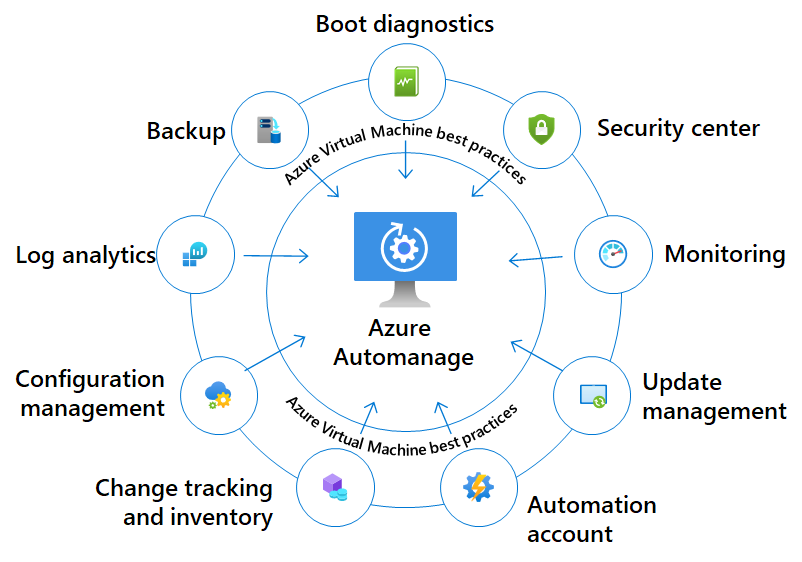

Azure Automanage can be used alongside Azure Policy to automatically onboard Virtual Machines into backup, patching, etc., with reduced manual intervention. Although it is not directly part of this article, what you have learned can be used to develop your Automanage profiles.

As you can see, there are a range of cmdlets we have to work with.
