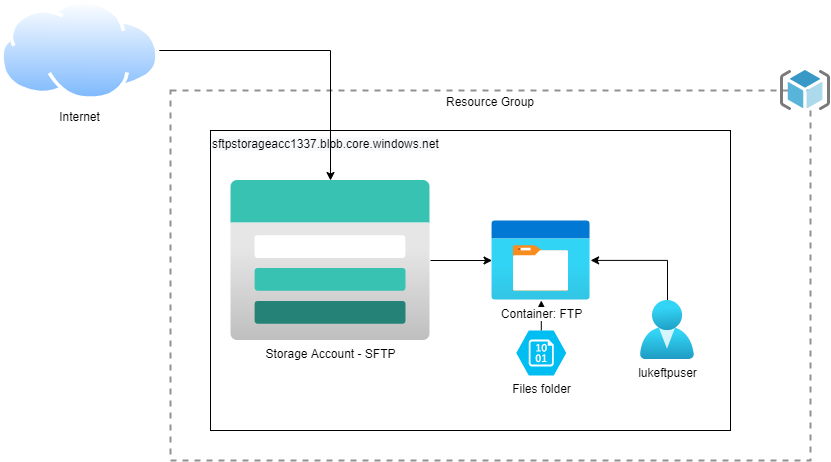

SSH File Transfer Protocol (SFTP) is now supported in Preview for Azure Blob Storage accounts with hierarchical namespace enabled.

Although tools such as Storage Explorer, Data Factory and AzCopy allow you to copy data to and from Azure storage accounts, sometimes your applications need a more traditional integration. SFTP is a welcome addition to the Microsoft Azure ecosystem, and in some cases it removes the need for additional Virtual Machines.

This support enables standard SFTP connectivity to an Azure Storage account. As an Azure PaaS (Platform as a Service) resource, it offers additional flexibility, reduces operational overhead, and increases redundancy and scalability.

We will run through the initial setup of the Azure Storage account using the Azure Portal.

SFTP using an Azure Storage account does not support shared access signature (SAS) or Microsoft Entra ID (Azure AD) authentication for connecting SFTP clients. Instead, SFTP clients must use a password or a Secure Shell (SSH) private/public keypair.

Before we head into the implementation, a bit of housekeeping: at the time this post was written, SFTP support is still in Preview, so the functionality MAY change by the time it becomes GA (Generally Available).

During the public preview, the use of SFTP does not incur any additional charges. However, the standard transaction, storage and networking prices for the underlying Azure Data Lake Storage Gen2 account still apply. SFTP might incur additional charges when the feature becomes generally available. During the preview, SFTP support is only available in certain regions.

You can connect to the SFTP storage account with a user that is local to the storage account, using an SSH public-private keypair, a password, or both. You can also set up an individual HOME directory for each user (maximum of 1000 local user accounts); because of the hierarchical namespace, these are folders, not containers.

Creating an Azure Storage account for SFTP

This article assumes you have an Azure subscription and the rights to create a new Storage account resource. If you want to use an existing storage account instead, it must meet the following prerequisites:

- A standard general-purpose v2 or premium block blob storage account. You can also enable SFTP as you create the account.

- The account redundancy option of the storage account is set to either locally-redundant storage (LRS) or zone-redundant storage (ZRS); GRS is not supported.

- The hierarchical namespace feature of the account must be enabled for existing storage accounts. To enable the hierarchical namespace feature, see Upgrade Azure Blob Storage with Azure Data Lake Storage Gen2 capabilities.

- If you're connecting from an on-premises network, make sure that your client allows outgoing communication through port 22, which is the port SFTP uses.
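To check an existing account against the prerequisites above, here is a minimal Python sketch. It assumes you have exported the account's settings into a dictionary with the same shape as the ARM/Bicep resource: `kind`, `sku.name` and `properties.isHnsEnabled`, which are the property names used in the Bicep template later in this article. The helper name and the SKU table are hypothetical and only for illustration. They are not an Azure API.

```python
from typing import Any

# Hypothetical lookup table: account kinds and SKUs that satisfy the
# prerequisites above (general-purpose v2 or premium block blob, LRS or ZRS only).
SUPPORTED_SKUS = {
    "StorageV2": {"Standard_LRS", "Standard_ZRS"},       # general-purpose v2
    "BlockBlobStorage": {"Premium_LRS", "Premium_ZRS"},  # premium block blob
}


def sftp_prerequisite_problems(account: dict[str, Any]) -> list[str]:
    """Return the reasons an account can't be used for SFTP (empty list = OK).

    `account` is assumed to look like the ARM/Bicep resource:
    {"kind": ..., "sku": {"name": ...}, "properties": {"isHnsEnabled": ...}}
    """
    problems: list[str] = []
    kind = account.get("kind")
    sku = account.get("sku", {}).get("name")
    hns = account.get("properties", {}).get("isHnsEnabled", False)

    if kind not in SUPPORTED_SKUS:
        problems.append(f"unsupported account kind: {kind}")
    elif sku not in SUPPORTED_SKUS[kind]:
        # GRS / RA-GRS end up here because they are not in the table
        problems.append(f"unsupported SKU/redundancy for {kind}: {sku}")
    if not hns:
        problems.append("hierarchical namespace (isHnsEnabled) is not enabled")
    return problems


ok = {"kind": "StorageV2", "sku": {"name": "Standard_ZRS"},
      "properties": {"isHnsEnabled": True}}
print(sftp_prerequisite_problems(ok))  # []
```

An empty list means the account meets the checks modelled here. Port 22 access from your client network still has to be verified separately.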

Because the SFTP functionality is currently in Preview, Microsoft has asked that anyone interested in the SFTP Preview fill out a Microsoft Form.

This MAY be required before proceeding with the following steps. Initially, I believe it was required, but I know a few people who have registered the feature without completing the form. Either way, the SFTP Public Preview Interest form is a good opportunity to give Microsoft your use-case information directly and help shape the service going forward.

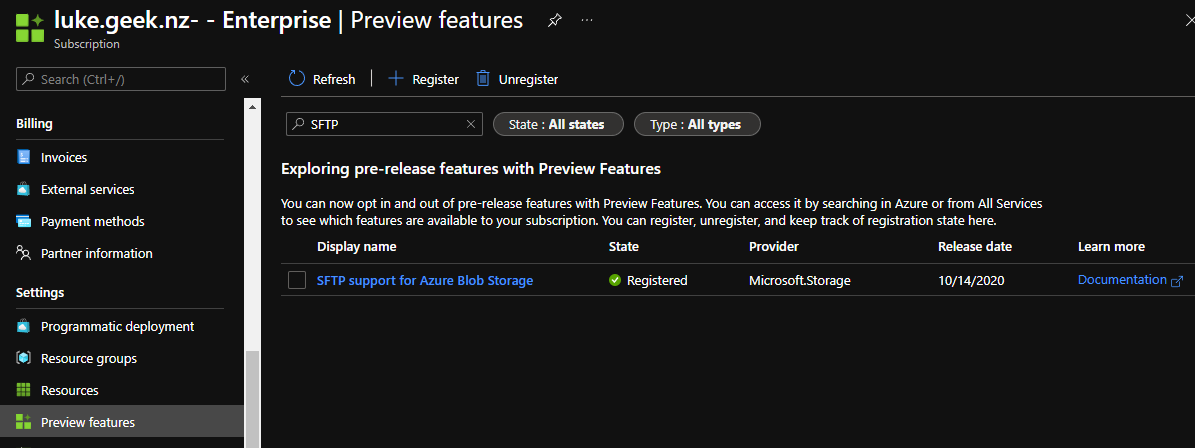

Registering the Feature

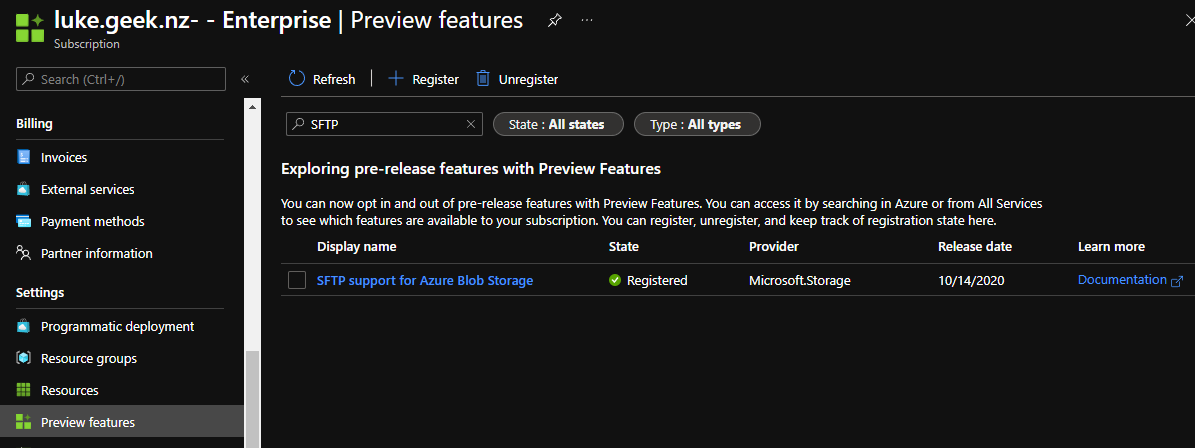

To create an Azure Storage account that supports SFTP, we first need to register the Preview feature.

- Log in to the Azure Portal

- Navigate to: Subscriptions

- Select the Subscription that you want to enable the SFTP preview for

- Click on: Preview features

- Search for: SFTP

- Click on: SFTP support for Azure Blob Storage and click Register. Registration can take anywhere from a few minutes to a few days, as each preview request may need to be manually approved by Microsoft personnel based on the Public Preview Interest form. My feature registration went through quite quickly, so either the approvals have been automated or I was just lucky.

As you can see in the screenshot below, I had already registered mine:

- You can keep refreshing until the status changes from: Registering to Registered.

- While we are here, let's check that the Microsoft.Storage resource provider is registered. It should already be, but it is worth checking now rather than getting a surprise when you try to create a resource. Click on Resource providers in the left-hand menu and search for: Storage. If it is set to NotRegistered, click on Microsoft.Storage and click Register.

To register the SFTP feature using PowerShell, you can run the following cmdlet:

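```powershell
# Register the SFTP preview feature for the Microsoft.Storage provider on the current subscription
Register-AzProviderFeature -FeatureName "AllowSFTP" -ProviderNamespace "Microsoft.Storage"
```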

Create the Azure Storage Account

Now that the Preview feature has been registered, we can create a new Storage account.

- Log in to the Azure Portal

- Click on +Create a resource

- Type in: Storage account, select the Storage account resource from Microsoft and click Create

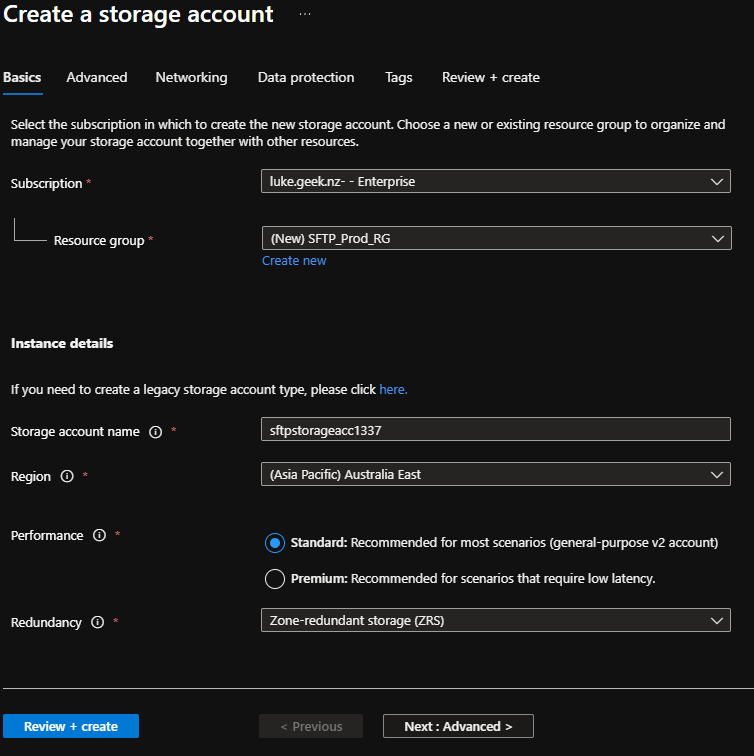

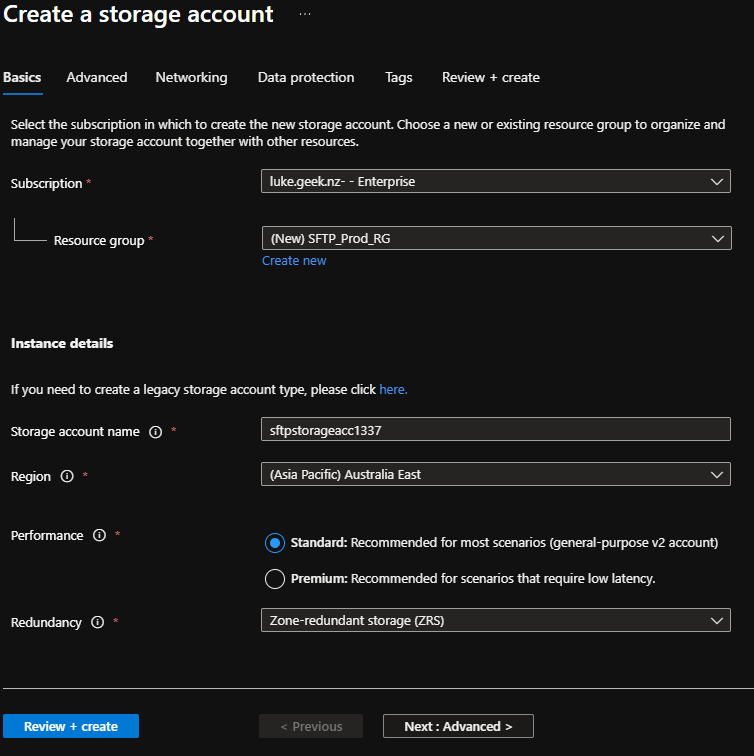

- Select the Subscription you enabled the SFTP feature in earlier

- Select your Resource Group (or create a new resource group) to place your storage account into.

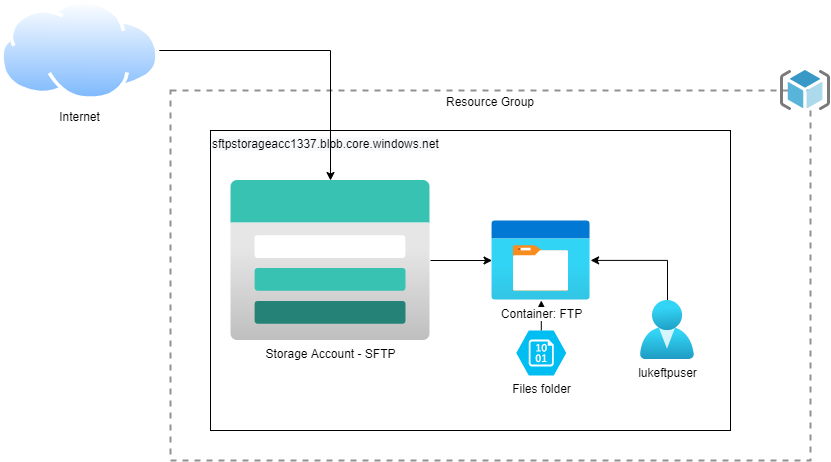

- Enter your storage account name. This needs to be globally unique and between 3 and 24 characters long, using lowercase letters and numbers only. In my example, I am going with: sftpstorageacc1337

- Select your Region; remember that only specific regions have SFTP support at the time of this article.

- Select your performance tier. Premium is supported, but if you choose it, remember to select Block blobs as the premium account type; I will select Standard.

- Select your Redundancy; remember that GRS and RA-GRS aren't supported at this time. I will select Zone-redundant storage (ZRS) so that my storage account is replicated across the three availability zones, but you can also select LRS (Locally Redundant Storage).

- Click Next: Advanced

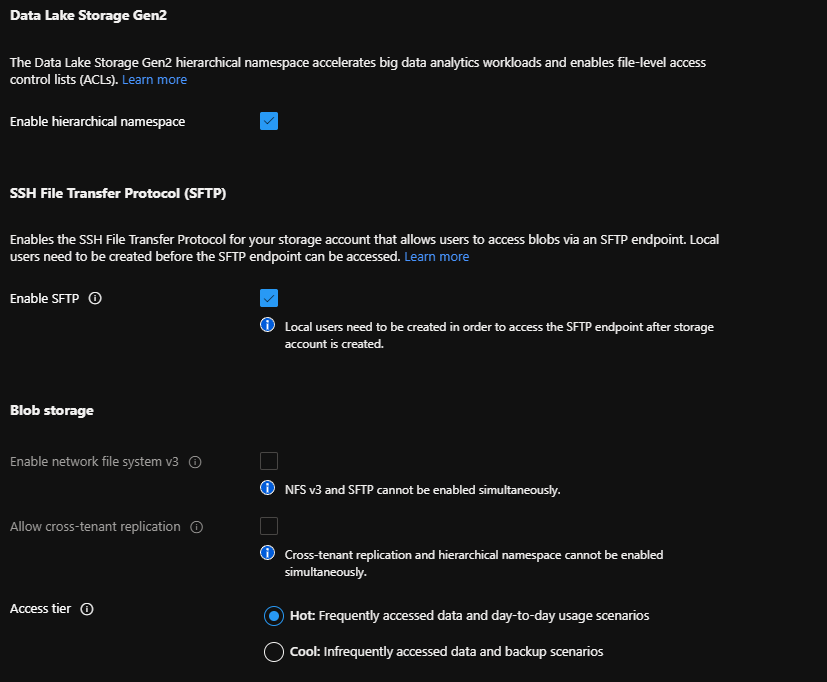

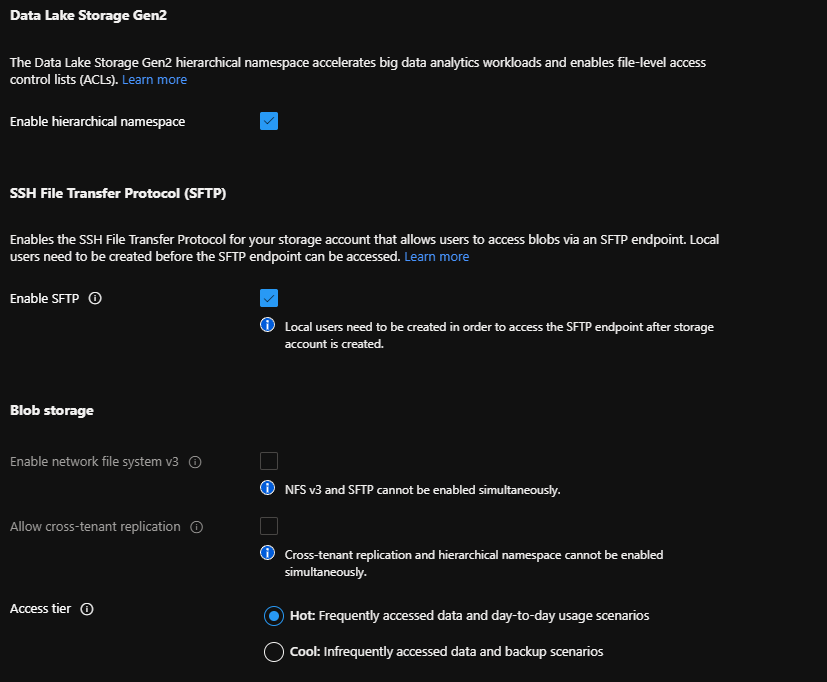

- Leave the Security options as-is and check: Enable hierarchical namespace under the Data Lake Storage Gen2 subheading.

- Click Enable SFTP

- Click: Next: Networking

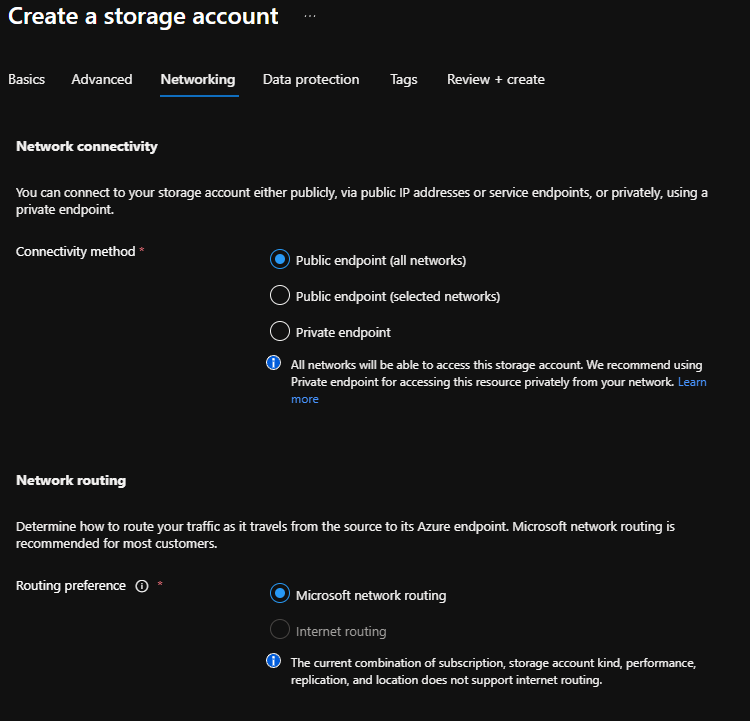

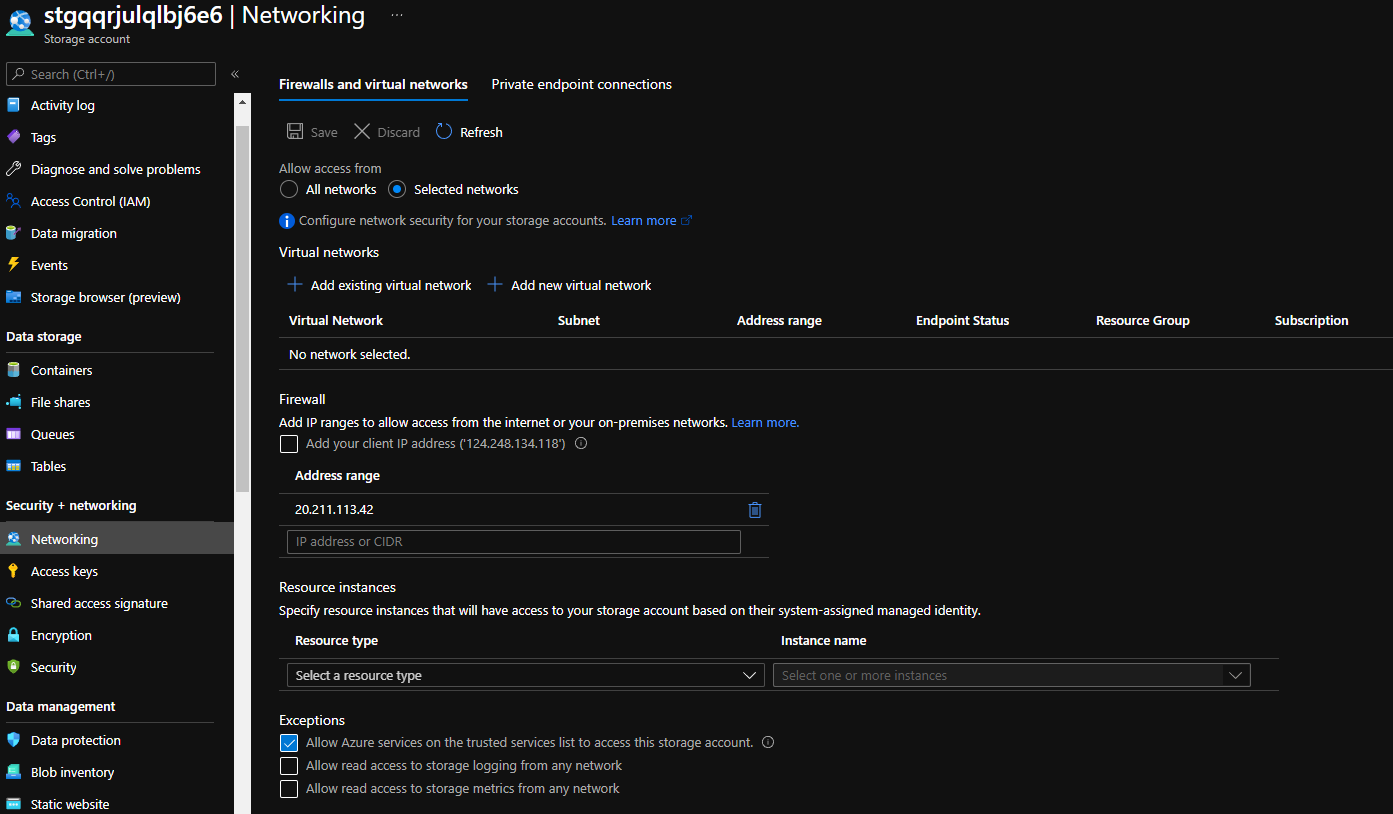

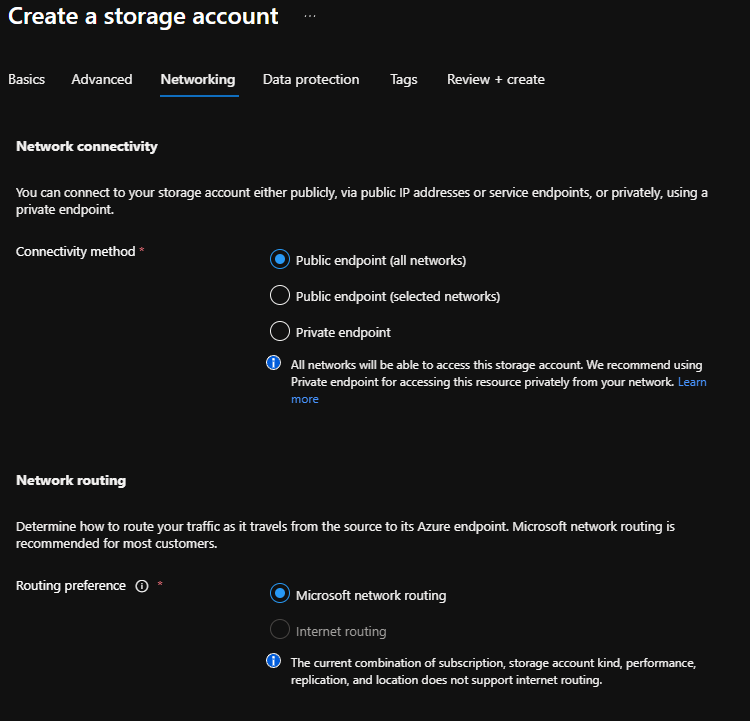

- SFTP supports Private Endpoints (as a blob storage sub-resource), but in this case, I will be keeping Connectivity as a Public endpoint (all networks)

- Click Next: Data Protection

- Here you can enable soft delete for your blobs and containers, so that a deleted file is retained for a set period before it is permanently deleted. I am going to leave mine at the default of 7 days and click: Next: Tags.

- Add any applicable Tags, e.g. who created it, when you created it and what you created it for, and click Review + Create

- Review your configuration, make sure that both Enable SFTP and Hierarchical namespace are enabled, and click Create.

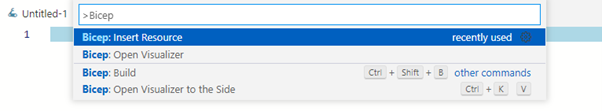

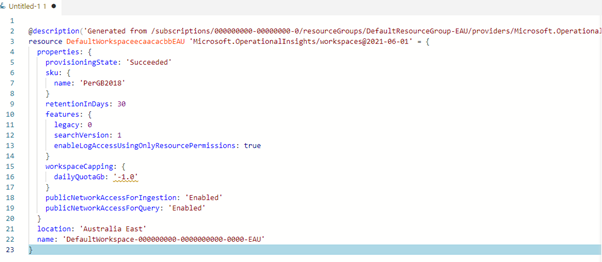

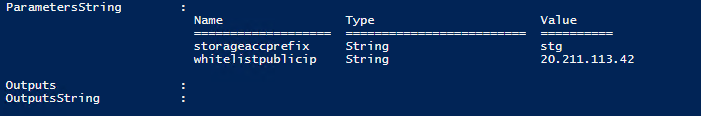
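The naming rule from the steps above is easy to get wrong when scripting, so here is a small Python sketch that checks it. Azure storage account names must be 3 to 24 characters long and use only lowercase letters and digits. Global uniqueness can only be confirmed by Azure itself. The sketch also computes the longest prefix that the Bicep template below can accept, because Bicep's `uniqueString()` always returns 13 characters. The function and constant names are hypothetical.

```python
import re

# Local naming rule: 3-24 characters, lowercase letters and digits only.
# Global uniqueness cannot be checked locally; Azure enforces it at creation time.
_NAME_RE = re.compile(r"[a-z0-9]{3,24}")

# Bicep's uniqueString() returns a 13-character string, so a prefix
# combined with it can be at most 24 - 13 characters long.
UNIQUE_STRING_LENGTH = 13
MAX_BICEP_PREFIX = 24 - UNIQUE_STRING_LENGTH


def is_valid_storage_account_name(name: str) -> bool:
    """Check the local naming rules for an Azure storage account name."""
    return _NAME_RE.fullmatch(name) is not None


print(is_valid_storage_account_name("sftpstorageacc1337"))  # True
print(is_valid_storage_account_name("SFTP-Storage"))        # False
print(MAX_BICEP_PREFIX)                                     # 11
```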

In case you are interested in Infrastructure as Code, here is an Azure Bicep file I created that deploys a storage account ready for SFTP to a Resource Group, ready for the next steps:

storageaccount.bicep

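```bicep
// Optional prefix for the storage account name. uniqueString() returns 13 characters,
// so the prefix must be 11 characters or fewer (lowercase letters and numbers only)
// to stay within the 24-character limit.
@maxLength(11)
param storageaccprefix string = ''

// Deploy into the same region as the target Resource Group
var location = resourceGroup().location

resource storageacc 'Microsoft.Storage/storageAccounts@2021-06-01' = {
  name: '${storageaccprefix}${uniqueString(resourceGroup().id)}'
  location: location
  sku: {
    // SFTP requires LRS or ZRS redundancy; GRS is not supported
    name: 'Standard_ZRS'
  }
  kind: 'StorageV2'
  properties: {
    defaultToOAuthAuthentication: false
    allowCrossTenantReplication: false
    minimumTlsVersion: 'TLS1_2'
    allowBlobPublicAccess: true
    allowSharedKeyAccess: true
    // Hierarchical namespace (Data Lake Storage Gen2) is required for SFTP
    isHnsEnabled: true
    supportsHttpsTrafficOnly: true
    encryption: {
      services: {
        blob: {
          keyType: 'Account'
          enabled: true
        }
      }
      keySource: 'Microsoft.Storage'
    }
    accessTier: 'Hot'
  }
}
```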

Set up SFTP

Now that you have a compatible Azure storage account, it is time to enable SFTP!

- Log in to the Azure Portal

- Navigate to the Storage account you have created for SFTP and click on it

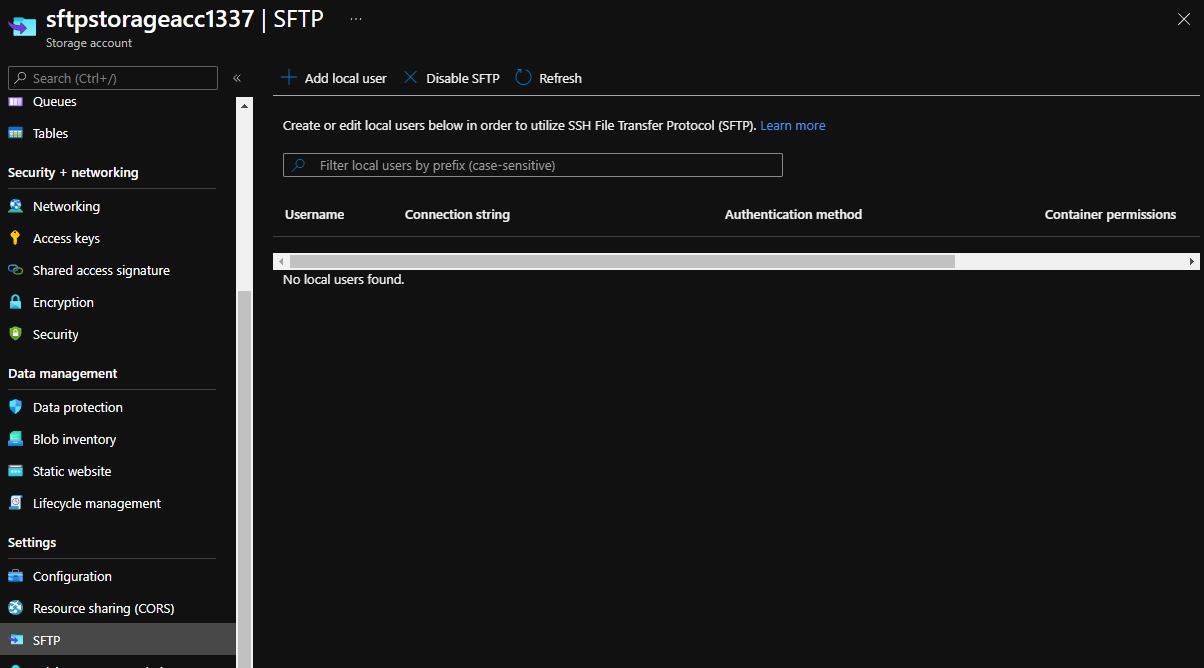

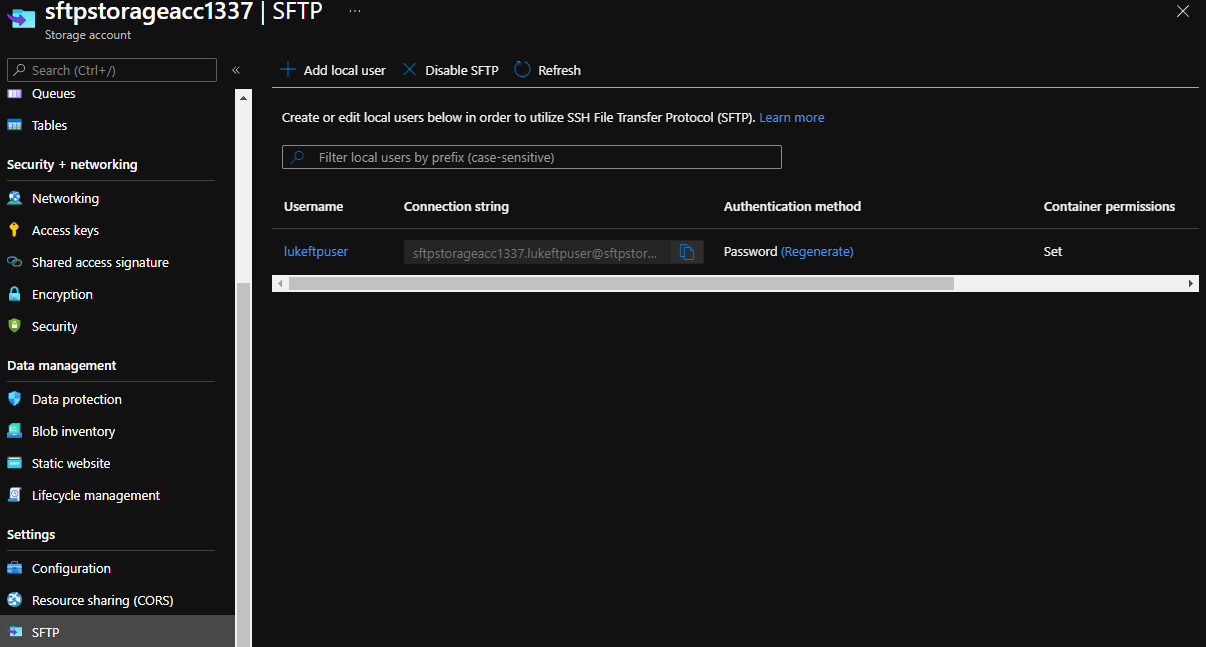

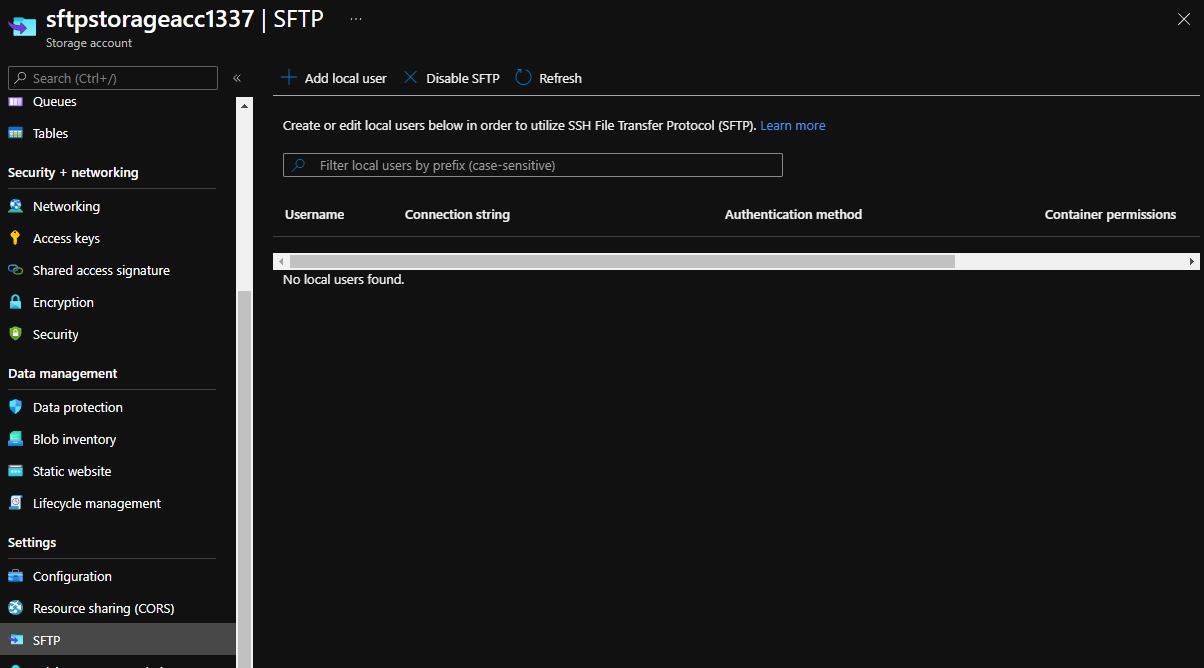

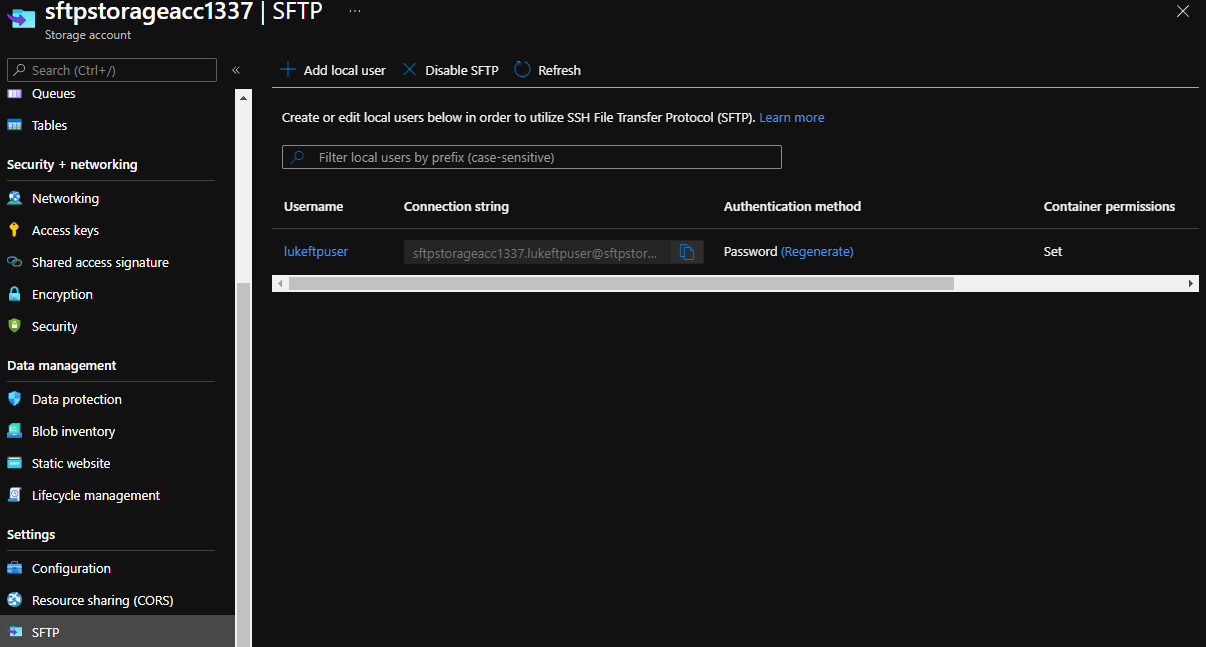

- On the Storage account blade, under Settings, you will see: SFTP

- Click on SFTP and click + Add local user.

- Type in the username you would like to use. Remember that you can have up to 1000 local users, and there is currently no integration with Azure AD, Active Directory or other authentication services. In my example, I will use: lukeftpuser

- You can use SSH keys, passwords, or both. In this article, I am simply going to use a password, so I select: SSH Password.

- Click Next

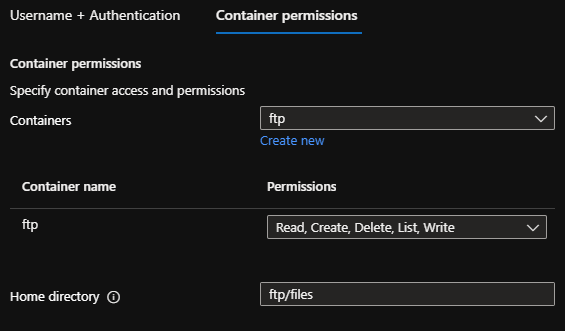

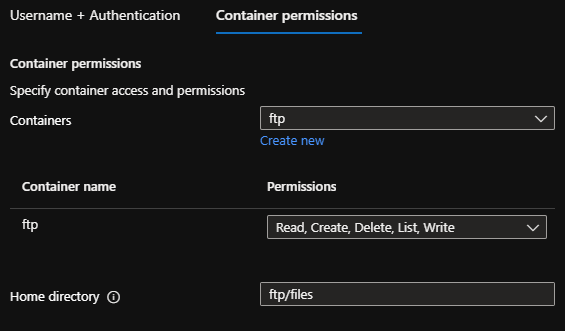

- Our storage account is empty, so we now need to create a top-level container. I will select Create new and set the name to: ftp

- I will leave the Public access level set to Private (no anonymous access)

- Click Ok

- Now that the ftp container has been created, we need to set the permissions. I am simply going to grant Read, Create, Delete, List and Write. If your users only need to read or list contents, those are the only permissions you need to grant. These permissions apply to the Container, not to a folder, so if access isn't managed appropriately, your users may have permissions to other folders in the same Container.

- Now we set the Home directory. This is the directory the user is automatically mapped to when they connect. It is optional, but if no Home directory is set for the user, they will need to navigate to the appropriate folder manually after connecting to SFTP. The home directory must be a relative path, e.g. ftp/files (the container name followed by the files folder inside the ftp container).

- Because we selected SSH Password earlier, Azure automatically generates a new password for the account. You can generate a new password later, but you cannot choose the password yourself, so make sure you copy it and store it in a password vault of some kind. The password generated for me was 89 characters long.

- You should see the connection string of the user, along with the Authentication method and container permissions.
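To show how the container permissions and the relative home directory from the steps above fit together, here is a small Python model. I am assuming that the permissions are stored per container as single-letter codes: r, w, d, l and c for Read, Write, Delete, List and Create. `LocalUser`, `permission_string` and `home_directory_problems` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

# Assumed single-letter codes for the container permissions chosen in the portal
PERMISSION_CODES = {"Read": "r", "Write": "w", "Delete": "d", "List": "l", "Create": "c"}


@dataclass
class LocalUser:
    """Hypothetical model of an SFTP local user."""
    name: str
    # container name -> permission string; permissions apply to the whole container
    permission_scopes: dict[str, str]
    home_directory: str = ""  # optional, relative, e.g. "ftp/files"


def permission_string(selected: list[str]) -> str:
    """Turn the permission checkbox names into a compact permission string."""
    return "".join(PERMISSION_CODES[p] for p in selected)


def home_directory_problems(user: LocalUser) -> list[str]:
    """Basic sanity checks on a user's home directory (empty list = OK)."""
    home = user.home_directory
    if not home:
        return []  # optional: the user navigates to a folder manually after connecting
    if home.startswith("/"):
        return ["home directory must be relative, e.g. 'ftp/files'"]
    container = home.split("/", 1)[0]  # first path segment is the container
    if container not in user.permission_scopes:
        return [f"user has no permission scope on container '{container}'"]
    return []


perms = permission_string(["Read", "Create", "Delete", "List", "Write"])
user = LocalUser("lukeftpuser", {"ftp": perms}, "ftp/files")
print(perms)                          # rcdlw
print(home_directory_problems(user))  # []
```

The model also makes the warning above concrete. The scope key is the container (`ftp`) and not the `files` folder, so the user's permissions cover every folder in that container.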

Test Connectivity via SFTP to an Azure Storage Account

I will test connectivity to the SFTP Azure Storage account using Windows 11, although the same concepts apply across other operating systems (Linux, OSX, etc.).

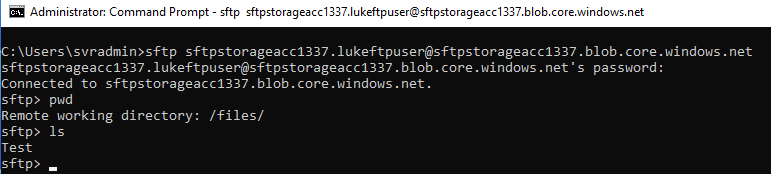

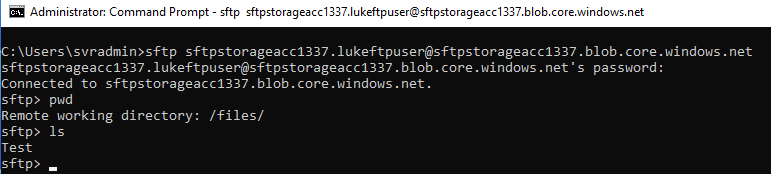

Test using SFTP from Windows using command prompt

- Make sure you have a copy of the Connection String and user password from the SFTP user account created earlier.

- Open Command Prompt

- Type in sftp CONNECTIONSTRING (example below) and press Enter

- If you are prompted to verify the authenticity of the host, check that it matches the name/URL of your storage account and type in: yes, to add the storage account to your known hosts list

- Press Enter and paste in the password that was generated for you earlier.

- You should be connected to the Azure Storage account via SFTP!

- As you can see below, I am in the Files folder, which is my user's home folder, and there is a file named: Test in it.

Once you have connected to SFTP using the Windows command line, you can type in: ?

This gives you a list of all the available commands you can run, e.g. to upload files.

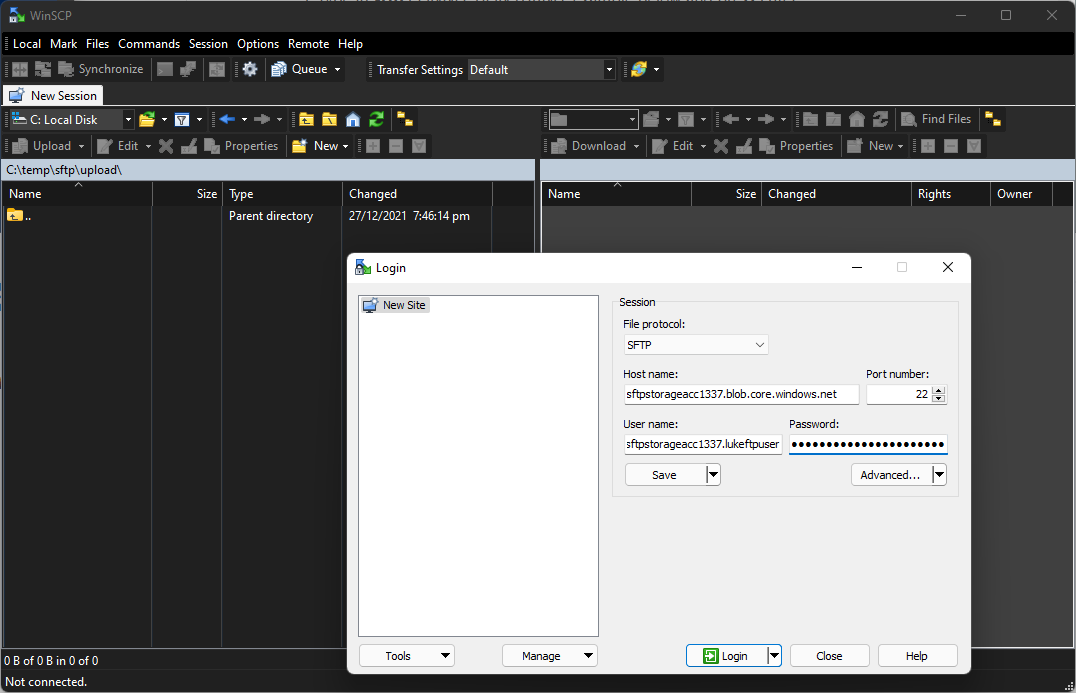

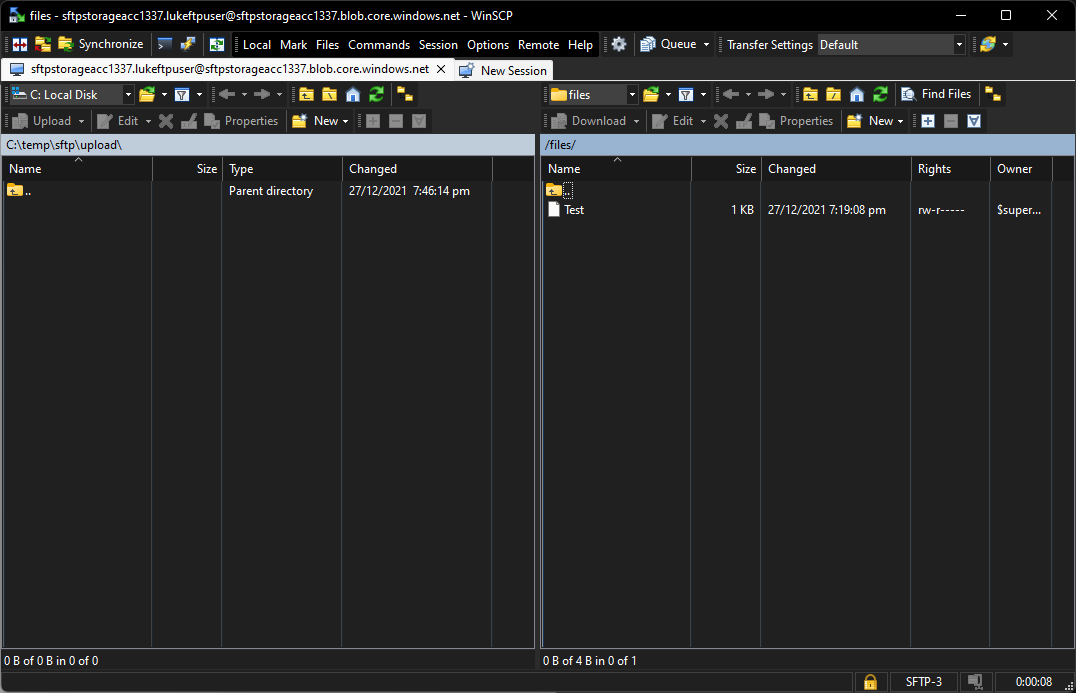

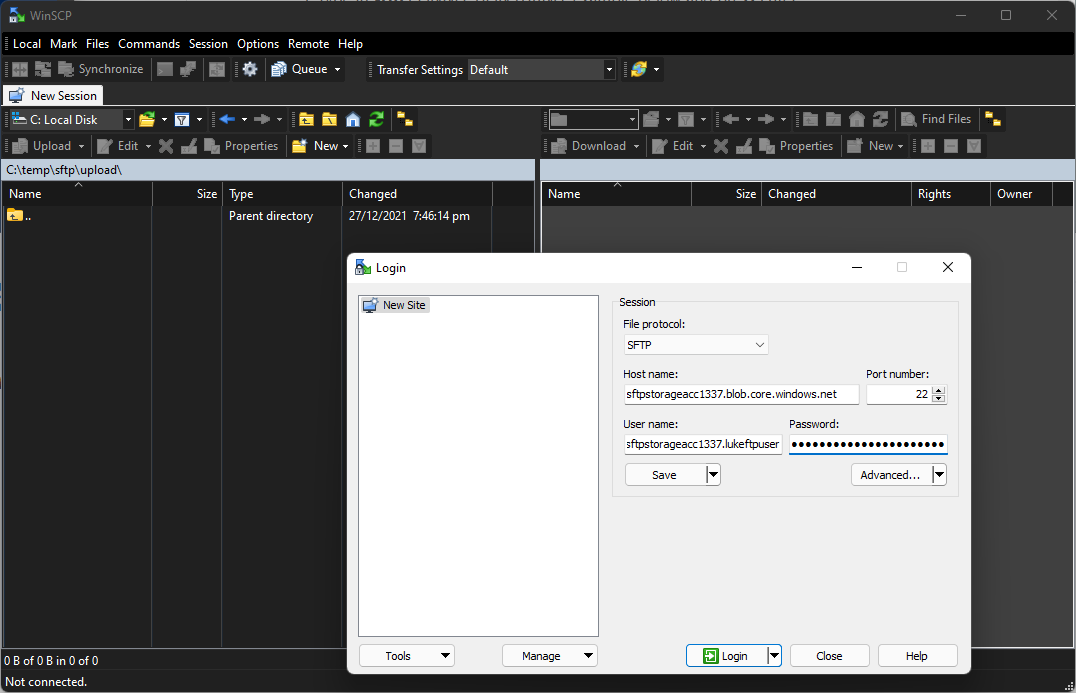

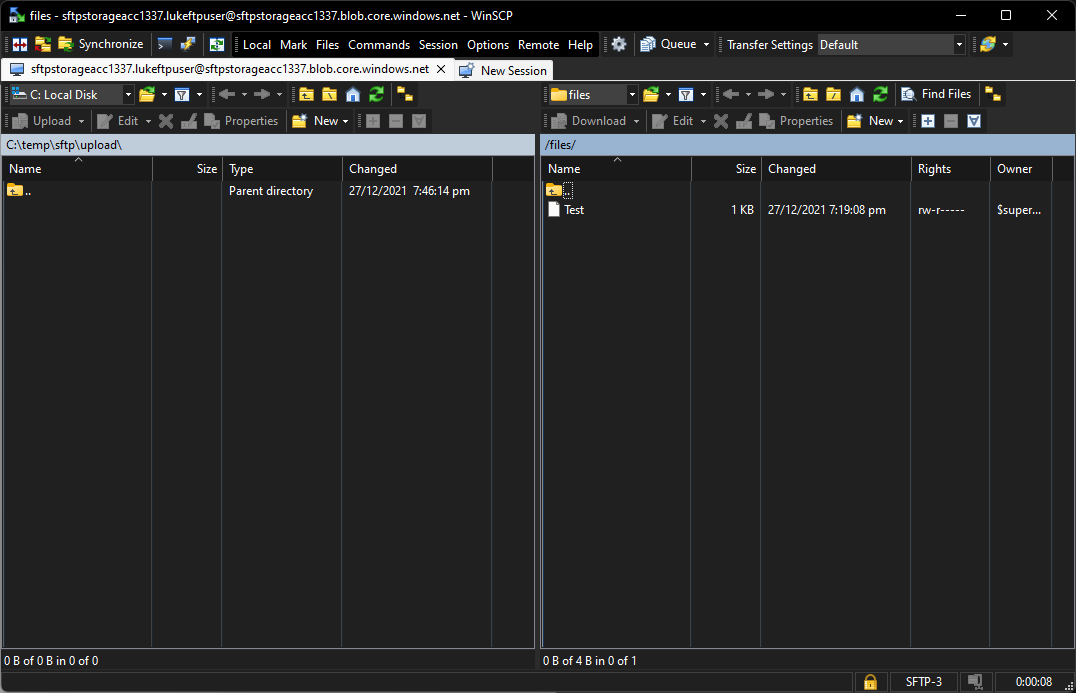
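If you want to script the command-prompt test instead of typing it by hand, the Python sketch below builds the connection string and the `sftp` command line. It assumes the `<account>.<user>@<account>.blob.core.windows.net` connection string format that the portal displays for a local user. `-P` is the OpenSSH `sftp` option for the port, and 22 is the default. The helper names are hypothetical.

```python
import shlex


def connection_string(account: str, user: str) -> str:
    """Build the <account>.<user>@<account>.blob.core.windows.net form (assumed format)."""
    return f"{account}.{user}@{account}.blob.core.windows.net"


def sftp_command(account: str, user: str, port: int = 22) -> list[str]:
    """argv for the OpenSSH sftp client; -P sets the port (22 is the default)."""
    return ["sftp", "-P", str(port), connection_string(account, user)]


cmd = sftp_command("sftpstorageacc1337", "lukeftpuser")
print(shlex.join(cmd))
# sftp -P 22 sftpstorageacc1337.lukeftpuser@sftpstorageacc1337.blob.core.windows.net
```

Passing `cmd` to `subprocess.run(cmd)` would start the same interactive session. The client still asks for the password, so nothing secret needs to go on the command line.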

Test using WinSCP

- Make sure you have a copy of the Connection String and user password from the SFTP user account created earlier.

- If you haven't already, download WinSCP and install it

- You should be greeted by the Login page (but if you aren't, click on Session, New Session)

- For the hostname, type in the URL for the storage account (after the @ in the connection string)

- For the username, type in everything before the @

- Type in your Password

- Verify that the port is 22 and file protocol is SFTP and click Login
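The WinSCP fields in the steps above come from splitting the connection string at the `@`. Here is a minimal Python sketch of that split. `WinScpLogin` and `split_connection_string` are hypothetical names, and the port and protocol defaults simply mirror the values the steps tell you to verify.

```python
from typing import NamedTuple


class WinScpLogin(NamedTuple):
    """Hypothetical container for the WinSCP login fields."""
    host_name: str
    user_name: str
    port: int = 22
    protocol: str = "SFTP"


def split_connection_string(conn: str) -> WinScpLogin:
    """Split '<user part>@<host>' at the last '@' into WinSCP login fields."""
    user, sep, host = conn.rpartition("@")
    if not sep or not user or not host:
        raise ValueError(f"not a user@host connection string: {conn!r}")
    return WinScpLogin(host_name=host, user_name=user)


login = split_connection_string(
    "sftpstorageacc1337.lukeftpuser@sftpstorageacc1337.blob.core.windows.net"
)
print(login.host_name)  # sftpstorageacc1337.blob.core.windows.net
print(login.user_name)  # sftpstorageacc1337.lukeftpuser
```

Note that the WinSCP username is the full `<account>.<user>` part, not just the local username you typed when creating the user.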

Congratulations! You have now created and tested connectivity to the Azure Storage SFTP service!